ARNodes Network Benchmarks.

In anticipation of moving the arnodes to the new Cisco SMB SG300 switches, I decided to gather some stats and benchmark numbers so that I can compare the performance afterwards. I used arnode07 and arnode08 for that purpose. No SGE jobs were running and the systems were completely quiescent.

Network Hardware and Configuration

First, the hardware. From lspci, each system has three onboard NICs (eth0, eth1 and eth2):

02:00.0 Ethernet controller: Intel Corporation 82574L Gigabit Network Connection

0a:00.0 Ethernet controller: Intel Corporation 82576 Gigabit Network Connection (rev 01)

0a:00.1 Ethernet controller: Intel Corporation 82576 Gigabit Network Connection (rev 01)

From lshw:

*-network

description: Ethernet interface

product: 82574L Gigabit Network Connection

vendor: Intel Corporation

physical id: 0

bus info: pci@0000:02:00.0

logical name: eth0

version: 00

serial: 00:e0:81:c2:03:f7

size: 1GB/s

capacity: 1GB/s

width: 32 bits

clock: 33MHz

capabilities: pm msi pciexpress msix bus_master cap_list ethernet physical tp 10bt 10bt-fd 100bt 100bt-fd 1000bt-fd autonegotiation

configuration: autonegotiation=on broadcast=yes driver=e1000e driverversion=2.3.2-k duplex=full firmware=1.8-0 ip=192.168.86.207 latency=0 link=yes multicast=yes port=twisted pair speed=1GB/s

resources: irq:17 memory:faee0000-faefffff ioport:cc00(size=32) memory:faedc000-faedffff

*-network:0

description: Ethernet interface

product: 82576 Gigabit Network Connection

vendor: Intel Corporation

physical id: 0

bus info: pci@0000:0a:00.0

logical name: eth1

version: 01

serial: 00:e0:81:c2:04:c2

size: 1GB/s

capacity: 1GB/s

width: 32 bits

clock: 33MHz

capabilities: pm msi msix pciexpress bus_master cap_list rom ethernet physical tp 10bt 10bt-fd 100bt 100bt-fd 1000bt-fd autonegotiation

configuration: autonegotiation=on broadcast=yes driver=igb driverversion=5.0.3-k duplex=full firmware=1.64, 0xe5ff0000 latency=0 link=yes multicast=yes port=twisted pair slave=yes speed=1GB/s

resources: irq:24 memory:fbb20000-fbb3ffff memory:fbb00000-fbb1ffff ioport:d880(size=32) memory:fbafc000-fbafffff memory:fbac0000-fbadffff memory:fbaa0000-fbabffff memory:fba80000-fba9ffff

*-network:1

description: Ethernet interface

product: 82576 Gigabit Network Connection

vendor: Intel Corporation

physical id: 0.1

bus info: pci@0000:0a:00.1

logical name: eth2

version: 01

serial: 00:e0:81:c2:04:c3

size: 1GB/s

capacity: 1GB/s

width: 32 bits

clock: 33MHz

capabilities: pm msi msix pciexpress bus_master cap_list rom ethernet physical tp 10bt 10bt-fd 100bt 100bt-fd 1000bt-fd autonegotiation

configuration: autonegotiation=on broadcast=yes driver=igb driverversion=5.0.3-k duplex=full firmware=1.64, 0xe5ff0000 latency=0 link=yes multicast=yes port=twisted pair slave=yes speed=1GB/s

resources: irq:34 memory:fbbe0000-fbbfffff memory:fbbc0000-fbbdffff ioport:dc00(size=32) memory:fbbbc000-fbbbffff memory:fbb80000-fbb9ffff memory:fbb60000-fbb7ffff memory:fbb40000-fbb5ffff

eth0 is on the private network 192.168.86.0/24, while eth1 and eth2 are on the private (management) network 172.16.10.0/24. eth1 and eth2 are bonded together as bond0.

/etc/network/interfaces:

    # eth0 - static
    auto eth0
    iface eth0 inet static
        address 192.168.86.208
        netmask 255.255.255.0
        broadcast 192.168.86.255
        gateway 192.168.86.1

    # bonding ethernet network
    auto bond0
    iface bond0 inet static
        address 172.16.10.208
        netmask 255.255.255.0
        #arp_ip_target 172.16.10.254 172.16.10.2
        #gateway 172.16.10.1
        bond_mode balance-alb
        bond_miimon 100
        bond_downdelay 200
        bond_updelay 200
        slaves eth1 eth2
        #up /sbin/ifenslave bond0 eth1 eth2
        #down /sbin/ifenslave -d bond0 eth1 eth2

$ ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 00:e0:81:c1:e6:4f brd ff:ff:ff:ff:ff:ff

inet 192.168.86.208/24 brd 192.168.86.255 scope global eth0

valid_lft forever preferred_lft forever

inet6 fe80::2e0:81ff:fec1:e64f/64 scope link

valid_lft forever preferred_lft forever

3: eth1: <BROADCAST,MULTICAST,SLAVE,UP,LOWER_UP> mtu 1500 qdisc mq master bond0 state UP qlen 1000

link/ether 00:e0:81:c1:e6:9e brd ff:ff:ff:ff:ff:ff

4: eth2: <BROADCAST,MULTICAST,SLAVE,UP,LOWER_UP> mtu 1500 qdisc mq master bond0 state UP qlen 1000

link/ether 00:e0:81:c1:e6:9f brd ff:ff:ff:ff:ff:ff

5: bond0: <BROADCAST,MULTICAST,MASTER,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP

link/ether 00:e0:81:c1:e6:9e brd ff:ff:ff:ff:ff:ff

inet 172.16.10.208/24 brd 172.16.10.255 scope global bond0

valid_lft forever preferred_lft forever

inet6 fe80::2e0:81ff:fec1:e69e/64 scope link

valid_lft forever preferred_lft forever

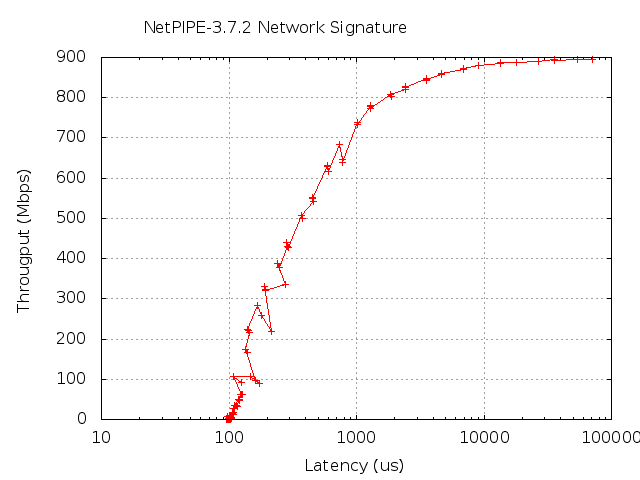

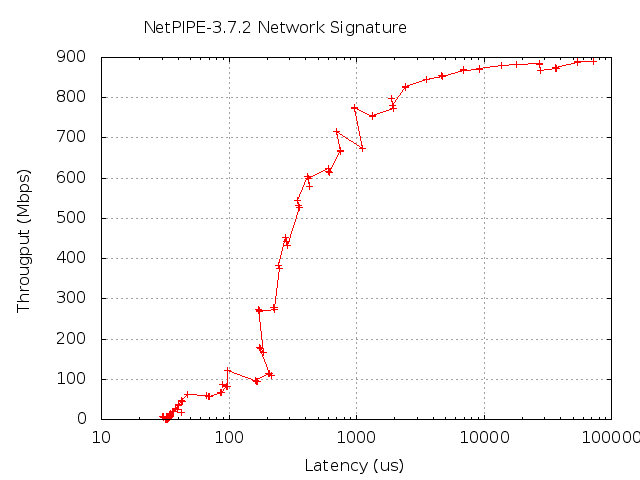

NetPipe TCP Benchmarks

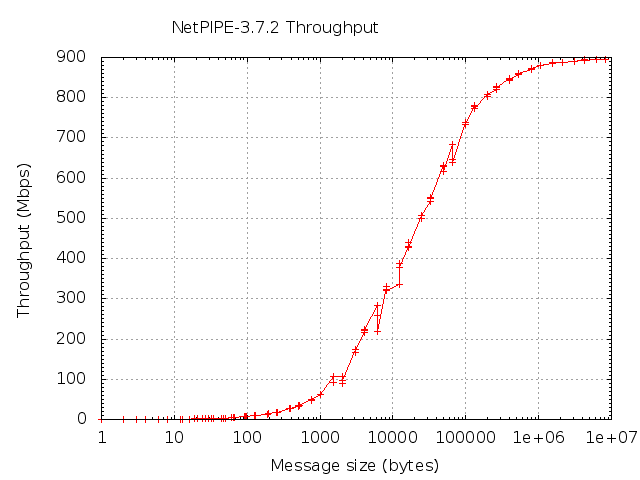

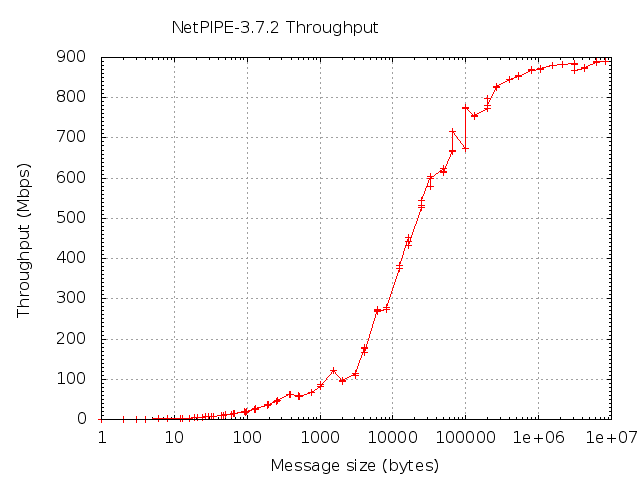

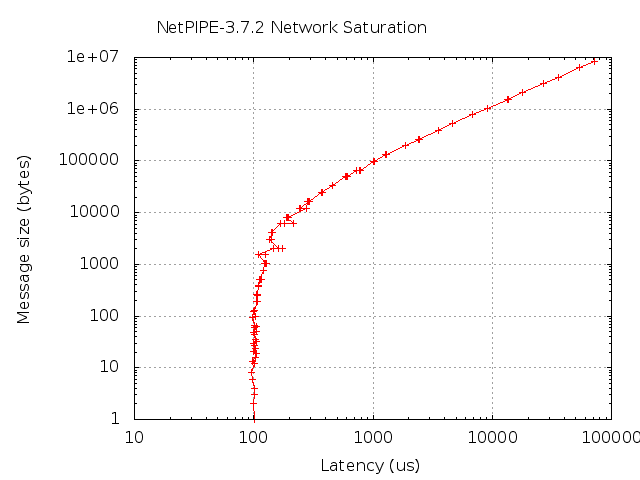

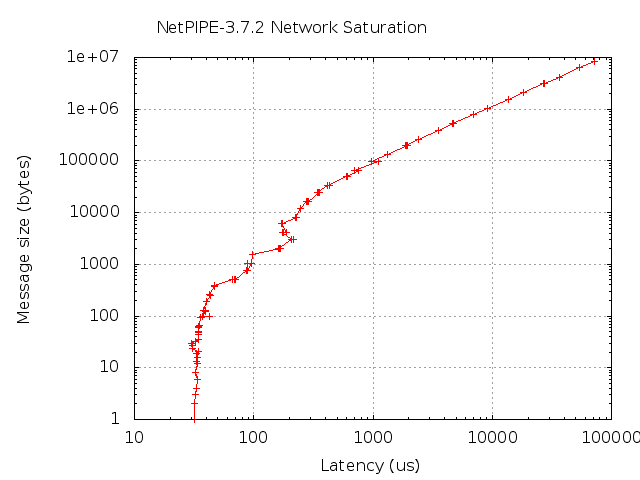

Run NPtcp -i on the receiver (node08). On the sender (node07), run NPtcp -h 192.168.86.208 for eth0 and NPtcp -h 172.16.10.208 for the bond0 interface. Here are the resulting throughput, saturation and signature plots.

The plots on the left are for eth0 and those on the right for bond0.
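
The three plot types described in the following sections are all views of the same per-block measurements. As a rough sketch of how the series could be pulled out of a NetPIPE output file, the Python below assumes a three-column layout (block size in bytes, throughput in Mbps, transfer time in seconds). That layout, the helper names `load_netpipe` and `plot_series`, and the `SAMPLE` rows are assumptions made for illustration; the sample values are invented, not measurements from the arnodes.

```python
def load_netpipe(text):
    """Parse rows of 'bytes  Mbps  seconds' into (int, float, float) tuples.

    The three-column layout is an assumption; check it against your own file.
    """
    rows = []
    for line in text.splitlines():
        fields = line.split()
        if len(fields) < 3:
            continue  # skip blank or incomplete lines
        rows.append((int(fields[0]), float(fields[1]), float(fields[2])))
    return rows


def plot_series(rows):
    """Return the (x, y) pairs behind each of the three plot types."""
    return {
        "throughput": [(n, mbps) for n, mbps, t in rows],  # block size vs. speed
        "saturation": [(n, t) for n, mbps, t in rows],     # block size vs. time
        "signature": [(t, mbps) for n, mbps, t in rows],   # time vs. speed
    }


# Invented placeholder rows, only to show the expected shape of the input.
SAMPLE = """\
1 0.15 0.000051
1024 126.03 0.000065
1048576 930.00 0.009020
"""

series = plot_series(load_netpipe(SAMPLE))
for name, points in series.items():
    print(name, points)
```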

Throughput Plots

Saturation Plots

Block size values versus the transfer/elapsed time.

Plotted on a log-log scale, this graph lets us determine the saturation point: the block size beyond which any further increase in block size just results in a close-to-linear increase in transfer time. The region from the saturation point to the end of the graph is the saturation interval, where throughput cannot be improved by increasing the block size.
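
One way to pin the saturation point down numerically, rather than by eye, is to look at the local slope of the log-log curve: once each doubling of the block size roughly doubles the transfer time, the slope is close to 1. The sketch below only illustrates that idea and is not how the plots here were produced. The `saturation_point` helper, the 0.9 slope threshold, and the synthetic latency and bandwidth figures are all assumptions chosen for the example.

```python
import math


def saturation_point(block_sizes, times, min_slope=0.9):
    """Return the first block size from which the log-log slope of
    time vs. block size stays at or above min_slope, or None."""
    slopes = [
        math.log(times[i + 1] / times[i])
        / math.log(block_sizes[i + 1] / block_sizes[i])
        for i in range(len(times) - 1)
    ]
    start = None
    # Walk back from the largest block while the curve is still near-linear.
    for i in range(len(slopes) - 1, -1, -1):
        if slopes[i] < min_slope:
            break
        start = i
    return None if start is None else block_sizes[start]


# Synthetic data (NOT measured): a fixed latency plus size / bandwidth.
LATENCY_S = 50e-6       # hypothetical 50 microsecond latency
BANDWIDTH_BPS = 117e6   # hypothetical payload rate in bytes per second
sizes = [2 ** k for k in range(24)]
times = [LATENCY_S + n / BANDWIDTH_BPS for n in sizes]

print(saturation_point(sizes, times))
```

A stricter threshold (closer to 1) pushes the reported saturation point towards larger block sizes, so the value is only meaningful together with the threshold used.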

Signature Plots

Transfer speed versus the elapsed time.

This represents network acceleration. When the elapsed time is plotted on a logarithmic scale, one can easily see that the network latency coincides with the time value where the graph takes off.
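
The "take-off" time can also be read off programmatically. As a minimal sketch, the Python below reports the elapsed time at which the transfer speed first reaches a small fraction of its peak. The `takeoff_latency` helper, the 1% fraction, and the synthetic latency and bandwidth values are assumptions for illustration only, not measurements from the arnodes.

```python
def takeoff_latency(times, throughputs, fraction=0.01):
    """Return the smallest elapsed time at which throughput reaches
    `fraction` of the peak throughput, or None if it never does."""
    peak = max(throughputs)
    for t, rate in sorted(zip(times, throughputs)):
        if rate >= fraction * peak:
            return t
    return None


# Synthetic data (NOT measured): a fixed latency plus size / bandwidth.
LATENCY_S = 50e-6       # hypothetical 50 microsecond latency
BANDWIDTH_BPS = 117e6   # hypothetical payload rate in bytes per second
sizes = [2 ** k for k in range(24)]
times = [LATENCY_S + n / BANDWIDTH_BPS for n in sizes]
mbits = [8 * n / t / 1e6 for n, t in zip(sizes, times)]

print(takeoff_latency(times, mbits))
```

With this synthetic model the estimate lands close to the latency that was put in, which is the behaviour the signature plot shows graphically.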

Nttcp benchmarks

Here I show nttcp benchmarks for different sizes of the buffer written to the socket, set with the option -l <size>. Note that I have trimmed the output quite a bit after the first run with the smallest buffer length, 4096 bytes.
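
As a yardstick for the Real-MBit/s figures, the payload ceiling of a single TCP stream over Gigabit Ethernet can be worked out from the per-frame overhead. The sketch below assumes the 1500-byte MTU shown in the ip a output, plain IPv4 and TCP headers, and the 12-byte TCP timestamp option. Whether timestamps were enabled on the arnodes is an assumption, and the `tcp_goodput_mbit` helper is a hypothetical name.

```python
def tcp_goodput_mbit(link_mbit=1000.0, mtu=1500, ip_hdr=20, tcp_hdr=20, tcp_opts=12):
    """Upper bound on TCP payload rate for full-sized frames on Ethernet."""
    payload = mtu - ip_hdr - tcp_hdr - tcp_opts
    # Bytes on the wire per frame: preamble + SFD (8), Ethernet header (14),
    # frame check sequence (4) and inter-frame gap (12) on top of the MTU.
    wire = mtu + 8 + 14 + 4 + 12
    return link_mbit * payload / wire


print(round(tcp_goodput_mbit(), 1))            # with TCP timestamps (assumed)
print(round(tcp_goodput_mbit(tcp_opts=0), 1))  # without TCP options
```

Under these assumptions the ceiling comes to roughly 941 Mbit/s, which is a useful reference when reading the remote-side ("1") rows of the results.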

eth0

$ for i in 4096 8192 16384 65536 262144 524288; do nttcp -D -v -t -T -x 8388608 -l $i 10.0.0.217; done

nttcp-1: buflen=4096, bufcnt=2048, dataport=5038/tcp

nttcp-l: transmitted 8388608 bytes

Bytes Real s CPU s Real-MBit/s CPU-MBit/s Calls Real-C/s CPU-C/s

l 8388608 0.06 0.00 1040.6088 20134.6727 2048 31756.86 614461.4

1 8388608 0.07 0.00 923.5122 20134.6727 2365 32545.72 709571.0

nttcp-1: buflen=8192, bufcnt=1024, dataport=5038/tcp

Bytes Real s CPU s Real-MBit/s CPU-MBit/s Calls Real-C/s CPU-C/s

l 8388608 0.06 0.00 1054.7728 6710886.4000 1024 16094.56 102400000.0

1 8388608 0.07 0.00 925.5629 20134.6727 1237 17060.66 371137.1

nttcp-1: buflen=16384, bufcnt=512, dataport=5038/tcp

Bytes Real s CPU s Real-MBit/s CPU-MBit/s Calls Real-C/s CPU-C/s

l 8388608 0.07 0.00 1011.3763 6710886.4000 512 7716.19 51200000.0

1 8388608 0.07 0.00 918.0419 20134.6727 779 10656.63 233723.4

nttcp-1: buflen=65536, bufcnt=128, dataport=5038/tcp

Bytes Real s CPU s Real-MBit/s CPU-MBit/s Calls Real-C/s CPU-C/s

l 8388608 0.07 0.00 1009.3228 6710886.4000 128 1925.13 12800000.0

1 8388608 0.07 0.00 919.4758 20134.6727 386 5288.69 115811.6

nttcp-1: buflen=262144, bufcnt=32, dataport=5038/tcp

Bytes Real s CPU s Real-MBit/s CPU-MBit/s Calls Real-C/s CPU-C/s

l 8388608 0.07 0.00 973.7353 6710886.4000 32 464.31 3200000.0

1 8388608 0.08 0.00 870.6278 20134.6727 307 3982.82 92109.2

nttcp-1: buflen=524288, bufcnt=16, dataport=5038/tcp

Bytes Real s CPU s Real-MBit/s CPU-MBit/s Calls Real-C/s CPU-C/s

l 8388608 0.07 0.00 1015.3702 6710886.4000 16 242.08 1600000.0

1 8388608 0.07 0.01 901.4193 5033.2906 445 5977.33 33375.8

bond0

$ for i in 4096 8192 16384 65536 262144 524288; do nttcp -D -v -t -T -x 8388608 -l $i 172.16.10.208; done

nttcp-1: buflen=4096, bufcnt=2048, dataport=5038/tcp

Bytes Real s CPU s Real-MBit/s CPU-MBit/s Calls Real-C/s CPU-C/s

l 8388608 0.07 0.00 966.8611 20134.6727 2048 29506.26 614461.4

1 8388608 0.08 0.00 886.8857 20134.6727 2233 29510.49 669967.0

nttcp-1: buflen=8192, bufcnt=1024, dataport=5038/tcp

Bytes Real s CPU s Real-MBit/s CPU-MBit/s Calls Real-C/s CPU-C/s

l 8388608 0.07 0.00 949.4477 20134.6727 1024 14487.42 307230.7

1 8388608 0.08 0.00 884.7809 20134.6727 1184 15610.17 355235.5

nttcp-1: buflen=16384, bufcnt=512, dataport=5038/tcp

Bytes Real s CPU s Real-MBit/s CPU-MBit/s Calls Real-C/s CPU-C/s

l 8388608 0.07 0.00 985.3157 6710886.4000 512 7517.36 51200000.0

1 8388608 0.07 0.00 895.5132 20134.6727 760 10141.58 228022.8

nttcp-1: buflen=65536, bufcnt=128, dataport=5038/tcp

Bytes Real s CPU s Real-MBit/s CPU-MBit/s Calls Real-C/s CPU-C/s

l 8388608 0.07 0.00 943.8393 6710886.4000 128 1800.23 12800000.0

1 8388608 0.08 0.00 881.1910 6710886.4000 385 5055.35 38500000.0

nttcp-1: buflen=262144, bufcnt=32, dataport=5038/tcp

Bytes Real s CPU s Real-MBit/s CPU-MBit/s Calls Real-C/s CPU-C/s

l 8388608 0.07 0.00 970.6369 6710886.4000 32 462.84 3200000.0

1 8388608 0.08 0.00 885.8204 6710886.4000 394 5200.70 39400000.0

nttcp-1: buflen=524288, bufcnt=16, dataport=5038/tcp

Bytes Real s CPU s Real-MBit/s CPU-MBit/s Calls Real-C/s CPU-C/s

l 8388608 0.07 0.00 984.5784 6710886.4000 16 234.74 1600000.0

1 8388608 0.08 0.01 882.9184 6711.5576 396 5209.98 39604.0
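
nttcp prints its result columns with fixed widths, so when the measured CPU time rounds to zero, the very large CPU-MBit/s and CPU-C/s figures can run straight into the preceding column in the raw output. For anyone post-processing raw nttcp logs, the sketch below splits such rows by relying on the number of decimal places in each column. Those decimal counts are inferred from the result lines in this document, and the `parse_nttcp_row` helper and its field names are hypothetical.

```python
import re

# Decimal places per column, inferred from the result rows shown here:
# Real s and CPU s: 2, Real-MBit/s and CPU-MBit/s: 4, Real-C/s: 2, CPU-C/s: 1.
# \s* (rather than \s+) between two columns tolerates values that have
# run together with no separating space.
ROW = re.compile(
    r"^(?P<side>\S)\s+(?P<bytes>\d+)\s+"
    r"(?P<real_s>\d+\.\d{2})\s+(?P<cpu_s>\d+\.\d{2})\s+"
    r"(?P<real_mbit>\d+\.\d{4})\s*(?P<cpu_mbit>\d+\.\d{4})\s+"
    r"(?P<calls>\d+)\s+"
    r"(?P<real_cps>\d+\.\d{2})\s*(?P<cpu_cps>\d+\.\d)$"
)


def parse_nttcp_row(line):
    """Return a dict of typed fields for one result row, or None."""
    match = ROW.match(line.strip())
    if match is None:
        return None
    row = {}
    for key, value in match.groupdict().items():
        if key == "side":
            row[key] = value              # 'l' (local) or '1' (remote)
        elif key in ("bytes", "calls"):
            row[key] = int(value)
        else:
            row[key] = float(value)
    return row


fused = "l 8388608 0.06 0.00 1054.77286710886.4000 1024 16094.56102400000.0"
print(parse_nttcp_row(fused))
```

Header lines and the verbose buflen lines do not match the pattern and come back as None, so a whole log can be fed through line by line.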