Isilon Stuff and Other Things

This is a disclaimer: using the notes below is dangerous for both your sanity and peace of mind. If you still want to read them, beware of the fact that they may be "not even wrong". Everything I write in here is just a mnemonic device to give me a chance to fix things I badly broke because I'm bloody stupid and think I can tinker with stuff that is way above my head and get away with it. It reminds me of Gandalf's warning: "Perilous to all of us are the devices of an art deeper than we ourselves possess." Moreover, a lot of it I blatantly stole on the net from other, obviously cleverer, persons than me (not very hard). Forgive me. My bad. Please consider it and go away. You have been warned!


EMC Support

- Support is at https://support.emc.com

- One must create a profile with 2 roles, one as Authorized Contact and another as Dial Home, Primary Contact.

- Site ID is:

Site ID: 1003902358
Created On: 05/13/2016 12:36 PM
Site Name: MCGILL UNIVERSITY
Address 1: 3801 UNIVERSITY ST
Address 2: ROOM WB212
City: MONTREAL
State:
Country: CA
Postal Code: H3A 2B4

About This Cluster

This is from the web interface, [Help] → [About This Cluster]

About This Cluster

OneFS Upgrade

Isilon OneFS v8.0.0.4 B_MR_8_0_0_4_053(RELEASE) installed on all nodes.

Packages and Updates

No packages or updates are installed.

Cluster Information

GUID: 000e1ea7eec05c211157780e00f5f0ce64c1

Cluster Hardware

Node Model Configuration Serial Number

Node 1 Isilon X410-4U-Dual-64GB-2x1GE-2x10GE SFP+-144TB 400-0049-03 SX410-301608-0260

Node 2 Isilon X410-4U-Dual-64GB-2x1GE-2x10GE SFP+-144TB 400-0049-03 SX410-301608-0255

Node 4 Isilon X410-4U-Dual-64GB-2x1GE-2x10GE SFP+-144TB 400-0049-03 SX410-301608-0264

Node 3 Isilon X410-4U-Dual-64GB-2x1GE-2x10GE SFP+-144TB 400-0049-03 SX410-301608-0254

Node 5 Isilon X410-4U-Dual-64GB-2x1GE-2x10GE SFP+-144TB 400-0049-03 SX410-301608-0248

Cluster Firmware

Device Type Firmware Nodes

BMC_S2600CP BMC 1.25.9722 1-5

CFFPS1 CFFPS 03.03 1-5

CFFPS2 CFFPS 03.03 1-5

CMCSDR_Honeybadger CMCSDR 00.0B 1-5

CMC_HFHB CMC 02.05 1-5

IsilonFPV1 FrontPnl UI.01.36 1-5

LOx2-MLC-YD Nvram rp180c01+rp180c01 1-5

Lsi DiskCtrl 20.00.04.00 1-5

LsiExp0 DiskExp 0910+0210 1-5

LsiExp1 DiskExp 0910+0210 1-5

Mellanox Network 2.30.8020+ISL1090110018 1-5

QLogic-NX2 10GigE 7.6.55 1-5


Logical Node Numbers (LNN), Device IDs, Serial Numbers and Firmwares

- Use isi_for_array <command> to loop over the nodes and run <command> on each of them.

BIC-Isilon-Cluster-4# isi_for_array isi_hw_status -i
BIC-Isilon-Cluster-4: SerNo: SX410-301608-0264
BIC-Isilon-Cluster-4: Config: 400-0049-03
BIC-Isilon-Cluster-4: FamCode: X
BIC-Isilon-Cluster-4: ChsCode: 4U
BIC-Isilon-Cluster-4: GenCode: 10
BIC-Isilon-Cluster-4: Product: X410-4U-Dual-64GB-2x1GE-2x10GE SFP+-144TB
BIC-Isilon-Cluster-1: SerNo: SX410-301608-0260
BIC-Isilon-Cluster-1: Config: 400-0049-03
BIC-Isilon-Cluster-1: FamCode: X
BIC-Isilon-Cluster-1: ChsCode: 4U
BIC-Isilon-Cluster-1: GenCode: 10
BIC-Isilon-Cluster-1: Product: X410-4U-Dual-64GB-2x1GE-2x10GE SFP+-144TB
BIC-Isilon-Cluster-2: SerNo: SX410-301608-0255
BIC-Isilon-Cluster-2: Config: 400-0049-03
BIC-Isilon-Cluster-2: FamCode: X
BIC-Isilon-Cluster-2: ChsCode: 4U
BIC-Isilon-Cluster-2: GenCode: 10
BIC-Isilon-Cluster-2: Product: X410-4U-Dual-64GB-2x1GE-2x10GE SFP+-144TB
BIC-Isilon-Cluster-3: SerNo: SX410-301608-0254
BIC-Isilon-Cluster-3: Config: 400-0049-03
BIC-Isilon-Cluster-3: FamCode: X
BIC-Isilon-Cluster-3: ChsCode: 4U
BIC-Isilon-Cluster-3: GenCode: 10
BIC-Isilon-Cluster-3: Product: X410-4U-Dual-64GB-2x1GE-2x10GE SFP+-144TB
BIC-Isilon-Cluster-5: SerNo: SX410-301608-0248
BIC-Isilon-Cluster-5: Config: 400-0049-03
BIC-Isilon-Cluster-5: FamCode: X
BIC-Isilon-Cluster-5: ChsCode: 4U
BIC-Isilon-Cluster-5: GenCode: 10
BIC-Isilon-Cluster-5: Product: X410-4U-Dual-64GB-2x1GE-2x10GE SFP+-144TB
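The isi_for_array output interleaves the lines of all nodes, each prefixed with the node name, and the nodes do not answer in order. A minimal Python sketch, assuming the output was captured as text (e.g. redirected to a file and read back), that regroups the "Key: value" pairs per node so serial numbers can be compared side by side. The function name is hypothetical, not an Isilon tool:

```python
from collections import defaultdict


def group_by_node(output: str) -> dict[str, dict[str, str]]:
    """Regroup 'node: key: value' lines from isi_for_array into {node: {key: value}}."""
    nodes: dict[str, dict[str, str]] = defaultdict(dict)
    for line in output.splitlines():
        parts = line.split(": ", 2)  # node name, key, value (the value may contain spaces)
        if len(parts) != 3:
            continue  # skip blank or unexpected lines
        node, key, value = (p.strip() for p in parts)
        nodes[node][key] = value
    return dict(nodes)


sample = """\
BIC-Isilon-Cluster-4: SerNo: SX410-301608-0264
BIC-Isilon-Cluster-4: Product: X410-4U-Dual-64GB-2x1GE-2x10GE SFP+-144TB
BIC-Isilon-Cluster-1: SerNo: SX410-301608-0260
"""
info = group_by_node(sample)
for node in sorted(info):
    print(node, info[node]["SerNo"])
```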

isi_nodes can extract formatted strings like:

BIC-Isilon-Cluster-3# isi_nodes %{id} %{lnn} %{name} %{serialno}

1 1 BIC-Isilon-Cluster-1 SX410-301608-0260

2 2 BIC-Isilon-Cluster-2 SX410-301608-0255

4 3 BIC-Isilon-Cluster-3 SX410-301608-0254

3 4 BIC-Isilon-Cluster-4 SX410-301608-0264

6 5 BIC-Isilon-Cluster-5 SX410-301608-0248
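Since isi_nodes prints whichever fields are requested, the Device ID vs LNN mismatch can be spotted mechanically. A small Python sketch, assuming the output of isi_nodes %{id} %{lnn} %{name} %{serialno} shown above was pasted into a string; the helper name is hypothetical:

```python
def id_lnn_mismatches(output: str) -> list[tuple[int, int, str]]:
    """Return (device_id, lnn, name) for every node whose Device ID differs from its LNN."""
    mismatches = []
    for line in output.splitlines():
        fields = line.split()
        if len(fields) < 3:
            continue  # skip blank lines
        devid, lnn, name = int(fields[0]), int(fields[1]), fields[2]
        if devid != lnn:
            mismatches.append((devid, lnn, name))
    return mismatches


nodes = """\
1 1 BIC-Isilon-Cluster-1 SX410-301608-0260
2 2 BIC-Isilon-Cluster-2 SX410-301608-0255
4 3 BIC-Isilon-Cluster-3 SX410-301608-0254
3 4 BIC-Isilon-Cluster-4 SX410-301608-0264
6 5 BIC-Isilon-Cluster-5 SX410-301608-0248
"""
for devid, lnn, name in id_lnn_mismatches(nodes):
    print(f"{name}: LNN {lnn} has Device ID {devid}")
```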

- Why is there an %{id} equal to 6?
- 20160923: I think I can now answer this question.

- It might be the result of the initial configuration of the cluster back in April 2016.

- The guy who did it (J Laganiere from Gallium-it.com) had a few problems with nodes not responding.

- The nodes are labeled from top to bottom as 1 (highest in the rack) to 5 (lowest in the rack).

- They should have been labeled as their physical order in the rack, 1/bottom to 5/top.

- As to why the LNNs don't match the Device IDs: Device IDs are incremented as nodes are failed and added, so a node that is removed and added back gets a new ID.

- I smartfailed one node once, which explains the ID 6.

- This is extremely annoying as the allocation of IPs is also affected.

- The last octets of the IP addresses in the pools prod and node don't match for the same LNN.

BIC-Isilon-Cluster >>> lnnset

LNN Device ID Cluster IP

----------------------------------------

1 1 10.0.3.1

2 2 10.0.3.2

3 4 10.0.3.4

4 3 10.0.3.3

5 6 10.0.3.5

BIC-Isilon-Cluster-2# isi_nodes %{lnn} %{devid} %{external} %{dynamic}

1 1 172.16.10.20 132.206.178.237,172.16.20.237

2 2 172.16.10.21 132.206.178.236,172.16.20.236

3 4 172.16.10.22 132.206.178.233,172.16.20.234

4 3 172.16.10.23 132.206.178.234,172.16.20.235

5 6 172.16.10.24 132.206.178.235,172.16.20.233
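The octet mismatch between the prod and node pools can be checked the same way. A Python sketch, assuming the isi_nodes %{lnn} %{devid} %{external} %{dynamic} output above, and assuming (as it happens on this cluster) that the dynamic column lists the prod address first and the node-pool address second. The helper name is hypothetical:

```python
def octet_mismatches(output: str) -> dict[int, tuple[str, str]]:
    """Map LNN -> (prod last octet, node last octet) where the two differ."""
    result = {}
    for line in output.splitlines():
        fields = line.split()
        if len(fields) != 4:
            continue  # skip blank or unexpected lines
        lnn = int(fields[0])
        # Assumption: first dynamic IP is the prod pool, second is the node pool.
        prod_ip, node_ip = fields[3].split(",")
        prod_octet = prod_ip.rsplit(".", 1)[1]
        node_octet = node_ip.rsplit(".", 1)[1]
        if prod_octet != node_octet:
            result[lnn] = (prod_octet, node_octet)
    return result


pools = """\
1 1 172.16.10.20 132.206.178.237,172.16.20.237
2 2 172.16.10.21 132.206.178.236,172.16.20.236
3 4 172.16.10.22 132.206.178.233,172.16.20.234
4 3 172.16.10.23 132.206.178.234,172.16.20.235
5 6 172.16.10.24 132.206.178.235,172.16.20.233
"""
for lnn, (prod, node) in octet_mismatches(pools).items():
    print(f"LNN {lnn}: prod .{prod} vs node .{node}")
```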

BIC-Isilon-Cluster-2# isi network interfaces ls

LNN Name Status Owners IP Addresses

--------------------------------------------------------------------

1 10gige-1 Up - -

1 10gige-2 Up - -

1 10gige-agg-1 Up groupnet0.prod.pool0 132.206.178.237

groupnet0.node.pool1 172.16.20.237

1 ext-1 Up groupnet0.mgmt.mgmt 172.16.10.20

1 ext-2 No Carrier - -

1 ext-agg Not Available - -

2 10gige-1 Up - -

2 10gige-2 Up - -

2 10gige-agg-1 Up groupnet0.prod.pool0 132.206.178.236

groupnet0.node.pool1 172.16.20.236

2 ext-1 Up groupnet0.mgmt.mgmt 172.16.10.21

2 ext-2 No Carrier - -

2 ext-agg Not Available - -

3 10gige-1 Up - -

3 10gige-2 Up - -

3 10gige-agg-1 Up groupnet0.prod.pool0 132.206.178.233

groupnet0.node.pool1 172.16.20.234

3 ext-1 Up groupnet0.mgmt.mgmt 172.16.10.22

3 ext-2 No Carrier - -

3 ext-agg Not Available - -

4 10gige-1 Up - -

4 10gige-2 Up - -

4 10gige-agg-1 Up groupnet0.prod.pool0 132.206.178.234

groupnet0.node.pool1 172.16.20.235

4 ext-1 Up groupnet0.mgmt.mgmt 172.16.10.23

4 ext-2 No Carrier - -

4 ext-agg Not Available - -

5 10gige-1 Up - -

5 10gige-2 Up - -

5 10gige-agg-1 Up groupnet0.prod.pool0 132.206.178.235

groupnet0.node.pool1 172.16.20.233

5 ext-1 Up groupnet0.mgmt.mgmt 172.16.10.24

5 ext-2 No Carrier - -

5 ext-agg Not Available - -

--------------------------------------------------------------------

Total: 30

- This lists, cluster-wide, the firmware of each device type and the nodes (Lnns) reporting it:

BIC-Isilon-Cluster-1# isi upgrade cluster firmware devices
Device Type Firmware Mismatch Lnns
---------------------------------------------------------------------
CFFPS1_Blastoff CFFPS 03.03 - 1-5
CFFPS2_Blastoff CFFPS 03.03 - 1-5
CMC_HFHB CMC 01.02 - 1-5
CMCSDR_Honeybadger CMCSDR 00.0B - 1-5
Lsi DiskCtrl 17.00.01.00 - 1-5
LsiExp0 DiskExp 0910+0210 - 1-5
LsiExp1 DiskExp 0910+0210 - 1-5
IsilonFPV1 FrontPnl UI.01.36 - 1-2,4-5
Mellanox Network 2.30.8020+ISL1090110018 - 1-5
LOx2-MLC-YD Nvram rp180c01+rp180c01 - 1-5
---------------------------------------------------------------------
Total: 10
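In the listing above, the IsilonFPV1 (front panel) row reports Lnns 1-2,4-5, so node 3 is not listed for that device. A Python sketch that expands such LNN range strings and reports the absent nodes; the set of LNNs 1 to 5 is an assumption matching this 5-node cluster, and the helper name is hypothetical:

```python
def expand_lnns(spec: str) -> set[int]:
    """Expand an LNN list such as '1-2,4-5' into {1, 2, 4, 5}."""
    lnns: set[int] = set()
    for part in spec.split(","):
        if "-" in part:
            start, end = part.split("-")
            lnns.update(range(int(start), int(end) + 1))
        else:
            lnns.add(int(part))
    return lnns


ALL_LNNS = set(range(1, 6))  # assumption: LNNs 1-5, as in this cluster

missing = sorted(ALL_LNNS - expand_lnns("1-2,4-5"))
print(f"IsilonFPV1 not reported on LNN(s): {missing}")
```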

Licenses

- The licenses and their status (only those marked Activated are active):

BIC-Isilon-Cluster-3# isi license licenses ls
Name Status Expiration
----------------------------------------------------
SmartDedupe Inactive -
Swift Activated -
SmartQuotas Activated -
InsightIQ Activated -
SmartPools Inactive -
SmartLock Inactive -
Isilon for vCenter Inactive -
CloudPools Inactive -
Hardening Inactive -
SnapshotIQ Activated -
HDFS Inactive -
SyncIQ Inactive -
SmartConnect Advanced Activated -
----------------------------------------------------
Total: 13
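License names can contain spaces ("Isilon for vCenter", "SmartConnect Advanced"), so splitting each row from the right is safer than splitting on whitespace. A Python sketch, assuming the listing above was captured as text, that keeps only the activated licenses; the helper name is hypothetical:

```python
def activated_licenses(output: str) -> list[str]:
    """Return the names of licenses whose status is 'Activated'."""
    names = []
    for line in output.splitlines():
        # The last two columns are Status and Expiration; everything before is the name.
        parts = line.rsplit(None, 2)
        if len(parts) == 3 and parts[1] == "Activated":
            names.append(parts[0])
    return names


listing = """\
Name Status Expiration
----------------------------------------------------
SmartDedupe Inactive -
Swift Activated -
SmartQuotas Activated -
InsightIQ Activated -
Isilon for vCenter Inactive -
SnapshotIQ Activated -
SmartConnect Advanced Activated -
----------------------------------------------------
Total: 13
"""
print(activated_licenses(listing))
```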

Alerts and Events

- Modify event retention period from 90 days (default) to 360:

BIC-Isilon-Cluster-1# isi event settings view

Retention Days: 90

Storage Limit: 1

Maintenance Start: Never

Maintenance Duration: Never

Heartbeat Interval: daily

BIC-Isilon-Cluster-1# isi event settings modify --retention-days 360

BIC-Isilon-Cluster-1# isi event settings view

Retention Days: 360

Storage Limit: 1

Maintenance Start: Never

Maintenance Duration: Never

Heartbeat Interval: daily

- The syntax to modify event settings:

isi event settings modify [--retention-days <integer>] [--storage-limit <integer>] [--maintenance-start <timestamp>] [--clear-maintenance-start] [--maintenance-duration <duration>] [--heartbeat-interval

- Every event has two ID numbers that help to establish the context of the event.

- The event type ID identifies the type of event that has occurred.

- The event instance ID is a unique number that is specific to a particular occurrence of an event type.

- When an event is submitted to the kernel queue, an event instance ID is assigned.

- You can reference the instance ID to determine the exact time that an event occurred.

- You can view individual events. However, you manage events and alerts at the event group level.

BIC-Isilon-Cluster-3# isi event events list

ID Occurred Sev Lnn Eventgroup ID Message

--------------------------------------------------------------------------------------------------------

1.426 04/19 13:28 U 0 1 Resolved from PAPI

3.309 04/19 11:11 C 4 1 External network link ext-1 (igb0) down

2.530 04/20 00:00 I 2 131 Heartbeat Event

3.545 04/27 22:09 C 4 131101 Disk Repair Complete: Bay 2, Type HDD, LNUM 34. Replace the drive according to the instructions in the OneFS Help system.

1.664 05/05 12:05 U 0 131124 Resolved from PAPI

3.563 05/03 23:56 C 4 131124 One or more drives (bay(s) 2 / type(s) HDD) are ready to be replaced.

3.551 04/27 22:19 C 4 131124 One or more drives (bay(s) 2 / type(s) HDD) are ready to be replaced.
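Since alerts are managed per event group, it helps to see which instances roll up into which group: here group 131124 holds three events. A Python sketch, assuming the isi event events list output was captured as text. As an observation from this cluster only, the part of the instance ID before the dot seems to match the node's Device ID (3.545 is on Devid 3, LNN 4, per isi event events view). The helper name is hypothetical:

```python
from collections import defaultdict


def events_by_group(output: str) -> dict[str, list[str]]:
    """Map event group ID -> list of event instance IDs from 'isi event events list'."""
    groups: dict[str, list[str]] = defaultdict(list)
    for line in output.splitlines():
        fields = line.split(None, 6)  # ID, date, time, Sev, Lnn, Eventgroup ID, Message
        if len(fields) < 7 or "." not in fields[0]:
            continue  # skip header, separator and blank lines
        groups[fields[5]].append(fields[0])
    return dict(groups)


events = """\
ID Occurred Sev Lnn Eventgroup ID Message
--------------------------------------------------------------------------------
1.426 04/19 13:28 U 0 1 Resolved from PAPI
3.309 04/19 11:11 C 4 1 External network link ext-1 (igb0) down
2.530 04/20 00:00 I 2 131 Heartbeat Event
3.545 04/27 22:09 C 4 131101 Disk Repair Complete: Bay 2, Type HDD, LNUM 34.
1.664 05/05 12:05 U 0 131124 Resolved from PAPI
3.563 05/03 23:56 C 4 131124 One or more drives (bay(s) 2 / type(s) HDD) are ready to be replaced.
3.551 04/27 22:19 C 4 131124 One or more drives (bay(s) 2 / type(s) HDD) are ready to be replaced.
"""
for group, ids in sorted(events_by_group(events).items(), key=lambda kv: int(kv[0])):
    print(group, ids)
```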

BIC-Isilon-Cluster-3# isi event events view 3.545

ID: 3.545

Eventgroup ID: 131101

Event Type: 100010010

Message: Disk Repair Complete: Bay 2, Type HDD, LNUM 34. Replace the drive according to the instructions in the OneFS Help system.

Devid: 3

Lnn: 4

Time: 2016-04-27T22:09:12

Severity: critical

Value: 0.0

BIC-Isilon-Cluster-3# isi event groups list

ID Started Ended Causes Short Events Severity

---------------------------------------------------------------------------------

3 04/19 11:10 04/19 13:28 external_network 2 critical

2 04/19 11:10 04/19 13:28 external_network 2 critical

4 04/19 11:10 04/19 11:16 NODE_STATUS_OFFLINE 2 critical

1 04/19 11:11 04/19 13:28 external_network 2 critical

24 04/19 11:16 04/19 11:16 NODE_STATUS_ONLINE 1 information

26 04/19 11:23 04/19 13:28 external_network 2 critical

27 04/19 11:31 04/19 13:28 WINNET_AUTH_NIS_SERVERS_UNREACH 6 critical

32 04/19 12:43 04/19 12:44 HW_IPMI_POWER_SUPPLY_STATUS_REG 4 critical

...

524525 05/30 02:18 05/30 02:18 SYS_DISK_REMOVED 1 critical

524538 05/30 02:19 -- SYS_DISK_UNHEALTHY 3 critical

...

BIC-Isilon-Cluster-3# isi event groups view 524525

ID: 524525

Started: 05/30 02:18

Causes Long: Disk Repair Complete: Bay 18, Type HDD, LNUM 27. Replace the drive according to the instructions in the OneFS Help system.

Last Event: 2016-05-30T02:18:16

Ignore: No

Ignore Time: Never

Resolved: Yes

Ended: 05/30 02:18

Events: 1

Severity: critical

BIC-Isilon-Cluster-3# isi event groups view 524538

ID: 524538

Started: 05/30 02:19

Causes Long: One or more drives (bay(s) 18 / type(s) HDD) are ready to be replaced.

Last Event: 2016-06-04T12:42:09

Ignore: No

Ignore Time: Never

Resolved: No

Ended: --

Events: 3

Severity: critical
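In isi event groups list, a group that is still active shows -- in the Ended column (524538 above, whose view reports Resolved: No). A Python sketch, assuming the groups list was captured as text and that the Causes Short value is a single token as in this output; the helper name is hypothetical:

```python
def open_groups(output: str) -> list[tuple[str, str]]:
    """Return (group ID, cause) for event groups whose Ended column is '--'."""
    still_open = []
    for line in output.splitlines():
        fields = line.split()
        if len(fields) < 5 or not fields[0].isdigit():
            continue  # header, separators and '...' elisions
        # Fields: ID, started date, started time, then either '--' or ended date + time.
        if fields[3] == "--":
            still_open.append((fields[0], fields[4]))
    return still_open


groups = """\
ID Started Ended Causes Short Events Severity
---------------------------------------------------------------------------------
3 04/19 11:10 04/19 13:28 external_network 2 critical
24 04/19 11:16 04/19 11:16 NODE_STATUS_ONLINE 1 information
...
524525 05/30 02:18 05/30 02:18 SYS_DISK_REMOVED 1 critical
524538 05/30 02:19 -- SYS_DISK_UNHEALTHY 3 critical
"""
print(open_groups(groups))
```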

Scheduling A Maintenance Window

- You can schedule a maintenance window by setting a maintenance start time and duration.

- During a scheduled maintenance window, the system will continue to log events, but no alerts will be generated.

- Scheduling a maintenance window will keep channels from being flooded by benign alerts associated with cluster maintenance procedures.

- Active event groups will automatically resume generating alerts when the scheduled maintenance period ends.

- You can schedule a maintenance window to discontinue alerts while you are performing maintenance on your cluster.

- Schedule a maintenance window by running the isi event settings modify command.
- The following example command schedules a maintenance window that begins on September 1, 2015 at 11:00 pm and lasts for two days:

isi event settings modify --maintenance-start 2015-09-01T23:00:00 --maintenance-duration 2D
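With a 2D (two days) duration, the example window closes on September 3, 2015 at 11:00 pm. A Python sketch of that arithmetic; the duration parsing is an assumption that only handles the D (days) and H (hours) suffixes, so check the OneFS CLI reference for the full <duration> syntax. The helper is hypothetical:

```python
from datetime import datetime, timedelta

# Assumption: only 'D' (days) and 'H' (hours) are handled; any other suffix raises KeyError.
UNITS = {"D": "days", "H": "hours"}


def maintenance_end(start: str, duration: str) -> datetime:
    """Compute when a maintenance window starting at `start` and lasting `duration` ends."""
    begin = datetime.fromisoformat(start)
    amount, unit = int(duration[:-1]), duration[-1]
    return begin + timedelta(**{UNITS[unit]: amount})


end = maintenance_end("2015-09-01T23:00:00", "2D")
print(end.isoformat())
```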

Hardware, Devices and Nodes

Storage Pool Protection Level

- The default and suggested protection level for a cluster smaller than 2 PB is +2d:1n.
- A +2d:1n protection level implies that the cluster can recover from two simultaneous drive failures or one node failure without sustaining any data loss.
- The parity overhead is 20% for a 5-node cluster with a +2d:1n protection level.

BIC-Isilon-Cluster-4# isi storagepool list
Name Nodes Requested Protection HDD Total % SSD Total %
--------------------------------------------------------------------------------------
x410_144tb_64gb 1-5 +2d:1n 1.1190T 641.6275T 0.17% 0 0 0.00%
--------------------------------------------------------------------------------------
Total: 1 1.1190T 641.6275T 0.17% 0 0 0.00%
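Where the 20% plausibly comes from: with +2d:1n, each node holds up to two stripe units of a protection group and two units of the stripe are FEC, so a 5-node cluster would get an 8+2 layout and 2/10 = 20% overhead. A Python sketch of that arithmetic; the two-units-per-node layout and the cap of 16 data units per stripe are assumptions to check against the OneFS protection documentation, and the helper is hypothetical:

```python
def overhead_2d1n(nodes: int, max_data_units: int = 16) -> float:
    """Approximate FEC overhead of +2d:1n as a fraction of the stripe."""
    # Assumption: the stripe spans 2 units per node, 2 of them FEC,
    # with the data part capped at max_data_units (16 is an assumed default).
    data_units = min(2 * nodes - 2, max_data_units)
    return 2 / (data_units + 2)


print(f"{overhead_2d1n(5):.0%}")
```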

Hardware status on a specific node:

BIC-Isilon-Cluster-4# isi_hw_status

SerNo: SX410-301608-0264

Config: 400-0049-03

FamCode: X

ChsCode: 4U

GenCode: 10

Product: X410-4U-Dual-64GB-2x1GE-2x10GE SFP+-144TB

HWGen: CTO (CTO Hardware)

Chassis: ISI36V3 (Isilon 36-Bay(V3) Chassis)

CPU: GenuineIntel (2.00GHz, stepping 0x000306e4)

PROC: Dual-proc, Octa-HT-core

RAM: 68602642432 Bytes

Mobo: IntelS2600CP (Intel S2600CP Motherboard)

NVRam: LX4381 (Isilon LOx NVRam Card) (2016MB card) (size 2113798144B)

FlshDrv: None (No physical dongle supported) ((null))

DskCtl: LSI2308SAS2 (LSI 2308 SAS Controller) (8 ports)

DskExp: LSISAS2X24_X2 (LSI SAS2x24 SAS Expander (Qty 2))

PwrSupl: PS1 (type=ACBEL POLYTECH , fw=03.03)

PwrSupl: PS2 (type=ACBEL POLYTECH , fw=03.03)

ChasCnt: 1 (Single-Chassis System)

NetIF: ib1,ib0,igb0,igb1,bxe0,bxe1

IBType: MT4099 QDR (Mellanox MT4099 IB QDR Card)

LCDver: IsiVFD1 (Isilon VFD V1)

IMB: Board Version 0xffffffff

Power Supplies OK

Power Supply 1 good

Power Supply 2 good

CPU Operation (raw 0x88390000) = Normal

CPU Speed Limit = 100.00%

FAN TAC SENSOR 1 = 8800.000

FAN TAC SENSOR 2 = 8800.000

FAN TAC SENSOR 3 = 8800.000

PS FAN SPEED 1 = 9600.000

PS FAN SPEED 2 = 9500.000

BB +12.0V = 11.935

BB +5.0V = 4.937

BB +3.3V = 3.268

BB +5.0V STBY = 4.894

BB +3.3V AUX = 3.268

BB +1.05V P1Vccp = 0.828

BB +1.05V P2Vccp = 0.822

BB +1.5 P1DDR AB = na

BB +1.5 P1DDR CD = na

BB +1.5 P2DDR AB = na

BB +1.5 P2DDR CD = na

BB +1.8V AUX = 1.769

BB +1.1V STBY = 1.081

BB VBAT = 3.120

BB +1.35 P1LV AB = 1.342

BB +1.35 P1LV CD = 1.348

BB +1.35 P2LV AB = 1.378

BB +1.35 P2LV CD = 1.348

VCC_12V0 = 12.100

VCC_5V0 = 5.000

VCC_3V3 = 3.300

VCC_1V8 = 1.800

VCC_5V0_SB = 4.900

VCC_1V0 = 0.990

VCC_5V0_CBL = 5.000

VCC_SW = 4.900

VBATT_1 = 4.000

VBATT_2 = 4.000

PS IN VOLT 1 = 241.000

PS IN VOLT 2 = 241.000

PS OUT VOLT 1 = 12.300

PS OUT VOLT 2 = 12.300

PS IN CURR 1 = 1.200

PS IN CURR 2 = 1.200

PS OUT CURR 1 = 19.000

PS OUT CURR 2 = 19.500

Front Panel Temp = 20.6

BB EDGE Temp = 25.000

BB BMC Temp = 34.000

BB P2 VR Temp = 30.000

BB MEM VR Temp = 28.000

LAN NIC Temp = 42.000

P1 Therm Margin = -56.000

P2 Therm Margin = -58.000

P1 DTS Therm Mgn = -56.000

P2 DTS Therm Mgn = -58.000

DIMM Thrm Mrgn 1 = -66.000

DIMM Thrm Mrgn 2 = -68.000

DIMM Thrm Mrgn 3 = -67.000

DIMM Thrm Mrgn 4 = -66.000

TEMP SENSOR 1 = 23.000

PS TEMP 1 = 28.000

PS TEMP 2 = 28.000

List the devices on node 5 (by logical node number):

BIC-Isilon-Cluster-1# isi devices list --node-lnn 5
Lnn Location Device Lnum State Serial
-----------------------------------------------
5 Bay 1 /dev/da1 35 HEALTHY S1Z1S6BY
5 Bay 2 /dev/da2 34 HEALTHY Z1ZAECJM
5 Bay 3 /dev/da19 17 HEALTHY S1Z1SB0L
5 Bay 4 /dev/da20 16 HEALTHY S1Z1SAYP
5 Bay 5 /dev/da3 33 HEALTHY Z1ZA74A4
5 Bay 6 /dev/da21 15 HEALTHY Z1ZAEQ13
5 Bay 7 /dev/da22 14 HEALTHY S1Z1SAF5
5 Bay 8 /dev/da23 13 HEALTHY S1Z1SB0C
5 Bay 9 /dev/da4 32 HEALTHY Z1ZAEPR8
5 Bay 10 /dev/da24 12 HEALTHY Z1ZAB3ZD
5 Bay 11 /dev/da25 11 HEALTHY S1Z1RYGS
5 Bay 12 /dev/da26 10 HEALTHY S1Z1SB0A
5 Bay 13 /dev/da5 31 HEALTHY Z1ZAEPS5
5 Bay 14 /dev/da6 30 HEALTHY Z1ZAF5GQ
5 Bay 15 /dev/da7 29 HEALTHY Z1ZAB40S
5 Bay 16 /dev/da27 9 HEALTHY Z1ZAF625
5 Bay 17 /dev/da8 28 HEALTHY Z1ZAEPJY
5 Bay 18 /dev/da9 27 HEALTHY Z1ZAF1LG
5 Bay 19 /dev/da10 26 HEALTHY Z1ZAF724
5 Bay 20 /dev/da28 8 HEALTHY Z1ZAF5W8
5 Bay 21 /dev/da11 25 HEALTHY Z1ZAEW1W
5 Bay 22 /dev/da12 24 HEALTHY Z1ZAF0CW
5 Bay 23 /dev/da29 7 HEALTHY Z1ZAF5VM
5 Bay 24 /dev/da30 6 HEALTHY Z1ZAF59X
5 Bay 25 /dev/da31 5 HEALTHY Z1ZAF21G
5 Bay 26 /dev/da32 4 HEALTHY Z1ZAF5QJ
5 Bay 27 /dev/da33 3 HEALTHY Z1ZAF58Y
5 Bay 28 /dev/da13 23 HEALTHY Z1ZAF6CG
5 Bay 29 /dev/da34 2 HEALTHY Z1ZAB3XJ
5 Bay 30 /dev/da14 22 HEALTHY S1Z1RYHB
5 Bay 31 /dev/da35 1 HEALTHY Z1ZAB3TQ
5 Bay 32 /dev/da15 21 HEALTHY Z1ZAEPYX
5 Bay 33 /dev/da36 0 HEALTHY Z1ZAF4Z0
5 Bay 34 /dev/da16 20 HEALTHY Z1ZAEPMC
5 Bay 35 /dev/da17 19 HEALTHY Z1ZAF4H4
5 Bay 36 /dev/da18 18 HEALTHY Z1ZAF6JA
-----------------------------------------------
Total: 36

Use isi_for_array to select node 4 and list its drives:

BIC-Isilon-Cluster-1# isi_for_array -n4 isi devices drive list
BIC-Isilon-Cluster-4: Lnn Location Device Lnum State Serial
BIC-Isilon-Cluster-4: -----------------------------------------------
BIC-Isilon-Cluster-4: 4 Bay 1 /dev/da1 35 HEALTHY S1Z1STTN
BIC-Isilon-Cluster-4: 4 Bay 2 /dev/da2 36 HEALTHY Z1Z9XE67
BIC-Isilon-Cluster-4: 4 Bay 3 /dev/da19 17 HEALTHY S1Z1NE5B
BIC-Isilon-Cluster-4: 4 Bay 4 /dev/da20 16 HEALTHY S1Z1QQBN
BIC-Isilon-Cluster-4: 4 Bay 5 /dev/da3 33 HEALTHY S1Z1RYJ0
BIC-Isilon-Cluster-4: 4 Bay 6 /dev/da21 15 HEALTHY S1Z1SL53
BIC-Isilon-Cluster-4: 4 Bay 7 /dev/da22 14 HEALTHY S1Z1QNVG
BIC-Isilon-Cluster-4: 4 Bay 8 /dev/da23 13 HEALTHY S1Z1R8TT
BIC-Isilon-Cluster-4: 4 Bay 9 /dev/da4 32 HEALTHY S1Z1SLDG
BIC-Isilon-Cluster-4: 4 Bay 10 /dev/da24 12 HEALTHY S1Z1RVGX
BIC-Isilon-Cluster-4: 4 Bay 11 /dev/da25 11 HEALTHY S1Z1QNSG
BIC-Isilon-Cluster-4: 4 Bay 12 /dev/da26 10 HEALTHY S1Z1NEGJ
BIC-Isilon-Cluster-4: 4 Bay 13 /dev/da5 31 HEALTHY S1Z1QR9E
BIC-Isilon-Cluster-4: 4 Bay 14 /dev/da6 30 HEALTHY S1Z1SL23
BIC-Isilon-Cluster-4: 4 Bay 15 /dev/da7 29 HEALTHY S1Z1NEPA
BIC-Isilon-Cluster-4: 4 Bay 16 /dev/da27 9 HEALTHY S1Z1SLAZ
BIC-Isilon-Cluster-4: 4 Bay 17 /dev/da8 28 HEALTHY S1Z1STT6
BIC-Isilon-Cluster-4: 4 Bay 18 /dev/da9 27 HEALTHY S1Z1SL2W
BIC-Isilon-Cluster-4: 4 Bay 19 /dev/da10 26 HEALTHY S1Z1SL4P
BIC-Isilon-Cluster-4: 4 Bay 20 /dev/da28 8 HEALTHY S1Z1QS4J
BIC-Isilon-Cluster-4: 4 Bay 21 /dev/da11 25 HEALTHY S1Z1SAXY
BIC-Isilon-Cluster-4: 4 Bay 22 /dev/da12 24 HEALTHY S1Z1SL9J
BIC-Isilon-Cluster-4: 4 Bay 23 /dev/da29 7 HEALTHY S1Z1NFS6
BIC-Isilon-Cluster-4: 4 Bay 24 /dev/da30 6 HEALTHY S1Z1NE26
BIC-Isilon-Cluster-4: 4 Bay 25 /dev/da31 5 HEALTHY S1Z1RX6H
BIC-Isilon-Cluster-4: 4 Bay 26 /dev/da32 4 HEALTHY S1Z1QRTK
BIC-Isilon-Cluster-4: 4 Bay 27 /dev/da33 3 HEALTHY S1Z1SAWG
BIC-Isilon-Cluster-4: 4 Bay 28 /dev/da13 23 HEALTHY S1Z1QR5B
BIC-Isilon-Cluster-4: 4 Bay 29 /dev/da34 2 HEALTHY S1Z1RVEK
BIC-Isilon-Cluster-4: 4 Bay 30 /dev/da14 22 HEALTHY S1Z1SLAN
BIC-Isilon-Cluster-4: 4 Bay 31 /dev/da35 1 HEALTHY S1Z1QPES
BIC-Isilon-Cluster-4: 4 Bay 32 /dev/da15 21 HEALTHY S1Z1SLBR
BIC-Isilon-Cluster-4: 4 Bay 33 /dev/da36 0 HEALTHY S1Z1SAXM
BIC-Isilon-Cluster-4: 4 Bay 34 /dev/da16 20 HEALTHY S1Z1RVJX
BIC-Isilon-Cluster-4: 4 Bay 35 /dev/da17 19 HEALTHY S1Z1RV62
BIC-Isilon-Cluster-4: 4 Bay 36 /dev/da18 18 HEALTHY S1Z1RYH9
BIC-Isilon-Cluster-4: -----------------------------------------------
BIC-Isilon-Cluster-4: Total: 36

Loop through the cluster nodes and grep for non-healthy drives:

BIC-Isilon-Cluster-4# isi_for_array "isi devices drive list| grep -iv healthy"
BIC-Isilon-Cluster-2: Lnn Location Device Lnum State Serial
BIC-Isilon-Cluster-2: -----------------------------------------------
BIC-Isilon-Cluster-2: -----------------------------------------------
BIC-Isilon-Cluster-2: Total: 36
BIC-Isilon-Cluster-3: Lnn Location Device Lnum State Serial
BIC-Isilon-Cluster-3: -----------------------------------------------
BIC-Isilon-Cluster-3: -----------------------------------------------
BIC-Isilon-Cluster-3: Total: 36
BIC-Isilon-Cluster-4: Lnn Location Device Lnum State Serial
BIC-Isilon-Cluster-4: -----------------------------------------------
BIC-Isilon-Cluster-4: 4 Bay 2 - N/A REPLACE -
BIC-Isilon-Cluster-4: -----------------------------------------------
BIC-Isilon-Cluster-4: Total: 36
BIC-Isilon-Cluster-1: Lnn Location Device Lnum State Serial
BIC-Isilon-Cluster-1: -----------------------------------------------
BIC-Isilon-Cluster-1: -----------------------------------------------
BIC-Isilon-Cluster-1: Total: 36
BIC-Isilon-Cluster-5: Lnn Location Device Lnum State Serial
BIC-Isilon-Cluster-5: -----------------------------------------------
BIC-Isilon-Cluster-5: -----------------------------------------------
BIC-Isilon-Cluster-5: Total: 36
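grep -iv healthy keeps the header lines, and it would also hide any line containing UNHEALTHY, should a drive ever report such a state. A Python sketch that parses the unfiltered isi_for_array isi devices drive list output and keeps only drive rows whose State column is not exactly HEALTHY. It assumes the State value is a single word, as in the listings above; the helper name is hypothetical:

```python
def non_healthy_drives(output: str) -> list[tuple[str, str, str]]:
    """Return (node, bay, state) for drive rows whose State is not HEALTHY."""
    found = []
    for line in output.splitlines():
        node, sep, rest = line.partition(": ")
        fields = rest.split()
        # Drive rows look like: <lnn> Bay <n> <device> <lnum> <state> <serial>
        if not sep or len(fields) != 7 or fields[1] != "Bay":
            continue  # header, separator, 'Total' and blank lines
        if fields[5] != "HEALTHY":
            found.append((node, f"Bay {fields[2]}", fields[5]))
    return found


listing = """\
BIC-Isilon-Cluster-4: Lnn Location Device Lnum State Serial
BIC-Isilon-Cluster-4: -----------------------------------------------
BIC-Isilon-Cluster-4: 4 Bay 1 /dev/da1 35 HEALTHY S1Z1STTN
BIC-Isilon-Cluster-4: 4 Bay 2 - N/A REPLACE -
BIC-Isilon-Cluster-4: -----------------------------------------------
BIC-Isilon-Cluster-4: Total: 36
"""
print(non_healthy_drives(listing))
```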

View Drive Firmware Status

BIC-Isilon-Cluster-5# isi devices drive firmware list --node-lnn all
Lnn Location Firmware Desired Model
-----------------------------------------------------
1 Bay 1 SNG4 - ST4000NM0033-9ZM170
1 Bay 2 SNG4 - ST4000NM0033-9ZM170
1 Bay 3 SNG4 - ST4000NM0033-9ZM170
1 Bay 4 SNG4 - ST4000NM0033-9ZM170
1 Bay 5 SNG4 - ST4000NM0033-9ZM170
1 Bay 6 SNG4 - ST4000NM0033-9ZM170
1 Bay 7 SNG4 - ST4000NM0033-9ZM170
1 Bay 8 SNG4 - ST4000NM0033-9ZM170
1 Bay 9 SNG4 - ST4000NM0033-9ZM170
1 Bay 10 SNG4 - ST4000NM0033-9ZM170
1 Bay 11 SNG4 - ST4000NM0033-9ZM170
1 Bay 12 SNG4 - ST4000NM0033-9ZM170
1 Bay 13 SNG4 - ST4000NM0033-9ZM170
1 Bay 14 SNG4 - ST4000NM0033-9ZM170
1 Bay 15 SNG4 - ST4000NM0033-9ZM170
1 Bay 16 SNG4 - ST4000NM0033-9ZM170
1 Bay 17 SNG4 - ST4000NM0033-9ZM170
1 Bay 18 SNG4 - ST4000NM0033-9ZM170
1 Bay 19 SNG4 - ST4000NM0033-9ZM170
1 Bay 20 SNG4 - ST4000NM0033-9ZM170
1 Bay 21 SNG4 - ST4000NM0033-9ZM170
1 Bay 22 SNG4 - ST4000NM0033-9ZM170
1 Bay 23 SNG4 - ST4000NM0033-9ZM170
1 Bay 24 SNG4 - ST4000NM0033-9ZM170
1 Bay 25 SNG4 - ST4000NM0033-9ZM170
1 Bay 26 SNG4 - ST4000NM0033-9ZM170
1 Bay 27 SNG4 - ST4000NM0033-9ZM170
1 Bay 28 SNG4 - ST4000NM0033-9ZM170
1 Bay 29 SNG4 - ST4000NM0033-9ZM170
1 Bay 30 SNG4 - ST4000NM0033-9ZM170
1 Bay 31 SNG4 - ST4000NM0033-9ZM170
1 Bay 32 SNG4 - ST4000NM0033-9ZM170
1 Bay 33 SNG4 - ST4000NM0033-9ZM170
1 Bay 34 SNG4 - ST4000NM0033-9ZM170
1 Bay 35 SNG4 - ST4000NM0033-9ZM170
1 Bay 36 SNG4 - ST4000NM0033-9ZM170
2 Bay 1 SNG4 - ST4000NM0033-9ZM170
2 Bay 2 SNG4 - ST4000NM0033-9ZM170
2 Bay 3 SNG4 - ST4000NM0033-9ZM170
2 Bay 4 SNG4 - ST4000NM0033-9ZM170
2 Bay 5 SNG4 - ST4000NM0033-9ZM170
2 Bay 6 SNG4 - ST4000NM0033-9ZM170
2 Bay 7 SNG4 - ST4000NM0033-9ZM170
2 Bay 8 SNG4 - ST4000NM0033-9ZM170
2 Bay 9 SNG4 - ST4000NM0033-9ZM170
2 Bay 10 SNG4 - ST4000NM0033-9ZM170
2 Bay 11 SNG4 - ST4000NM0033-9ZM170
2 Bay 12 SNG4 - ST4000NM0033-9ZM170
2 Bay 13 SNG4 - ST4000NM0033-9ZM170
2 Bay 14 SNG4 - ST4000NM0033-9ZM170
2 Bay 15 SNG4 - ST4000NM0033-9ZM170
2 Bay 16 SNG4 - ST4000NM0033-9ZM170
2 Bay 17 SNG4 - ST4000NM0033-9ZM170
2 Bay 18 SNG4 - ST4000NM0033-9ZM170
2 Bay 19 SNG4 - ST4000NM0033-9ZM170
2 Bay 20 SNG4 - ST4000NM0033-9ZM170
2 Bay 21 SNG4 - ST4000NM0033-9ZM170
2 Bay 22 SNG4 - ST4000NM0033-9ZM170
2 Bay 23 SNG4 - ST4000NM0033-9ZM170
2 Bay 24 SNG4 - ST4000NM0033-9ZM170
2 Bay 25 SNG4 - ST4000NM0033-9ZM170
2 Bay 26 SNG4 - ST4000NM0033-9ZM170
2 Bay 27 SNG4 - ST4000NM0033-9ZM170
2 Bay 28 SNG4 - ST4000NM0033-9ZM170
2 Bay 29 SNG4 - ST4000NM0033-9ZM170
2 Bay 30 SNG4 - ST4000NM0033-9ZM170
2 Bay 31 SNG4 - ST4000NM0033-9ZM170
2 Bay 32 SNG4 - ST4000NM0033-9ZM170
2 Bay 33 SNG4 - ST4000NM0033-9ZM170
2 Bay 34 SNG4 - ST4000NM0033-9ZM170
2 Bay 35 SNG4 - ST4000NM0033-9ZM170
2 Bay 36 SNG4 - ST4000NM0033-9ZM170
4 Bay 1 SNG4 - ST4000NM0033-9ZM170
4 Bay 2 SNG4 - ST4000NM0033-9ZM170
4 Bay 3 SNG4 - ST4000NM0033-9ZM170
4 Bay 4 SNG4 - ST4000NM0033-9ZM170
4 Bay 5 SNG4 - ST4000NM0033-9ZM170
4 Bay 6 SNG4 - ST4000NM0033-9ZM170
4 Bay 7 SNG4 - ST4000NM0033-9ZM170
4 Bay 8 SNG4 - ST4000NM0033-9ZM170
4 Bay 9 SNG4 - ST4000NM0033-9ZM170
4 Bay 10 SNG4 - ST4000NM0033-9ZM170
4 Bay 11 SNG4 - ST4000NM0033-9ZM170
4 Bay 12 SNG4 - ST4000NM0033-9ZM170
4 Bay 13 SNG4 - ST4000NM0033-9ZM170
4 Bay 14 SNG4 - ST4000NM0033-9ZM170
4 Bay 15 SNG4 - ST4000NM0033-9ZM170
4 Bay 16 SNG4 - ST4000NM0033-9ZM170
4 Bay 17 SNG4 - ST4000NM0033-9ZM170
4 Bay 18 SNG4 - ST4000NM0033-9ZM170
4 Bay 19 SNG4 - ST4000NM0033-9ZM170
4 Bay 20 SNG4 - ST4000NM0033-9ZM170
4 Bay 21 SNG4 - ST4000NM0033-9ZM170
4 Bay 22 SNG4 - ST4000NM0033-9ZM170
4 Bay 23 SNG4 - ST4000NM0033-9ZM170
4 Bay 24 SNG4 - ST4000NM0033-9ZM170
4 Bay 25 SNG4 - ST4000NM0033-9ZM170
4 Bay 26 SNG4 - ST4000NM0033-9ZM170
4 Bay 27 SNG4 - ST4000NM0033-9ZM170
4 Bay 28 SNG4 - ST4000NM0033-9ZM170
4 Bay 29 SNG4 - ST4000NM0033-9ZM170
4 Bay 30 SNG4 - ST4000NM0033-9ZM170
4 Bay 31 SNG4 - ST4000NM0033-9ZM170
4 Bay 32 SNG4 - ST4000NM0033-9ZM170
4 Bay 33 SNG4 - ST4000NM0033-9ZM170
4 Bay 34 SNG4 - ST4000NM0033-9ZM170
4 Bay 35 SNG4 - ST4000NM0033-9ZM170
4 Bay 36 SNG4 - ST4000NM0033-9ZM170
3 Bay 1 SNG4 - ST4000NM0033-9ZM170
3 Bay 2 SNG4 - ST4000NM0033-9ZM170
3 Bay 3 SNG4 - ST4000NM0033-9ZM170
3 Bay 4 SNG4 - ST4000NM0033-9ZM170
3 Bay 5 SNG4 - ST4000NM0033-9ZM170
3 Bay 6 SNG4 - ST4000NM0033-9ZM170
3 Bay 7 SNG4 - ST4000NM0033-9ZM170
3 Bay 8 SNG4 - ST4000NM0033-9ZM170
3 Bay 9 SNG4 - ST4000NM0033-9ZM170
3 Bay 10 SNG4 - ST4000NM0033-9ZM170
3 Bay 11 SNG4 - ST4000NM0033-9ZM170
3 Bay 12 SNG4 - ST4000NM0033-9ZM170
3 Bay 13 SNG4 - ST4000NM0033-9ZM170
3 Bay 14 SNG4 - ST4000NM0033-9ZM170
3 Bay 15 SNG4 - ST4000NM0033-9ZM170
3 Bay 16 SNG4 - ST4000NM0033-9ZM170
3 Bay 17 SNG4 - ST4000NM0033-9ZM170
3 Bay 18 SNG4 - ST4000NM0033-9ZM170
3 Bay 19 SNG4 - ST4000NM0033-9ZM170
3 Bay 20 SNG4 - ST4000NM0033-9ZM170
3 Bay 21 SNG4 - ST4000NM0033-9ZM170
3 Bay 22 SNG4 - ST4000NM0033-9ZM170
3 Bay 23 SNG4 - ST4000NM0033-9ZM170
3 Bay 24 SNG4 - ST4000NM0033-9ZM170
3 Bay 25 SNG4 - ST4000NM0033-9ZM170
3 Bay 26 SNG4 - ST4000NM0033-9ZM170
3 Bay 27 SNG4 - ST4000NM0033-9ZM170
3 Bay 28 SNG4 - ST4000NM0033-9ZM170
3 Bay 29 SNG4 - ST4000NM0033-9ZM170
3 Bay 30 SNG4 - ST4000NM0033-9ZM170
3 Bay 31 SNG4 - ST4000NM0033-9ZM170
3 Bay 32 SNG4 - ST4000NM0033-9ZM170
3 Bay 33 SNG4 - ST4000NM0033-9ZM170
3 Bay 34 SNG4 - ST4000NM0033-9ZM170
3 Bay 35 SNG4 - ST4000NM0033-9ZM170
3 Bay 36 SNG4 - ST4000NM0033-9ZM170
5 Bay 1 SNG4 - ST4000NM0033-9ZM170
5 Bay 2 SNG4 - ST4000NM0033-9ZM170
5 Bay 3 SNG4 - ST4000NM0033-9ZM170
5 Bay 4 SNG4 - ST4000NM0033-9ZM170
5 Bay 5 SNG4 - ST4000NM0033-9ZM170
5 Bay 6 SNG4 - ST4000NM0033-9ZM170
5 Bay 7 SNG4 - ST4000NM0033-9ZM170
5 Bay 8 SNG4 - ST4000NM0033-9ZM170
5 Bay 9 SNG4 - ST4000NM0033-9ZM170
5 Bay 10 SNG4 - ST4000NM0033-9ZM170
5 Bay 11 SNG4 - ST4000NM0033-9ZM170
5 Bay 12 SNG4 - ST4000NM0033-9ZM170
5 Bay 13 SNG4 - ST4000NM0033-9ZM170
5 Bay 14 SNG4 - ST4000NM0033-9ZM170
5 Bay 15 SNG4 - ST4000NM0033-9ZM170
5 Bay 16 SNG4 - ST4000NM0033-9ZM170
5 Bay 17 SNG4 - ST4000NM0033-9ZM170
5 Bay 18 SNG4 - ST4000NM0033-9ZM170
5 Bay 19 SNG4 - ST4000NM0033-9ZM170
5 Bay 20 SNG4 - ST4000NM0033-9ZM170
5 Bay 21 SNG4 - ST4000NM0033-9ZM170
5 Bay 22 SNG4 - ST4000NM0033-9ZM170
5 Bay 23 SNG4 - ST4000NM0033-9ZM170
5 Bay 24 SNG4 - ST4000NM0033-9ZM170
5 Bay 25 SNG4 - ST4000NM0033-9ZM170
5 Bay 26 SNG4 - ST4000NM0033-9ZM170
5 Bay 27 SNG4 - ST4000NM0033-9ZM170
5 Bay 28 SNG4 - ST4000NM0033-9ZM170
5 Bay 29 SNG4 - ST4000NM0033-9ZM170
5 Bay 30 SNG4 - ST4000NM0033-9ZM170
5 Bay 31 SNG4 - ST4000NM0033-9ZM170
5 Bay 32 SNG4 - ST4000NM0033-9ZM170
5 Bay 33 SNG4 - ST4000NM0033-9ZM170
5 Bay 34 SNG4 - ST4000NM0033-9ZM170
5 Bay 35 SNG4 - ST4000NM0033-9ZM170
5 Bay 36 SNG4 - ST4000NM0033-9ZM170
-----------------------------------------------------
Total: 180
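With 180 rows, eyeballing the list is error-prone. A Python sketch that flags any drive whose firmware/model pair differs from the most common one, which is how a replacement from another vendor (such as the Hitachi HUS726040ALA610 in the disk replacement procedure below) would stand out. The last sample row is hypothetical: its firmware is unknown and shown as ????. The helper name is also hypothetical:

```python
from collections import Counter


def odd_drives(output: str) -> list[str]:
    """List drives whose (firmware, model) differs from the most common combination."""
    rows = []
    for line in output.splitlines():
        fields = line.split()
        # Rows look like: <lnn> Bay <n> <firmware> <desired> <model>
        if len(fields) == 6 and fields[1] == "Bay":
            rows.append((fields[0], fields[2], fields[3], fields[5]))
    if not rows:
        return []
    common, _ = Counter((fw, model) for _, _, fw, model in rows).most_common(1)[0]
    return [f"LNN {lnn} Bay {bay}: {fw} {model}"
            for lnn, bay, fw, model in rows if (fw, model) != common]


# The last drive row is hypothetical (firmware unknown), added only to show a mismatch.
sample = """\
Lnn Location Firmware Desired Model
-----------------------------------------------------
1 Bay 1 SNG4 - ST4000NM0033-9ZM170
1 Bay 2 SNG4 - ST4000NM0033-9ZM170
5 Bay 3 SNG4 - ST4000NM0033-9ZM170
5 Bay 4 ???? - HUS726040ALA610
-----------------------------------------------------
Total: 4
"""
print(odd_drives(sample))
```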

Add a drive to a node:

BIC-Isilon-Cluster-5# isi devices add <bay> --node-lnn=<node#>

Disk Failure Replacement Procedure

- The disk in bay 4 of logical node number 5 (node 5) is bad.

### Remove the bad disk and insert the new one.

# List disk devices on node 5:

BIC-Isilon-Cluster-4# isi_for_array -n5 isi devices drive list

BIC-Isilon-Cluster-5: Lnn Location Device Lnum State Serial

BIC-Isilon-Cluster-5: -----------------------------------------------

BIC-Isilon-Cluster-5: 5 Bay 1 /dev/da1 35 HEALTHY S1Z1S6BY

BIC-Isilon-Cluster-5: 5 Bay 2 /dev/da2 34 HEALTHY Z1ZAECJM

BIC-Isilon-Cluster-5: 5 Bay 3 /dev/da19 17 HEALTHY S1Z1SB0L

BIC-Isilon-Cluster-5: 5 Bay 4 /dev/da20 N/A NEW K4K73KGB

BIC-Isilon-Cluster-5: 5 Bay 5 /dev/da3 33 HEALTHY Z1ZA74A4

BIC-Isilon-Cluster-5: 5 Bay 6 /dev/da21 15 HEALTHY Z1ZAEQ13

BIC-Isilon-Cluster-5: 5 Bay 7 /dev/da22 14 HEALTHY S1Z1SAF5

BIC-Isilon-Cluster-5: 5 Bay 8 /dev/da23 13 HEALTHY S1Z1SB0C

BIC-Isilon-Cluster-5: 5 Bay 9 /dev/da4 32 HEALTHY Z1ZAEPR8

BIC-Isilon-Cluster-5: 5 Bay 10 /dev/da24 36 HEALTHY S1Z26JWM

BIC-Isilon-Cluster-5: 5 Bay 11 /dev/da25 11 HEALTHY S1Z1RYGS

BIC-Isilon-Cluster-5: 5 Bay 12 /dev/da26 10 HEALTHY S1Z1SB0A

BIC-Isilon-Cluster-5: 5 Bay 13 /dev/da5 31 HEALTHY Z1ZAEPS5

BIC-Isilon-Cluster-5: 5 Bay 14 /dev/da6 30 HEALTHY Z1ZAF5GQ

BIC-Isilon-Cluster-5: 5 Bay 15 /dev/da7 29 HEALTHY Z1ZAB40S

BIC-Isilon-Cluster-5: 5 Bay 16 /dev/da27 9 HEALTHY Z1ZAF625

BIC-Isilon-Cluster-5: 5 Bay 17 /dev/da8 28 HEALTHY Z1ZAEPJY

BIC-Isilon-Cluster-5: 5 Bay 18 /dev/da9 27 HEALTHY Z1ZAF1LG

BIC-Isilon-Cluster-5: 5 Bay 19 /dev/da10 26 HEALTHY Z1ZAF724

BIC-Isilon-Cluster-5: 5 Bay 20 /dev/da28 8 HEALTHY Z1ZAF5W8

BIC-Isilon-Cluster-5: 5 Bay 21 /dev/da11 25 HEALTHY Z1ZAEW1W

BIC-Isilon-Cluster-5: 5 Bay 22 /dev/da12 24 HEALTHY Z1ZAF0CW

BIC-Isilon-Cluster-5: 5 Bay 23 /dev/da29 7 HEALTHY Z1ZAF5VM

BIC-Isilon-Cluster-5: 5 Bay 24 /dev/da30 6 HEALTHY Z1ZAF59X

BIC-Isilon-Cluster-5: 5 Bay 25 /dev/da31 5 HEALTHY Z1ZAF21G

BIC-Isilon-Cluster-5: 5 Bay 26 /dev/da32 4 HEALTHY Z1ZAF5QJ

BIC-Isilon-Cluster-5: 5 Bay 27 /dev/da33 3 HEALTHY Z1ZAF58Y

BIC-Isilon-Cluster-5: 5 Bay 28 /dev/da13 23 HEALTHY Z1ZAF6CG

BIC-Isilon-Cluster-5: 5 Bay 29 /dev/da34 2 HEALTHY Z1ZAB3XJ

BIC-Isilon-Cluster-5: 5 Bay 30 /dev/da14 22 HEALTHY S1Z1RYHB

BIC-Isilon-Cluster-5: 5 Bay 31 /dev/da35 1 HEALTHY Z1ZAB3TQ

BIC-Isilon-Cluster-5: 5 Bay 32 /dev/da15 21 HEALTHY Z1ZAEPYX

BIC-Isilon-Cluster-5: 5 Bay 33 /dev/da36 0 HEALTHY Z1ZAF4Z0

BIC-Isilon-Cluster-5: 5 Bay 34 /dev/da16 20 HEALTHY Z1ZAEPMC

BIC-Isilon-Cluster-5: 5 Bay 35 /dev/da17 19 HEALTHY Z1ZAF4H4

BIC-Isilon-Cluster-5: 5 Bay 36 /dev/da18 18 HEALTHY Z1ZAF6JA

BIC-Isilon-Cluster-5: -----------------------------------------------

BIC-Isilon-Cluster-5: Total: 36

# Check cluster status:

BIC-Isilon-Cluster-4# isi status

Cluster Name: BIC-Isilon-Cluster

Cluster Health: [ ATTN]

Cluster Storage: HDD SSD Storage

Size: 638.0T (645.7T Raw) 0 (0 Raw)

VHS Size: 7.7T

Used: 204.8T (32%) 0 (n/a)

Avail: 433.2T (68%) 0 (n/a)

Health Throughput (bps) HDD Storage SSD Storage

ID |IP Address |DASR | In Out Total| Used / Size |Used / Size

---+---------------+-----+-----+-----+-----+-----------------+-----------------

1|172.16.10.20 | OK |28.8M|83.3k|28.9M|41.0T/ 130T( 32%)|(No Storage SSDs)

2|172.16.10.21 | OK | 1.2M| 3.7M| 4.9M|40.9T/ 130T( 32%)|(No Storage SSDs)

3|172.16.10.22 | OK | 3.2k| 167k| 170k|41.0T/ 130T( 32%)|(No Storage SSDs)

4|172.16.10.23 | OK | 861k|49.2M|50.0M|41.0T/ 130T( 32%)|(No Storage SSDs)

5|172.16.10.24 |-A-- | 858k| 1.6M| 2.5M|41.0T/ 126T( 32%)|(No Storage SSDs)

---+---------------+-----+-----+-----+-----+-----------------+-----------------

Cluster Totals: |31.7M|54.7M|86.4M| 205T/ 638T( 32%)|(No Storage SSDs)

Health Fields: D = Down, A = Attention, S = Smartfailed, R = Read-Only

Critical Events:

10/14 23:24 5 One or more drives (bay(s) 4 / location / type(s) HDD) are...

Cluster Job Status:

No running jobs.

No paused or waiting jobs.
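The Health column packs the four DASR flags from the legend into a single field. It reads `OK` when the node is fine. Otherwise it appears to be a four-character string with one position per flag, for example `-A--` (Attention) for node 5 above. The minimal sketch below decodes that field and lists the nodes that are not OK. It assumes that positional reading and the `ID|IP Address |DASR |...` row layout shown above. `decode_dasr` and `nodes_needing_attention` are hypothetical names.

```bash
#!/usr/bin/env bash
# decode_dasr FIELD: turn an `isi status` health field ("OK", "-A--", "D-S-")
# into words, assuming positions 1..4 map to D, A, S, R as in the legend.
decode_dasr() {
    local field=$1 out="" i
    local -a names=(Down Attention Smartfailed Read-Only)
    if [ "$field" = "OK" ]; then
        echo OK; return
    fi
    if [ "${#field}" -ne 4 ]; then
        echo "unknown($field)"; return
    fi
    for i in 0 1 2 3; do
        if [ "${field:i:1}" != "-" ]; then
            out+="${out:+,}${names[i]}"   # comma-separate multiple flags
        fi
    done
    echo "${out:-none}"
}

# nodes_needing_attention: read `isi status` on stdin and report every node
# row (numeric ID in the first |-separated column) whose health is not OK.
nodes_needing_attention() {
    local id ip health rest
    while IFS='|' read -r id ip health rest; do
        id=${id//[[:space:]]/}
        ip=${ip//[[:space:]]/}
        health=${health//[[:space:]]/}
        [[ $id =~ ^[0-9]+$ ]] || continue
        if [ "$health" != "OK" ]; then
            echo "node $id ($ip): $(decode_dasr "$health")"
        fi
    done
}
```

On the output above, `isi status | nodes_needing_attention` would print `node 5 (172.16.10.24): Attention`.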

# Try to add disk drive in bay 4 of node 5:

BIC-Isilon-Cluster-4# isi devices add 4 --node-lnn=5

You are about to add drive bay4, on node lnn 5. Are you sure? (yes/[no]): yes

Initiating add on bay4

The drive in bay4 was not added to the file system because it is not formatted.

Format the drive to add it to the file system by running the following command,

where <bay> is the bay number of the drive: isi devices drive format <bay>

# Oops. It refuses: the drive is not formatted. Note that the new disk is a Hitachi drive while

# the cluster is built of Seagate ES3 drives.

# Log in directly to node 5, as it's easier to work from there.

BIC-Isilon-Cluster-4# ssh BIC-Isilon-Cluster-5

Password:

Last login: Sun Oct 15 02:31:18 2017 from 172.16.10.160

Copyright (c) 2001-2016 EMC Corporation. All Rights Reserved.

Copyright (c) 1992-2016 The FreeBSD Project.

Copyright (c) 1979, 1980, 1983, 1986, 1988, 1989, 1991, 1992, 1993, 1994

The Regents of the University of California. All rights reserved.

Isilon OneFS v8.0.0.4

# Check the state of the drive in bay 4:

BIC-Isilon-Cluster-5# isi devices drive view 4

Lnn: 5

Location: Bay 4

Lnum: N/A

Device: /dev/da20

Baynum: 4

Handle: 333

Serial: K4K73KGB

Model: HUS726040ALA610

Tech: SATA

Media: HDD

Blocks: 7814037168

Logical Block Length: 512

Physical Block Length: 512

WWN: 0000000000000000

State: NEW

Purpose: UNKNOWN

Purpose Description: A drive whose purpose is unknown

Present: Yes

# Compare it with the healthy drive in bay 3:

BIC-Isilon-Cluster-5# isi devices drive view 3

Lnn: 5

Location: Bay 3

Lnum: 17

Device: /dev/da19

Baynum: 3

Handle: 353

Serial: S1Z1SB0L

Model: ST4000NM0033-9ZM170

Tech: SATA

Media: HDD

Blocks: 7814037168

Logical Block Length: 512

Physical Block Length: 512

WWN: 5000C5008CAD9092

State: HEALTHY

Purpose: STORAGE

Purpose Description: A drive used for normal data storage operation

Present: Yes

# Format the drive in bay 4:

BIC-Isilon-Cluster-5# isi devices drive format 4

You are about to format drive bay4, on node lnn 5. Are you sure? (yes/[no]): yes

BIC-Isilon-Cluster-5# isi devices drive view 4

Lnn: 5

Location: Bay 4

Lnum: 37

Device: /dev/da20

Baynum: 4

Handle: 332

Serial: K4K73KGB

Model: HUS726040ALA610

Tech: SATA

Media: HDD

Blocks: 7814037168

Logical Block Length: 512

Physical Block Length: 512

WWN: 0000000000000000

State: NONE

Purpose: NONE

Purpose Description: A drive that doesn't yet have a purpose

Present: Yes

# The drive now shows up as 'PREPARING':

BIC-Isilon-Cluster-5# isi devices drive list

Lnn Location Device Lnum State Serial

-------------------------------------------------

5 Bay 1 /dev/da1 35 HEALTHY S1Z1S6BY

5 Bay 2 /dev/da2 34 HEALTHY Z1ZAECJM

5 Bay 3 /dev/da19 17 HEALTHY S1Z1SB0L

5 Bay 4 /dev/da20 37 PREPARING K4K73KGB

5 Bay 5 /dev/da3 33 HEALTHY Z1ZA74A4

5 Bay 6 /dev/da21 15 HEALTHY Z1ZAEQ13

5 Bay 7 /dev/da22 14 HEALTHY S1Z1SAF5

5 Bay 8 /dev/da23 13 HEALTHY S1Z1SB0C

5 Bay 9 /dev/da4 32 HEALTHY Z1ZAEPR8

5 Bay 10 /dev/da24 36 HEALTHY S1Z26JWM

5 Bay 11 /dev/da25 11 HEALTHY S1Z1RYGS

5 Bay 12 /dev/da26 10 HEALTHY S1Z1SB0A

5 Bay 13 /dev/da5 31 HEALTHY Z1ZAEPS5

5 Bay 14 /dev/da6 30 HEALTHY Z1ZAF5GQ

5 Bay 15 /dev/da7 29 HEALTHY Z1ZAB40S

5 Bay 16 /dev/da27 9 HEALTHY Z1ZAF625

5 Bay 17 /dev/da8 28 HEALTHY Z1ZAEPJY

5 Bay 18 /dev/da9 27 HEALTHY Z1ZAF1LG

5 Bay 19 /dev/da10 26 HEALTHY Z1ZAF724

5 Bay 20 /dev/da28 8 HEALTHY Z1ZAF5W8

5 Bay 21 /dev/da11 25 HEALTHY Z1ZAEW1W

5 Bay 22 /dev/da12 24 HEALTHY Z1ZAF0CW

5 Bay 23 /dev/da29 7 HEALTHY Z1ZAF5VM

5 Bay 24 /dev/da30 6 HEALTHY Z1ZAF59X

5 Bay 25 /dev/da31 5 HEALTHY Z1ZAF21G

5 Bay 26 /dev/da32 4 HEALTHY Z1ZAF5QJ

5 Bay 27 /dev/da33 3 HEALTHY Z1ZAF58Y

5 Bay 28 /dev/da13 23 HEALTHY Z1ZAF6CG

5 Bay 29 /dev/da34 2 HEALTHY Z1ZAB3XJ

5 Bay 30 /dev/da14 22 HEALTHY S1Z1RYHB

5 Bay 31 /dev/da35 1 HEALTHY Z1ZAB3TQ

5 Bay 32 /dev/da15 21 HEALTHY Z1ZAEPYX

5 Bay 33 /dev/da36 0 HEALTHY Z1ZAF4Z0

5 Bay 34 /dev/da16 20 HEALTHY Z1ZAEPMC

5 Bay 35 /dev/da17 19 HEALTHY Z1ZAF4H4

5 Bay 36 /dev/da18 18 HEALTHY Z1ZAF6JA

-------------------------------------------------

# Add the drive in bay 4:

BIC-Isilon-Cluster-5# isi devices drive add 4

You are about to add drive bay4, on node lnn 5. Are you sure? (yes/[no]): yes

Initiating add on bay4

The add operation is in-progress.

A OneFS-formatted drive was found in bay4 and is being added to the file system.

Wait a few minutes and then list all drives to verify that the add operation completed successfully.
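The wait can be scripted instead of re-running the drive list by hand. This is a minimal sketch to run on the node that owns the drive (node 5 here). It assumes `isi devices drive view <bay>` prints a `State:` line, as in the transcripts in these notes. The function name, the 30-second interval and the 40-try limit are arbitrary choices, not OneFS defaults.

```bash
#!/usr/bin/env bash
# wait_for_bay_healthy BAY [INTERVAL] [TRIES]
# Polls `isi devices drive view BAY` on the local node until its State line
# reads HEALTHY. Returns 0 on success, 1 if it gives up after TRIES polls.
wait_for_bay_healthy() {
    local bay=$1 interval=${2:-30} tries=${3:-40} state n
    for ((n = 1; n <= tries; n++)); do
        # pick the value of the "State:" line, trimming trailing blanks
        state=$(isi devices drive view "$bay" |
                awk -F': *' '$1 ~ /^ *State$/ { sub(/ +$/, "", $2); print $2 }')
        echo "try $n: bay $bay is ${state:-unknown}"
        if [ "$state" = "HEALTHY" ]; then
            return 0
        fi
        sleep "$interval"
    done
    return 1
}
```

`wait_for_bay_healthy 4` keeps polling bay 4. It returns 0 once the bay reports HEALTHY, or 1 after about 20 minutes.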

# There was an event while this was going on.

# Not sure what it means, as bay 4 no longer holds a Seagate ES3 (the model named in the event, ST4000NM0033-9ZM170, is the Seagate model of the drive in bay 3).

BIC-Isilon-Cluster-5# isi event groups view 4882746

ID: 4882746

Started: 10/18 14:20

Causes Long: Drive in bay 4 location Bay 4 is unknown model ST4000NM0033-9ZM170

Lnn: 5

Devid: 6

Last Event: 2017-10-18T14:20:30

Ignore: No

Ignore Time: Never

Resolved: Yes

Resolve Time: 2017-10-18T14:18:15

Ended: 10/18 14:18

Events: 2

Severity: warning

# After a few minutes, the drive shows up as HEALTHY:

BIC-Isilon-Cluster-5# isi devices drive list

Lnn Location Device Lnum State Serial

-----------------------------------------------

5 Bay 1 /dev/da1 35 HEALTHY S1Z1S6BY

5 Bay 2 /dev/da2 34 HEALTHY Z1ZAECJM

5 Bay 3 /dev/da19 17 HEALTHY S1Z1SB0L

5 Bay 4 /dev/da20 37 HEALTHY K4K73KGB

5 Bay 5 /dev/da3 33 HEALTHY Z1ZA74A4

5 Bay 6 /dev/da21 15 HEALTHY Z1ZAEQ13

5 Bay 7 /dev/da22 14 HEALTHY S1Z1SAF5

5 Bay 8 /dev/da23 13 HEALTHY S1Z1SB0C

5 Bay 9 /dev/da4 32 HEALTHY Z1ZAEPR8

5 Bay 10 /dev/da24 36 HEALTHY S1Z26JWM

5 Bay 11 /dev/da25 11 HEALTHY S1Z1RYGS

5 Bay 12 /dev/da26 10 HEALTHY S1Z1SB0A

5 Bay 13 /dev/da5 31 HEALTHY Z1ZAEPS5

5 Bay 14 /dev/da6 30 HEALTHY Z1ZAF5GQ

5 Bay 15 /dev/da7 29 HEALTHY Z1ZAB40S

5 Bay 16 /dev/da27 9 HEALTHY Z1ZAF625

5 Bay 17 /dev/da8 28 HEALTHY Z1ZAEPJY

5 Bay 18 /dev/da9 27 HEALTHY Z1ZAF1LG

5 Bay 19 /dev/da10 26 HEALTHY Z1ZAF724

5 Bay 20 /dev/da28 8 HEALTHY Z1ZAF5W8

5 Bay 21 /dev/da11 25 HEALTHY Z1ZAEW1W

5 Bay 22 /dev/da12 24 HEALTHY Z1ZAF0CW

5 Bay 23 /dev/da29 7 HEALTHY Z1ZAF5VM

5 Bay 24 /dev/da30 6 HEALTHY Z1ZAF59X

5 Bay 25 /dev/da31 5 HEALTHY Z1ZAF21G

5 Bay 26 /dev/da32 4 HEALTHY Z1ZAF5QJ

5 Bay 27 /dev/da33 3 HEALTHY Z1ZAF58Y

5 Bay 28 /dev/da13 23 HEALTHY Z1ZAF6CG

5 Bay 29 /dev/da34 2 HEALTHY Z1ZAB3XJ

5 Bay 30 /dev/da14 22 HEALTHY S1Z1RYHB

5 Bay 31 /dev/da35 1 HEALTHY Z1ZAB3TQ

5 Bay 32 /dev/da15 21 HEALTHY Z1ZAEPYX

5 Bay 33 /dev/da36 0 HEALTHY Z1ZAF4Z0

5 Bay 34 /dev/da16 20 HEALTHY Z1ZAEPMC

5 Bay 35 /dev/da17 19 HEALTHY Z1ZAF4H4

5 Bay 36 /dev/da18 18 HEALTHY Z1ZAF6JA

-----------------------------------------------

Total: 36

BIC-Isilon-Cluster-5# isi devices drive view 4

Lnn: 5

Location: Bay 4

Lnum: 37

Device: /dev/da20

Baynum: 4

Handle: 332

Serial: K4K73KGB

Model: HUS726040ALA610

Tech: SATA

Media: HDD

Blocks: 7814037168

Logical Block Length: 512

Physical Block Length: 512

WWN: 0000000000000000

State: HEALTHY

Purpose: STORAGE

Purpose Description: A drive used for normal data storage operation

Present: Yes

# Check cluster state after resolving the event group.

# I resolved it through the web UI, as that is easier.

BIC-Isilon-Cluster-5# isi status

Cluster Name: BIC-Isilon-Cluster

Cluster Health: [ OK ]

Cluster Storage: HDD SSD Storage

Size: 641.6T (649.3T Raw) 0 (0 Raw)

VHS Size: 7.7T

Used: 204.8T (32%) 0 (n/a)

Avail: 436.8T (68%) 0 (n/a)

Health Throughput (bps) HDD Storage SSD Storage

ID |IP Address |DASR | In Out Total| Used / Size |Used / Size

---+---------------+-----+-----+-----+-----+-----------------+-----------------

1|172.16.10.20 | OK | 500k| 0| 500k|41.0T/ 130T( 32%)|(No Storage SSDs)

2|172.16.10.21 | OK | 4.6k| 320k| 325k|40.9T/ 130T( 32%)|(No Storage SSDs)

3|172.16.10.22 | OK | 0| 0| 0|41.0T/ 130T( 32%)|(No Storage SSDs)

4|172.16.10.23 | OK | 321k|83.4k| 404k|41.0T/ 130T( 32%)|(No Storage SSDs)

5|172.16.10.24 | OK | 1.1M| 0| 1.1M|41.0T/ 130T( 32%)|(No Storage SSDs)

---+---------------+-----+-----+-----+-----+-----------------+-----------------

Cluster Totals: | 1.9M| 403k| 2.3M| 205T/ 642T( 32%)|(No Storage SSDs)

Health Fields: D = Down, A = Attention, S = Smartfailed, R = Read-Only

Critical Events:

Cluster Job Status:

Running jobs:

Job Impact Pri Policy Phase Run Time

-------------------------- ------ --- ---------- ----- ----------

MultiScan[4097] Low 4 LOW 1/4 0:02:18

No paused or waiting jobs.

No failed jobs.

Recent job results:

Time Job Event

--------------- -------------------------- ------------------------------

10/18 04:00:22 ShadowStoreProtect[4096] Succeeded (LOW)

10/18 03:02:15 SnapshotDelete[4095] Succeeded (MEDIUM)

10/18 02:00:04 WormQueue[4094] Succeeded (LOW)

10/18 01:01:37 SnapshotDelete[4093] Succeeded (MEDIUM)

10/18 00:31:08 SnapshotDelete[4092] Succeeded (MEDIUM)

10/18 00:01:39 SnapshotDelete[4091] Succeeded (MEDIUM)

10/17 23:04:54 FSAnalyze[4089] Succeeded (LOW)

10/17 22:33:17 SnapshotDelete[4090] Succeeded (MEDIUM)

11/15 14:53:34 MultiScan[1254] MultiScan[1254] Failed

10/06 14:45:55 ChangelistCreate[975] ChangelistCreate[975] Failed

Network

BIC-Isilon-Cluster-1# isi config

Welcome to the Isilon IQ configuration console.

Copyright (c) 2001-2016 EMC Corporation. All Rights Reserved.

Enter 'help' to see list of available commands.

Enter 'help <command>' to see help for a specific command.

Enter 'quit' at any prompt to discard changes and exit.

Node build: Isilon OneFS v8.0.0.0 B_8_0_0_037(RELEASE)

Node serial number: SX410-301608-0260

BIC-Isilon-Cluster >>> status

Configuration for 'BIC-Isilon-Cluster'

Local machine:

----------------------------------+-----------------------------------------

Node LNN : 1 | Date : 2016/06/09 15:53:01 EDT

----------------------------------+-----------------------------------------

Interface : ib1 | MAC : 00:00:00:49:fe:80:00:00:00:00:00:00:7c:fe:90:03:00:9e:e9:a2

IP Address : 10.0.1.1 | MAC Options : none

----------------------------------+-----------------------------------------

Interface : ib0 | MAC : 00:00:00:48:fe:80:00:00:00:00:00:00:7c:fe:90:03:00:9e:e9:a1

IP Address : 10.0.2.1 | MAC Options : none

----------------------------------+-----------------------------------------

Interface : lo0 | MAC : 00:00:00:00:00:00

IP Address : 10.0.3.1 | MAC Options : none

----------------------------------+-----------------------------------------

Network:

----------------------------------+-----------------------------------------

JoinMode : Manual

Interfaces:

----------------------------------+-----------------------------------------

Interface : int-a | Flags : enabled_ok

Netmask : 255.255.255.0 | MTU : N/A

----------------+-----------------+------------------+----------------------

Low IP | High IP | Allocated | Free

----------------+-----------------+------------------+----------------------

10.0.1.1 | 10.0.1.254 | 5 | 249

----------------+-----------------+------------------+----------------------

Interface : int-b | Flags : enabled_ok

Netmask : 255.255.255.0 | MTU : N/A

----------------+-----------------+------------------+----------------------

Low IP | High IP | Allocated | Free

----------------+-----------------+------------------+----------------------

10.0.2.1 | 10.0.2.254 | 5 | 249

----------------+-----------------+------------------+----------------------

Interface : lpbk | Flags : enabled_ok cluster_traffic failover

Netmask : 255.255.255.0 | MTU : 1500

----------------+-----------------+------------------+----------------------

Low IP | High IP | Allocated | Free

----------------+-----------------+------------------+----------------------

10.0.3.1 | 10.0.3.254 | 5 | 249

----------------+-----------------+------------------+----------------------

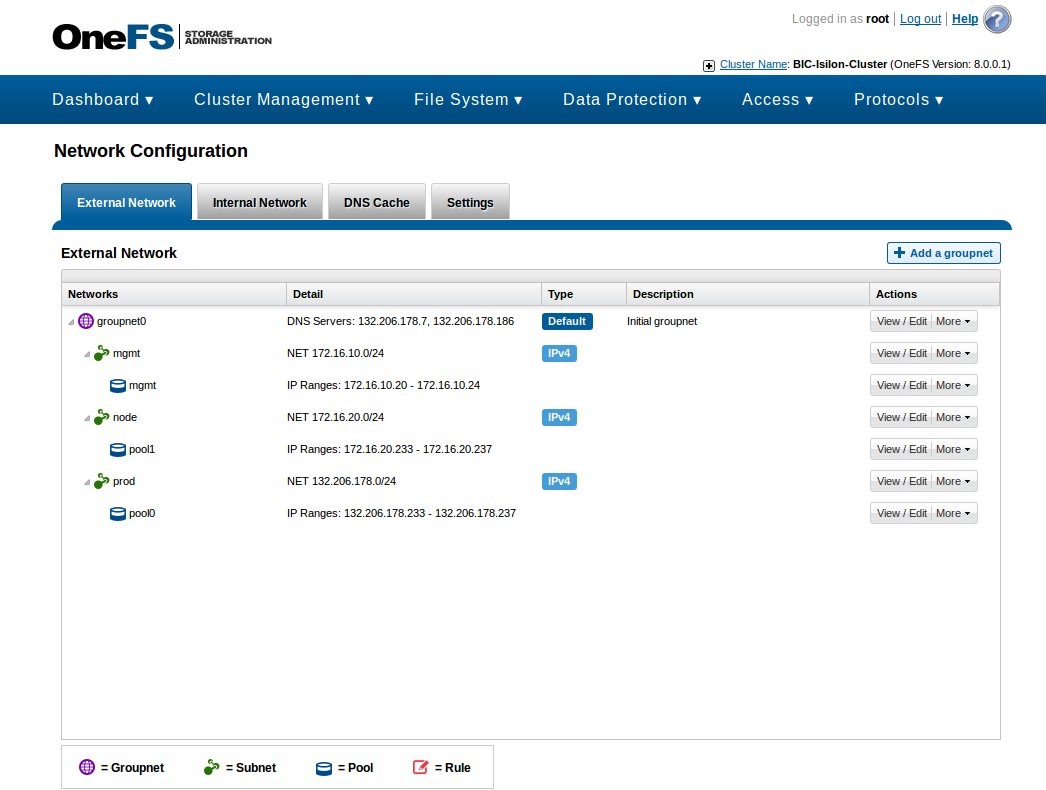

- Initial groupnet and DNS client settings:

BIC-Isilon-Cluster-4# isi network groupnets list

ID DNS Cache Enabled DNS Search DNS Servers Subnets

-----------------------------------------------------------------

groupnet0 True - 132.206.178.7 mgmt

132.206.178.186 prod

node

-----------------------------------------------------------------

Total: 1

BIC-Isilon-Cluster-4# isi network groupnets view groupnet0

ID: groupnet0

Name: groupnet0

Description: Initial groupnet

DNS Cache Enabled: True

DNS Options: -

DNS Search: -

DNS Servers: 132.206.178.7, 132.206.178.186

Server Side DNS Search: True

Subnets: mgmt, prod, node

- List and view the network subnets defined in the cluster:

BIC-Isilon-Cluster-4# isi network subnets list

ID Subnet Gateway|Priority Pools SC Service

groupnet0.mgmt 172.16.10.0/24 172.16.10.1|2 mgmt 0.0.0.0

groupnet0.node 172.16.20.0/23 172.16.20.1|3 pool1 172.16.20.232

groupnet0.prod 132.206.178.0/24 132.206.178.1|1 pool0 132.206.178.232

------------------------------------------------------------------------

Total: 3

- List and view the network pools defined in the cluster.

- Note that the IP allocation for the pool groupnet0.prod.pool0 is set to dynamic. This requires a SmartConnect Advanced license.

BIC-Isilon-Cluster-4# isi network pools list

ID SC Zone Allocation Method

groupnet0.mgmt.mgmt mgmt.isi.bic.mni.mcgill.ca static

groupnet0.node.pool1 nfs.isi-node.bic.mni.mcgill.ca dynamic

groupnet0.prod.pool0 nfs.isi.bic.mni.mcgill.ca dynamic

----------------------------------------------------------------------

Total: 3

BIC-Isilon-Cluster-4# isi network pools view groupnet0.mgmt.mgmt

ID: groupnet0.mgmt.mgmt

Groupnet: groupnet0

Subnet: mgmt

Name: mgmt

Rules: -

Access Zone: System

Allocation Method: static

Aggregation Mode: lacp

SC Suspended Nodes: -

Description: -

Ifaces: 1:ext-1, 2:ext-1, 4:ext-1, 3:ext-1, 5:ext-1

IP Ranges: 172.16.10.20-172.16.10.24

Rebalance Policy: auto

SC Auto Unsuspend Delay: 0

SC Connect Policy: round_robin

SC Zone: mgmt.isi.bic.mni.mcgill.ca

SC DNS Zone Aliases: -

SC Failover Policy: round_robin

SC Subnet: prod

SC Ttl: 0

Static Routes: -

BIC-Isilon-Cluster-4# isi network pools view groupnet0.prod.pool0

ID: groupnet0.prod.pool0

Groupnet: groupnet0

Subnet: prod

Name: pool0

Rules: -

Access Zone: prod

Allocation Method: dynamic

Aggregation Mode: lacp

SC Suspended Nodes: -

Description: -

Ifaces: 1:10gige-agg-1, 2:10gige-agg-1, 4:10gige-agg-1, 3:10gige-agg-1, 5:10gige-agg-1

IP Ranges: 132.206.178.233-132.206.178.237

Rebalance Policy: auto

SC Auto Unsuspend Delay: 0

SC Connect Policy: round_robin

SC Zone: nfs.isi.bic.mni.mcgill.ca

SC DNS Zone Aliases: -

SC Failover Policy: round_robin

SC Subnet: prod

SC Ttl: 0

Static Routes: -

BIC-Isilon-Cluster-2# isi network pools view groupnet0.node.pool1

ID: groupnet0.node.pool1

Groupnet: groupnet0

Subnet: node

Name: pool1

Rules: -

Access Zone: prod

Allocation Method: dynamic

Aggregation Mode: lacp

SC Suspended Nodes: -

Description: -

Ifaces: 1:10gige-agg-1, 2:10gige-agg-1, 4:10gige-agg-1, 3:10gige-agg-1, 5:10gige-agg-1

IP Ranges: 172.16.20.233-172.16.20.237

Rebalance Policy: auto

SC Auto Unsuspend Delay: 0

SC Connect Policy: round_robin

SC Zone: nfs.isi-node.bic.mni.mcgill.ca

SC DNS Zone Aliases: -

SC Failover Policy: round_robin

SC Subnet: node

SC Ttl: 0

Static Routes: -

- Display network interfaces configuration:

BIC-Isilon-Cluster-4# isi network interfaces list

LNN Name Status Owners IP Addresses

--------------------------------------------------------------------

1 10gige-1 Up - -

1 10gige-2 Up - -

1 10gige-agg-1 Up groupnet0.prod.pool0 132.206.178.237

groupnet0.node.pool1 172.16.20.237

1 ext-1 Up groupnet0.mgmt.mgmt 172.16.10.20

1 ext-2 No Carrier - -

1 ext-agg Not Available - -

2 10gige-1 Up - -

2 10gige-2 Up - -

2 10gige-agg-1 Up groupnet0.prod.pool0 132.206.178.236

groupnet0.node.pool1 172.16.20.236

2 ext-1 Up groupnet0.mgmt.mgmt 172.16.10.21

2 ext-2 No Carrier - -

2 ext-agg Not Available - -

3 10gige-1 Up - -

3 10gige-2 Up - -

3 10gige-agg-1 Up groupnet0.prod.pool0 132.206.178.234

groupnet0.node.pool1 172.16.20.234

3 ext-1 Up groupnet0.mgmt.mgmt 172.16.10.22

3 ext-2 No Carrier - -

3 ext-agg Not Available - -

4 10gige-1 Up - -

4 10gige-2 Up - -

4 10gige-agg-1 Up groupnet0.prod.pool0 132.206.178.235

groupnet0.node.pool1 172.16.20.235

4 ext-1 Up groupnet0.mgmt.mgmt 172.16.10.23

4 ext-2 No Carrier - -

4 ext-agg Not Available - -

5 10gige-1 Up - -

5 10gige-2 Up - -

5 10gige-agg-1 Up groupnet0.prod.pool0 132.206.178.233

groupnet0.node.pool1 172.16.20.233

5 ext-1 Up groupnet0.mgmt.mgmt 172.16.10.24

5 ext-2 No Carrier - -

5 ext-agg Not Available - -

--------------------------------------------------------------------

Total: 30
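The second pool membership of each 10gige-agg-1 interface wraps onto a continuation line that has no LNN or interface name. This minimal sketch flattens the table into one `lnn iface pool ip` row per address. It assumes the layout shown above. Two-word statuses such as `No Carrier` are handled by reading the pool and IP from the last two columns. `iface_ips` is a hypothetical name.

```bash
#!/usr/bin/env bash
# iface_ips: read `isi network interfaces list` on stdin and print one
# "lnn iface pool ip" line per IP address, carrying the LNN, interface and
# pool forward onto continuation lines.
iface_ips() {
    awk '
        function is_ip(s) { return s ~ /^[0-9]+\.[0-9]+\.[0-9]+\.[0-9]+$/ }
        $1 ~ /^[0-9]+$/ && NF >= 5 {          # full row: LNN Name Status Owners IPs
            lnn = $1; iface = $2
            pool = $(NF - 1); ip = $NF
            if (is_ip(ip)) print lnn, iface, pool, ip
            next
        }
        NF == 2 && is_ip($2) { pool = $1; print lnn, iface, pool, $2; next }
        NF == 1 && is_ip($1) { print lnn, iface, pool, $1 }
    '
}
```

For example, `isi network interfaces list | iface_ips | awk '$3 == "groupnet0.node.pool1"'` would list the pool1 address held by each node.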

- Suspend or resume a node:

From the docu65065_OneFS-8.0.0-CLI-Administration-Guide, page 950:

Suspend or resume a node

You can suspend and resume SmartConnect DNS query responses on a node.

Procedure

1. To suspend DNS query responses for a node:

a. (Optional) To identify a list of nodes and IP address pools, run the following command:

isi network interfaces list

b. Run the isi network pools sc-suspend-nodes command and specify the pool ID and logical node number (LNN).

Specify the pool ID you want in the following format:

<groupnet_name>.<subnet_name>.<pool_name>

The following command suspends DNS query responses on node 3 when queries come through IP addresses in pool5 under groupnet1.subnet3:

isi network pools sc-suspend-nodes groupnet1.subnet3.pool5 3

2. To resume DNS query responses for an IP address pool, run the isi network pools sc-resume-nodes command and specify the pool ID and logical node number (LNN).

The following command resumes DNS query responses on node 3 when queries come through IP addresses in pool5 under groupnet1.subnet3:

isi network pools sc-resume-nodes groupnet1.subnet3.pool5 3
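On this cluster every node sits in both data pools, groupnet0.prod.pool0 and groupnet0.node.pool1. Taking a node out of DNS rotation before servicing it therefore means suspending it in each pool. Below is a minimal wrapper built only on the two commands quoted above. `sc_nodes` is a hypothetical name, and the default pool list is hard-coded from the pool views earlier in these notes.

```bash
#!/usr/bin/env bash
# sc_nodes ACTION LNN [POOL...]
# ACTION is "suspend" or "resume". Runs `isi network pools sc-ACTION-nodes`
# for LNN on every listed pool; with no pools given, it defaults to the two
# NFS data pools of this cluster (an assumption: adjust if the pools change).
sc_nodes() {
    if [ "$#" -lt 2 ]; then
        echo "usage: sc_nodes suspend|resume LNN [POOL...]" >&2
        return 2
    fi
    local action=$1 lnn=$2 pool
    shift 2
    case $action in
        suspend|resume) ;;
        *) echo "sc_nodes: unknown action '$action'" >&2; return 2 ;;
    esac
    if [ "$#" -eq 0 ]; then
        set -- groupnet0.prod.pool0 groupnet0.node.pool1
    fi
    for pool in "$@"; do
        isi network pools "sc-${action}-nodes" "$pool" "$lnn" || return 1
    done
}
```

Run `sc_nodes suspend 5` before pulling node 5 and `sc_nodes resume 5` afterwards. The `SC Suspended Nodes` field in `isi network pools view <pool>` should reflect the change.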

Example of an IP Failover with Dynamic Allocation Method

- First, set the dynamic IP allocation for the pool:

isi network pools modify groupnet0.prod.pool0 --alloc-method=dynamic

- Then pull the fiber cables from one node, say node 5, and watch what happens:

- Before pulling the cables:

BIC-Isilon-Cluster-4# isi network interfaces list

...

3 10gige-1 Up - -

3 10gige-2 Up - -

3 10gige-agg-1 Up groupnet0.prod.pool0 132.206.178.235

3 ext-1 Up groupnet0.mgmt.mgmt 172.16.10.22

3 ext-2 No Carrier - -

3 ext-agg Not Available - -

4 10gige-1 Up - -

...

5 10gige-1 Up - -

5 10gige-2 Up - -

5 10gige-agg-1 Up groupnet0.prod.pool0 132.206.178.237

5 ext-1 Up groupnet0.mgmt.mgmt 172.16.10.24

5 ext-2 No Carrier - -

5 ext-agg Not Available - -

--------------------------------------------------------------------

Total: 30

- After.

- Node 5's external network interfaces 10gige-1, 10gige-2 and 10gige-agg-1 now display No Carrier.

- Note how node 3's external network interface 10gige-agg-1 picked up node 5's IP, 132.206.178.237.

BIC-Isilon-Cluster-4# isi network interfaces list

LNN Name Status Owners IP Addresses

...

3 10gige-1 Up - -

3 10gige-2 Up - -

3 10gige-agg-1 Up groupnet0.prod.pool0 132.206.178.235

132.206.178.237

3 ext-1 Up groupnet0.mgmt.mgmt 172.16.10.22

3 ext-2 No Carrier - -

3 ext-agg Not Available - -

...

5 10gige-1 No Carrier - -

5 10gige-2 No Carrier - -

5 10gige-agg-1 No Carrier groupnet0.prod.pool0 -

5 ext-1 Up groupnet0.mgmt.mgmt 172.16.10.24

5 ext-2 No Carrier - -

5 ext-agg Not Available - -
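The failover above shows up in two ways. One interface holds two addresses from groupnet0.prod.pool0, with the second on a bare continuation line. Node 5's aggregate keeps its pool membership but has no address. This minimal sketch flags both cases for a given pool, assuming the layout shown above. `pool_ip_report` is a hypothetical name.

```bash
#!/usr/bin/env bash
# pool_ip_report POOL: read `isi network interfaces list` on stdin and report,
# for POOL, interfaces holding more than one IP (they picked up a failed-over
# address) and member interfaces holding none.
pool_ip_report() {
    awk -v want="$1" '
        function is_ip(s) { return s ~ /^[0-9]+\.[0-9]+\.[0-9]+\.[0-9]+$/ }
        # remember, in first-seen order, each interface that belongs to POOL
        function reg() {
            if (pool == want && !(key in n)) { n[key] = 0; order[++cnt] = key }
        }
        function add(ip) {
            reg()
            if (pool == want) { n[key]++; ips[key] = ips[key] " " ip }
        }
        $1 ~ /^[0-9]+$/ && NF >= 5 {          # full row: LNN Name Status Owners IPs
            key = $1 ":" $2; pool = $(NF - 1)
            reg()
            if (is_ip($NF)) add($NF)
            next
        }
        NF == 2 && is_ip($2) { pool = $1; add($2); next }   # "pool ip" continuation
        NF == 1 && is_ip($1) { add($1) }                     # bare "ip" continuation
        END {
            for (i = 1; i <= cnt; i++) {
                k = order[i]
                if (n[k] > 1)       print k, "holds" ips[k]
                else if (n[k] == 0) print k, "holds no address"
            }
        }
    '
}
```

Fed the "after" listing, `pool_ip_report groupnet0.prod.pool0` would print `3:10gige-agg-1 holds 132.206.178.235 132.206.178.237` and `5:10gige-agg-1 holds no address`. Before the failover it prints nothing.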

How To Add a Subnet to a Cluster

- Goal: to have clients access the Isilon cluster through the private network 172.16.20.0/24 (data network).

- Hosts in the arnodes compute cluster have 2 extra bonded NICs that are configured on this network.

- The private network 172.16.20.0/24 is directly attached to the cluster's front-end: there are no intervening gateways or routers in between.

- This section explains how to configure the Isilon cluster so that clients on 172.16.20.0/24 are granted NFS access.

- Current network cluster state:

BIC-Isilon-Cluster-4# isi network interfaces ls

LNN Name Status Owners IP Addresses

--------------------------------------------------------------------

1 10gige-1 Up - -

1 10gige-2 Up - -

1 10gige-agg-1 Up groupnet0.prod.pool0 132.206.178.237

1 ext-1 Up groupnet0.mgmt.mgmt 172.16.10.20

1 ext-2 No Carrier - -

1 ext-agg Not Available - -

2 10gige-1 Up - -

2 10gige-2 Up - -

2 10gige-agg-1 Up groupnet0.prod.pool0 132.206.178.236

2 ext-1 Up groupnet0.mgmt.mgmt 172.16.10.21

2 ext-2 No Carrier - -

2 ext-agg Not Available - -

3 10gige-1 Up - -

3 10gige-2 Up - -

3 10gige-agg-1 Up groupnet0.prod.pool0 132.206.178.233

3 ext-1 Up groupnet0.mgmt.mgmt 172.16.10.22

3 ext-2 No Carrier - -

3 ext-agg Not Available - -

4 10gige-1 Up - -

4 10gige-2 Up - -

4 10gige-agg-1 Up groupnet0.prod.pool0 132.206.178.234

4 ext-1 Up groupnet0.mgmt.mgmt 172.16.10.23

4 ext-2 No Carrier - -

4 ext-agg Not Available - -

5 10gige-1 Up - -

5 10gige-2 Up - -

5 10gige-agg-1 Up groupnet0.prod.pool0 132.206.178.235

5 ext-1 Up groupnet0.mgmt.mgmt 172.16.10.24

5 ext-2 No Carrier - -

5 ext-agg Not Available - -

--------------------------------------------------------------------

BIC-Isilon-Cluster-4# isi network pools ls

ID SC Zone Allocation Method

----------------------------------------------------------------------

groupnet0.mgmt.mgmt mgmt.isi.bic.mni.mcgill.ca static

groupnet0.prod.pool0 nfs.isi.bic.mni.mcgill.ca dynamic

----------------------------------------------------------------------

Total: 2

BIC-Isilon-Cluster-4# isi network subnets ls

ID Subnet Gateway|Priority Pools SC Service

------------------------------------------------------------------------

groupnet0.mgmt 172.16.10.0/24 172.16.10.1|2 mgmt 0.0.0.0

groupnet0.prod 132.206.178.0/24 132.206.178.1|1 pool0 132.206.178.232

------------------------------------------------------------------------

Total: 2

- It is faster and easier to configure this by using the WebUI rather than the CLI.

- Essentially, it boils down to the following actions:

- Create a new subnet called node in the default groupnet groupnet0.

- Set the SmartConnect (SC) Service IP to 172.16.20.232.

- Update the domain master DNS server with the new delegation record and "glue" record. More on this later.


BIC-Isilon-Cluster-4# isi network subnets view groupnet0.node

ID: groupnet0.node

Name: node

Groupnet: groupnet0

Pools: pool1

Addr Family: ipv4

Base Addr: 172.16.20.0

CIDR: 172.16.20.0/24

Description: -

Dsr Addrs: -

Gateway: 172.16.20.1

Gateway Priority: 3

MTU: 1500

Prefixlen: 24

Netmask: 255.255.255.0

Sc Service Addr: 172.16.20.232

VLAN Enabled: False

VLAN ID: -

- Create a new pool called pool1 with the following properties:

- Access zone is set to prod, like the pool pool0.

- Allocation method is dynamic.

- Select the 10gige aggregate interfaces from each node.

- Set the SmartConnect connect policy to round-robin.

- Best practices might require setting it to cpu or network utilization for NFSv4. Benchmarking should help.

- Name the SmartConnect zone nfs.isi-node.bic.mni.mcgill.ca.

- Proper records for the new zone will have to be set in the master domain DNS server. More on this later.


BIC-Isilon-Cluster-4# isi network pools view groupnet0.node.pool1

ID: groupnet0.node.pool1

Groupnet: groupnet0

Subnet: node

Name: pool1

Rules: -

Access Zone: prod

Allocation Method: dynamic

Aggregation Mode: lacp

SC Suspended Nodes: -

Description: -

Ifaces: 1:10gige-agg-1, 2:10gige-agg-1, 4:10gige-agg-1, 3:10gige-agg-1, 5:10gige-agg-1

IP Ranges: 172.16.20.233-172.16.20.237

Rebalance Policy: auto

SC Auto Unsuspend Delay: 0

SC Connect Policy: round_robin

SC Zone: nfs.isi-node.bic.mni.mcgill.ca

SC DNS Zone Aliases: -

SC Failover Policy: round_robin

SC Subnet: node

SC Ttl: 0

Static Routes: -
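Before creating the pool, it is worth checking that the SmartConnect service IP and the pool range actually fall inside the subnet's CIDR. The node subnet shows up as 172.16.20.0/24 in the view above but as 172.16.20.0/23 in the `isi network subnets list` output earlier in these notes. Below is a minimal pure-bash sketch. `ip2int` and `in_cidr` are hypothetical helper names.

```bash
#!/usr/bin/env bash
# ip2int A.B.C.D: print the address as a 32-bit integer.
ip2int() {
    local IFS=. a b c d
    read -r a b c d <<< "$1"
    echo "$(( (a << 24) | (b << 16) | (c << 8) | d ))"
}

# in_cidr IP NET/PREFIX: exit status 0 if IP lies inside NET/PREFIX.
# Bash arithmetic is 64-bit, so the mask is clipped back to 32 bits.
in_cidr() {
    local ip net prefix mask
    ip=$(ip2int "$1")
    net=$(ip2int "${2%/*}")
    prefix=${2#*/}
    mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
    (( (ip & mask) == (net & mask) ))
}
```

For example, `for ip in 172.16.20.232 172.16.20.233 172.16.20.237; do in_cidr "$ip" 172.16.20.0/24 && echo "$ip ok"; done` reports all three as ok. They also pass against the /23.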

- With this in place, the cluster network interfaces settings will be:

BIC-Isilon-Cluster-4# isi network interfaces ls

LNN Name Status Owners IP Addresses

--------------------------------------------------------------------

1 10gige-1 Up - -

1 10gige-2 Up - -

1 10gige-agg-1 Up groupnet0.prod.pool0 132.206.178.237

groupnet0.node.pool1 172.16.20.237

1 ext-1 Up groupnet0.mgmt.mgmt 172.16.10.20

1 ext-2 No Carrier - -

1 ext-agg Not Available - -

2 10gige-1 Up - -

2 10gige-2 Up - -

2 10gige-agg-1 Up groupnet0.prod.pool0 132.206.178.236

groupnet0.node.pool1 172.16.20.236

2 ext-1 Up groupnet0.mgmt.mgmt 172.16.10.21

2 ext-2 No Carrier - -

2 ext-agg Not Available - -

3 10gige-1 Up - -

3 10gige-2 Up - -

3 10gige-agg-1 Up groupnet0.prod.pool0 132.206.178.233

groupnet0.node.pool1 172.16.20.234

3 ext-1 Up groupnet0.mgmt.mgmt 172.16.10.22

3 ext-2 No Carrier - -

3 ext-agg Not Available - -

4 10gige-1 Up - -

4 10gige-2 Up - -

4 10gige-agg-1 Up groupnet0.prod.pool0 132.206.178.234

groupnet0.node.pool1 172.16.20.235

4 ext-1 Up groupnet0.mgmt.mgmt 172.16.10.23

4 ext-2 No Carrier - -

4 ext-agg Not Available - -

5 10gige-1 Up - -

5 10gige-2 Up - -

5 10gige-agg-1 Up groupnet0.prod.pool0 132.206.178.235

groupnet0.node.pool1 172.16.20.233

5 ext-1 Up groupnet0.mgmt.mgmt 172.16.10.24

5 ext-2 No Carrier - -

5 ext-agg Not Available - -

--------------------------------------------------------------------

Total: 30

- A few notes about the above:

- Because the initial cluster configuration was sloppy, the LNNs (Logical Node Numbers) and Node IDs don't match.

- This explains why some 10gige-agg interfaces have different last octets in pool0 and pool1.

- Ultimately, the LNNs and Node IDs should be re-assigned to match the nodes' positions in the rack.

- This would avoid potential mistakes when updating or servicing the cluster.

- Current setting:

BIC-Isilon-Cluster-4# isi config

Welcome to the Isilon IQ configuration console.

Copyright (c) 2001-2016 EMC Corporation. All Rights Reserved.

Enter 'help' to see list of available commands.

Enter 'help <command>' to see help for a specific command.

Enter 'quit' at any prompt to discard changes and exit.

Node build: Isilon OneFS v8.0.0.1 B_MR_8_0_0_1_131(RELEASE)

Node serial number: SX410-301608-0264

BIC-Isilon-Cluster >>> lnnset

LNN Device ID Cluster IP

----------------------------------------

1 1 10.0.3.1

2 2 10.0.3.2

3 4 10.0.3.4

4 3 10.0.3.3

5 6 10.0.3.5

BIC-Isilon-Cluster >>> exit

BIC-Isilon-Cluster-4#
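The mismatched rows can be picked out of the `lnnset` table mechanically. This is a minimal sketch that assumes the three-column layout shown above (LNN, Device ID, Cluster IP). Because `lnnset` lives inside the interactive `isi config` console, the simplest input is the table pasted from the terminal. `lnn_mismatches` is a hypothetical name.

```bash
#!/usr/bin/env bash
# lnn_mismatches: read the `lnnset` table on stdin and print every row whose
# LNN differs from its device ID; header and separator rows are skipped
# because their first two fields are not both numbers.
lnn_mismatches() {
    awk '$1 ~ /^[0-9]+$/ && $2 ~ /^[0-9]+$/ && $1 != $2 {
        printf "LNN %s is device ID %s (%s)\n", $1, $2, $3
    }'
}
```

On the table above, it reports LNNs 3, 4 and 5 (device IDs 4, 3 and 6).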

- The domain DNS configuration must be updated:

- The new zone delegation for the SmartConnect zone isi-node.bic.mni.mcgill.ca. has to be put in place.

- A new glue record must be created for the SSIP (SmartConnect Service IP) of the delegated zone.


; glue record

sip-node.bic.mni.mcgill.ca. IN A 172.16.20.232

; zone delegation

isi-node.bic.mni.mcgill.ca. IN NS sip-node.bic.mni.mcgill.ca.

- Verify that the SC Zone nfs.isi-node.bic.mni.mcgill.ca resolves properly and in a round-robin way.

- Check both on the cluster and on a client:

malin@login1:~$ dig +short nfs.isi-node.bic.mni.mcgill.ca

172.16.20.236

malin@login1:~$ dig +short nfs.isi-node.bic.mni.mcgill.ca

172.16.20.237

malin@login1:~$ dig +short nfs.isi-node.bic.mni.mcgill.ca

172.16.20.233

malin@login1:~$ dig +short nfs.isi-node.bic.mni.mcgill.ca

172.16.20.234

malin@login1:~$ dig +short nfs.isi-node.bic.mni.mcgill.ca

172.16.20.235

malin@login1:~$ dig +short nfs.isi-node.bic.mni.mcgill.ca

172.16.20.236

BIC-Isilon-Cluster-4# dig +short nfs.isi-node.bic.mni.mcgill.ca

172.16.20.237

BIC-Isilon-Cluster-4# dig +short nfs.isi-node.bic.mni.mcgill.ca

172.16.20.233

BIC-Isilon-Cluster-4# dig +short nfs.isi-node.bic.mni.mcgill.ca

172.16.20.234

BIC-Isilon-Cluster-4# dig +short nfs.isi-node.bic.mni.mcgill.ca

172.16.20.235

BIC-Isilon-Cluster-4# dig +short nfs.isi-node.bic.mni.mcgill.ca

172.16.20.236

BIC-Isilon-Cluster-4# dig +short nfs.isi-node.bic.mni.mcgill.ca

172.16.20.237

- It works!
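The manual `dig` loop above can be scripted. The script resolves the SmartConnect zone a number of times, collects the answers and checks that every address in the pool came back at least once. This is a minimal sketch, assuming `dig` is available on the host. `check_sc_rr` is a hypothetical name, and the expected list is pool1's range 172.16.20.233-172.16.20.237.

```bash
#!/usr/bin/env bash
# check_sc_rr ZONE COUNT IP...: resolve ZONE COUNT times with `dig +short` and
# report any expected IP that was never returned. Exit 0 if all were seen.
check_sc_rr() {
    local zone=$1 count=$2 i ip missing=0
    shift 2
    local seen=""
    for ((i = 0; i < count; i++)); do
        # collect every answer, space-separated, so whole addresses can be matched
        seen+=" $(dig +short "$zone" | tr '\n' ' ')"
    done
    for ip in "$@"; do
        case " $seen " in
            *" $ip "*) ;;
            *) echo "never returned: $ip"; missing=1 ;;
        esac
    done
    return "$missing"
}
```

`check_sc_rr nfs.isi-node.bic.mni.mcgill.ca 10 172.16.20.{233..237}` returns 0 when all five addresses were handed out and names the missing ones otherwise. Ten queries for five addresses leave some slack in case other clients' queries interleave with these.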

FileSystems and Access Zones

- There are 2 access zones defined.

- Default zone is System: it must exist and cannot be deleted.

- The access zone called prod will be used to hold the user data.

- Both zones have lsa-nis-provider:BIC as Auth Providers.

- See the NIS section below: this might create a security weakness.

BIC-Isilon-Cluster-4# isi zone zones list

Name Path

------------

System /ifs

prod /ifs

------------

Total: 2

BIC-Isilon-Cluster-4# isi zone view System

Name: System

Path: /ifs

Groupnet: groupnet0

Map Untrusted: -

Auth Providers: lsa-nis-provider:BIC, lsa-file-provider:System, lsa-local-provider:System

NetBIOS Name: -

User Mapping Rules: -

Home Directory Umask: 0077

Skeleton Directory: /usr/share/skel

Cache Entry Expiry: 4H

Zone ID: 1

BIC-Isilon-Cluster-4# isi zone view prod

Name: prod

Path: /ifs

Groupnet: groupnet0

Map Untrusted: -

Auth Providers: lsa-nis-provider:BIC, lsa-local-provider:prod, lsa-file-provider:System

NetBIOS Name: -

User Mapping Rules: -

Home Directory Umask: 0077

Skeleton Directory: /usr/share/skel

Cache Entry Expiry: 4H

Zone ID: 2

- Another, more concise way to display the defined access zones:

BIC-Isilon-Cluster-4# isi zone list -v

Name: System

Path: /ifs

Groupnet: groupnet0

Map Untrusted: -

Auth Providers: lsa-nis-provider:BIC, lsa-file-provider:System, lsa-local-provider:System

NetBIOS Name: -

User Mapping Rules: -

Home Directory Umask: 0077

Skeleton Directory: /usr/share/skel

Cache Entry Expiry: 4H

Zone ID: 1

--------------------------------------------------------------------------------

Name: prod

Path: /ifs

Groupnet: groupnet0

Map Untrusted: -

Auth Providers: lsa-nis-provider:BIC, lsa-local-provider:prod, lsa-file-provider:System

NetBIOS Name: -

User Mapping Rules: -

Home Directory Umask: 0077

Skeleton Directory: /usr/share/skel

Cache Entry Expiry: 4H

Zone ID: 2

NFS, NIS: Exports and Aliases.

- There seems to be something amiss with NIS and OneFS v8.0.

- The System access zone had to be given NIS authentication, as otherwise only numerical UIDs and GIDs show up on the /ifs/data filesystem.

- There might be a potential security weakness there.

- See https://community.emc.com/thread/193468?start=0&tstart=0 even though this thread is for v7.2

- Created /etc/netgroup with “+” in it on one node as suggested in the post above and somehow OneFS propagated it to the other nodes.

- List the NIS auth providers:

BIC-Isilon-Cluster-4# isi auth nis list

Name NIS Domain Servers Status

-----------------------------------------

BIC vamana 132.206.178.227 online

132.206.178.243

-----------------------------------------

Total: 1

BIC-Isilon-Cluster-1# isi auth nis view BIC

Name: BIC

NIS Domain: vamana

Servers: 132.206.178.227, 132.206.178.243

Status: online

Authentication: Yes

Balance Servers: Yes

Check Online Interval: 3m

Create Home Directory: No

Enabled: Yes

Enumerate Groups: Yes

Enumerate Users: Yes

Findable Groups: -

Findable Users: -

Group Domain: NIS_GROUPS

Groupnet: groupnet0

Home Directory Template: -

Hostname Lookup: Yes

Listable Groups: -

Listable Users: -

Login Shell: /bin/bash

Normalize Groups: No

Normalize Users: No

Provider Domain: -

Ntlm Support: all

Request Timeout: 20

Restrict Findable: Yes

Restrict Listable: No

Retry Time: 5

Unfindable Groups: wheel, 0, insightiq, 15, isdmgmt, 16

Unfindable Users: root, 0, insightiq, 15, isdmgmt, 16

Unlistable Groups: -

Unlistable Users: -

User Domain: NIS_USERS

Ypmatch Using Tcp: No

- Show the exports for the zone prod:

BIC-Isilon-Cluster-1# isi nfs exports list --zone prod

ID Zone Paths Description

---------------------------------

1 prod /ifs/data -

---------------------------------

Total: 1

BIC-Isilon-Cluster-1# isi nfs exports view 1 --zone prod

ID: 1

Zone: prod

Paths: /ifs/data

Description: -

Clients: 132.206.178.0/24

Root Clients: -

Read Only Clients: -

Read Write Clients: -

All Dirs: No

Block Size: 8.0k

Can Set Time: Yes

Case Insensitive: No

Case Preserving: Yes

Chown Restricted: No

Commit Asynchronous: No

Directory Transfer Size: 128.0k

Encoding: DEFAULT

Link Max: 32767

Map Lookup UID: No

Map Retry: Yes

Map Root

Enabled: True

User: nobody

Primary Group: -

Secondary Groups: -

Map Non Root

Enabled: False

User: nobody

Primary Group: -

Secondary Groups: -

Map Failure

Enabled: False

User: nobody

Primary Group: -

Secondary Groups: -

Map Full: Yes

Max File Size: 8192.00000P

Name Max Size: 255

No Truncate: No

Read Only: No

Readdirplus: Yes

Readdirplus Prefetch: 10

Return 32Bit File Ids: No

Read Transfer Max Size: 1.00M

Read Transfer Multiple: 512

Read Transfer Size: 128.0k

Security Type: unix

Setattr Asynchronous: No

Snapshot: -

Symlinks: Yes

Time Delta: 1.0 ns

Write Datasync Action: datasync

Write Datasync Reply: datasync

Write Filesync Action: filesync

Write Filesync Reply: filesync

Write Unstable Action: unstable

Write Unstable Reply: unstable

Write Transfer Max Size: 1.00M

Write Transfer Multiple: 512

Write Transfer Size: 512.0k
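In the sessions below, only the Clients line of the export changes from one isi nfs exports view to the next. A minimal sketch, assuming the layout shown above: a hypothetical shell function, export_clients, that prints the export's clients one per line, which makes before/after comparisons (e.g. with diff) easier. It does not query the cluster itself; it only filters the text it is given.

```shell
# Hypothetical helper: list the clients of an NFS export, one per line,
# from "isi nfs exports view ID --zone ZONE" output read on stdin.
# Prints nothing when the Clients field is "-" (no clients set).
export_clients() {
    awk '
        { sub(/^[ \t]+/, "") }                 # drop alignment spaces
        index($0, "Clients: ") == 1 {          # skips "Root Clients:" etc.
            v = substr($0, 10)                 # text after "Clients: "
            if (v == "-") exit
            n = split(v, a, ", *")             # entries are comma separated
            for (i = 1; i <= n; i++) print a[i]
            exit
        }
    '
}
```

Usage: isi nfs exports view 1 --zone prod | export_clients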

- It doesn’t seem possible to directly list the netgroups defined on the NIS master.

- One can, however, list the members of a specific netgroup if one happens to know its name:

BIC-Isilon-Cluster-4# isi auth netgroups view xgeraid --recursive --provider nis:BIC

Netgroup: -

Domain: -

Hostname: edgar-xge.bic.mni.mcgill.ca

Username: -

--------------------------------------------------------------------------------

Netgroup: -

Domain: -

Hostname: gustav-xge.bic.mni.mcgill.ca

Username: -

--------------------------------------------------------------------------------

Netgroup: -

Domain: -

Hostname: tatania-xge.bic.mni.mcgill.ca

Username: -

--------------------------------------------------------------------------------

Netgroup: -

Domain: -

Hostname: tubal-xge.bic.mni.mcgill.ca

Username: -

--------------------------------------------------------------------------------

Netgroup: -

Domain: -

Hostname: tullus-xge.bic.mni.mcgill.ca

Username: -

--------------------------------------------------------------------------------

Netgroup: -

Domain: -

Hostname: tutor-xge.bic.mni.mcgill.ca

Username: -

BIC-Isilon-Cluster-1# isi auth netgroups view computecore

Netgroup: -

Domain: -

Hostname: thaisa

Username: -

--------------------------------------------------------------------------------

Netgroup: -

Domain: -

Hostname: vaux

Username: -

--------------------------------------------------------------------------------

Netgroup: -

Domain: -

Hostname: widow

Username: -

I can’t reproduce this behaviour anymore, so take it with a grain of salt! I’ll leave it in place for the moment, but it might go away soon…
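Whatever the status of the note above, when a netgroup view does return something, only the Hostname lines matter for NFS clients. A minimal sketch, assuming the "Hostname: value" layout shown above: a hypothetical shell function, netgroup_hosts, that reduces the view output to a plain list of hosts, skipping empty ("-") entries. It only filters text on stdin and makes no NIS or isi calls of its own.

```shell
# Hypothetical helper: print the host entries found in
# "isi auth netgroups view NAME --recursive" output read on stdin,
# one per line, skipping empty ("-") entries.
netgroup_hosts() {
    awk '
        { sub(/^[ \t]+/, "") }                 # drop alignment spaces
        index($0, "Hostname: ") == 1 {
            h = substr($0, 11)                 # text after "Hostname: "
            if (h != "-") print h
        }
    '
}
```

Usage: isi auth netgroups view xgeraid --recursive --provider nis:BIC | netgroup_hosts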

- Clients in netgroups must be specified with IP addresses; host names don’t work:

BIC-Isilon-Cluster-1# isi nfs exports modify 1 --clear-clients --zone prod

BIC-Isilon-Cluster-1# isi auth netgroups view isix --zone prod

Netgroup: -

Domain: -

Hostname: dromio.bic.mni.mcgill.ca

Username: -

BIC-Isilon-Cluster-1# isi nfs exports modify 1 --clients isix --zone prod

bad host dromio in netgroup isix, skipping

BIC-Isilon-Cluster-1# isi auth netgroups view xisi

Netgroup: -

Domain: -

Hostname: 132.206.178.51

Username: -

BIC-Isilon-Cluster-1# isi nfs exports modify 1 --add-clients xisi --zone prod

BIC-Isilon-Cluster-1# isi nfs exports view 1 --zone prod

ID: 1

Zone: prod

Paths: /ifs/data

Description: -

Clients: xisi

Root Clients: -

Read Only Clients: -

Read Write Clients: -

All Dirs: No

Block Size: 8.0k

Can Set Time: Yes

Case Insensitive: No

Case Preserving: Yes

Chown Restricted: No

Commit Asynchronous: No

Directory Transfer Size: 128.0k

Encoding: DEFAULT

Link Max: 32767

Map Lookup UID: No

Map Retry: Yes

Map Root

Enabled: True

User: nobody

Primary Group: -

Secondary Groups: -

Map Non Root

Enabled: False

User: nobody

Primary Group: -

Secondary Groups: -

Map Failure

Enabled: False

User: nobody

Primary Group: -

Secondary Groups: -

Map Full: Yes

Max File Size: 8192.00000P

Name Max Size: 255

No Truncate: No

Read Only: No

Readdirplus: Yes

Readdirplus Prefetch: 10

Return 32Bit File Ids: No

Read Transfer Max Size: 1.00M

Read Transfer Multiple: 512

Read Transfer Size: 128.0k

Security Type: unix

Setattr Asynchronous: No

Snapshot: -

Symlinks: Yes

Time Delta: 1.0 ns

Write Datasync Action: datasync

Write Datasync Reply: datasync

Write Filesync Action: filesync

Write Filesync Reply: filesync

Write Unstable Action: unstable

Write Unstable Reply: unstable

Write Transfer Max Size: 1.00M

Write Transfer Multiple: 512

Write Transfer Size: 512.0k
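Since "bad host dromio in netgroup isix, skipping" looks like a name-resolution failure, a quick pre-check on a node can show which netgroup host names the node's resolver can handle at all. A minimal sketch, assuming getent(1) is available on the node (it is on FreeBSD, which OneFS is based on) and that isi resolves names through roughly the same sources, which is an assumption I have not verified. The function name check_resolvable is hypothetical.

```shell
# Hypothetical pre-check: read host names on stdin (one per line) and
# report whether getent(1) can resolve each one on this machine.
# Returns non-zero if any name fails. IP addresses trigger a reverse
# lookup in getent, so feed it names rather than IPs.
check_resolvable() {
    rc=0
    while IFS= read -r host; do
        [ -z "$host" ] && continue             # skip blank lines
        if getent hosts "$host" >/dev/null 2>&1; then
            echo "ok   $host"
        else
            echo "FAIL $host"
            rc=1
        fi
    done
    return $rc
}
```

Usage: printf '%s\n' dromio dromio.bic.mni.mcgill.ca | check_resolvable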

Workaround To The Zone Exports Issue With Netgroups

- Netgroup entries in the NIS maps must be FQDNs: short names won’t work, even with the option --ignore-unresolvable-hosts.

- Modify the zone export by adding the option --ignore-unresolvable-hosts to the command:

isi nfs exports modify 1 --add-clients sgibic --ignore-unresolvable-hosts --zone prod
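Putting the observations in these notes together (IP addresses are accepted as is, FQDNs only with --ignore-unresolvable-hosts, short names not at all), a netgroup can be audited before adding it to an export. A minimal sketch: a hypothetical shell function, classify_netgroup_hosts, that labels each entry read on stdin. The IP test is deliberately crude (digits and dots only) and the categories reflect what I saw on OneFS v8.0, not documented behaviour.

```shell
# Hypothetical audit helper: label netgroup host entries (one per line on
# stdin) according to how they behaved here with
# "isi nfs exports modify ... --add-clients":
#   ipv4  - accepted as is
#   fqdn  - accepted only with --ignore-unresolvable-hosts
#   short - rejected ("bad host ..., skipping")
classify_netgroup_hosts() {
    while IFS= read -r h; do
        [ -z "$h" ] && continue
        case "$h" in
            *[!0-9.]*) ;;                      # has a non-digit/non-dot: a name
            *) echo "ipv4  $h"; continue ;;    # digits and dots only
        esac
        case "$h" in
            *.*) echo "fqdn  $h" ;;            # dotted name
            *)   echo "short $h" ;;            # bare host name
        esac
    done
}
```

Usage: printf '%s\n' 132.206.178.51 julia.bic.mni.mcgill.ca dromio | classify_netgroup_hosts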

BIC-Isilon-Cluster-3# isi auth netgroups view sgibic --recursive --provider nis:BIC

Netgroup: -

Domain: -

Hostname: julia.bic.mni.mcgill.ca

Username: -

--------------------------------------------------------------------------------

Netgroup: -

Domain: -

Hostname: luciana.bic.mni.mcgill.ca

Username: -

--------------------------------------------------------------------------------

Netgroup: -

Domain: -

Hostname: mouldy.bic.mni.mcgill.ca

Username: -

--------------------------------------------------------------------------------

Netgroup: -

Domain: -

Hostname: vaux.bic.mni.mcgill.ca

Username: -

BIC-Isilon-Cluster-3# isi nfs exports view 1 --zone prod

ID: 1

Zone: prod

Paths: /ifs/data

Description: -

Clients: isix, xisi

Root Clients: -

Read Only Clients: -

Read Write Clients: -

All Dirs: No

Block Size: 8.0k

Can Set Time: Yes

Case Insensitive: No

Case Preserving: Yes

Chown Restricted: No

Commit Asynchronous: No

Directory Transfer Size: 128.0k

Encoding: DEFAULT

Link Max: 32767

Map Lookup UID: No

Map Retry: Yes

Map Root

Enabled: True

User: nobody

Primary Group: -

Secondary Groups: -

Map Non Root

Enabled: False

User: nobody

Primary Group: -

Secondary Groups: -

Map Failure

Enabled: False

User: nobody

Primary Group: -

Secondary Groups: -

Map Full: Yes

Max File Size: 8192.00000P

Name Max Size: 255

No Truncate: No

Read Only: No

Readdirplus: Yes

Readdirplus Prefetch: 10

Return 32Bit File Ids: No

Read Transfer Max Size: 1.00M

Read Transfer Multiple: 512

Read Transfer Size: 128.0k

Security Type: unix

Setattr Asynchronous: No

Snapshot: -

Symlinks: Yes

Time Delta: 1.0 ns

Write Datasync Action: datasync

Write Datasync Reply: datasync

Write Filesync Action: filesync

Write Filesync Reply: filesync

Write Unstable Action: unstable

Write Unstable Reply: unstable

Write Transfer Max Size: 1.00M

Write Transfer Multiple: 512

Write Transfer Size: 512.0k

BIC-Isilon-Cluster-3# isi nfs exports modify 1 --add-clients sgibic --zone prod

bad host julia in netgroup sgibic, skipping

BIC-Isilon-Cluster-3# isi nfs exports view 1 --zone prod

ID: 1

Zone: prod

Paths: /ifs/data

Description: -

Clients: isix, xisi

Root Clients: -

Read Only Clients: -

Read Write Clients: -

All Dirs: No

Block Size: 8.0k

Can Set Time: Yes

Case Insensitive: No

Case Preserving: Yes

Chown Restricted: No

Commit Asynchronous: No

Directory Transfer Size: 128.0k

Encoding: DEFAULT

Link Max: 32767

Map Lookup UID: No

Map Retry: Yes

Map Root

Enabled: True

User: nobody

Primary Group: -

Secondary Groups: -

Map Non Root

Enabled: False

User: nobody

Primary Group: -

Secondary Groups: -

Map Failure

Enabled: False

User: nobody

Primary Group: -

Secondary Groups: -

Map Full: Yes

Max File Size: 8192.00000P

Name Max Size: 255

No Truncate: No

Read Only: No

Readdirplus: Yes

Readdirplus Prefetch: 10

Return 32Bit File Ids: No

Read Transfer Max Size: 1.00M

Read Transfer Multiple: 512

Read Transfer Size: 128.0k

Security Type: unix

Setattr Asynchronous: No

Snapshot: -

Symlinks: Yes

Time Delta: 1.0 ns

Write Datasync Action: datasync

Write Datasync Reply: datasync

Write Filesync Action: filesync

Write Filesync Reply: filesync

Write Unstable Action: unstable

Write Unstable Reply: unstable

Write Transfer Max Size: 1.00M

Write Transfer Multiple: 512

Write Transfer Size: 512.0k

BIC-Isilon-Cluster-3# isi nfs exports modify 1 --add-clients sgibic --ignore-unresolvable-hosts --zone prod

BIC-Isilon-Cluster-3# isi nfs exports view 1 --zone prod

ID: 1

Zone: prod

Paths: /ifs/data

Description: -

Clients: isix, sgibic, xisi

Root Clients: -

Read Only Clients: -

Read Write Clients: -

All Dirs: No

Block Size: 8.0k

Can Set Time: Yes

Case Insensitive: No

Case Preserving: Yes

Chown Restricted: No