You are crazy if you use this. These are just my personal, scattered notes on setting up a cluster. DO NOT USE! You have been warned!

Pacemaker/Corosync Cluster + DRBD + STONITH + Xen


Packages Installation Base

ii drbd8-module-2.6.32-5-xen-amd64 2:8.3.8-0+2.6.32-30 RAID 1 over tcp/ip for Linux kernel module
ii drbd8-module-source 2:8.3.8-0 RAID 1 over tcp/ip for Linux module source
ii drbd8-utils 2:8.3.8-0 RAID 1 over tcp/ip for Linux utilities
ii libxenstore3.0 4.0.1-2 Xenstore communications library for Xen
ii linux-headers-2.6.32-5-common-xen 2.6.32-34squeeze1 Common header files for Linux 2.6.32-5-xen
ii linux-headers-2.6.32-5-xen-amd64 2.6.32-34squeeze1 Header files for Linux 2.6.32-5-xen-amd64
ii linux-image-2.6.32-5-xen-amd64 2.6.32-34squeeze1 Linux 2.6.32 for 64-bit PCs, Xen dom0 support
ii linux-headers-2.6.32-5-common-xen 2.6.32-35 Common header files for Linux 2.6.32-5-xen
ii linux-headers-2.6.32-5-xen-amd64 2.6.32-35 Header files for Linux 2.6.32-5-xen-amd64
ii linux-image-2.6.32-5-xen-amd64 2.6.32-35 Linux 2.6.32 for 64-bit PCs, Xen dom0 support
ii xen-hypervisor-4.0-amd64 4.0.1-2 The Xen Hypervisor on AMD64
ii xen-tools 4.2-1 Tools to manage Xen virtual servers
ii xen-utils-4.0 4.0.1-2 XEN administrative tools
ii xen-utils-common 4.0.0-1 XEN administrative tools - common files
ii xenstore-utils 4.0.1-2 Xenstore utilities for Xen
ii xenwatch 0.5.4-2 Virtualization utilities, mostly for Xen
ii libvirt-bin 0.8.3-5 the programs for the libvirt library
ii libvirt-doc 0.8.3-5 documentation for the libvirt library
ii libvirt0 0.8.3-5 library for interfacing with different virtualization systems
ii python-libvirt 0.8.3-5 libvirt Python bindings
ii virt-manager 0.8.4-8 desktop application for managing virtual machines
ii virt-viewer 0.2.1-1 Displaying the graphical console of a virtual machine
ii virtinst 0.500.3-2 Programs to create and clone virtual machines
ii ocfs2-tools 1.4.4-3 tools for managing OCFS2 cluster filesystems
ii ocfs2-tools-pacemaker 1.4.4-3 tools for managing OCFS2 cluster filesystems - pacemaker support
ii ocfs2console 1.4.4-3 tools for managing OCFS2 cluster filesystems - graphical interface
ii corosync 1.2.1-4 Standards-based cluster framework (daemon and modules)
ii pacemaker 1.0.9.1+hg15626-1 HA cluster resource manager
ii libopenais3 1.1.2-2 Standards-based cluster framework (libraries)
ii openais 1.1.2-2 Standards-based cluster framework (daemon and modules)
ii dlm-pcmk 3.0.12-2 Red Hat cluster suite - DLM pacemaker module
ii libdlm3 3.0.12-2 Red Hat cluster suite - distributed lock manager library
ii libdlmcontrol3 3.0.12-2 Red Hat cluster suite - distributed lock manager library
ii ipmitool 1.8.11-2 utility for IPMI control with kernel driver or LAN interface
ii libopenipmi0 2.0.16-1.2 Intelligent Platform Management Interface - runtime
ii openhpi-plugin-ipmidirect 2.14.1-1 OpenHPI plugin module for direct IPMI over LAN (RMCP) or SMI
ii openipmi 2.0.16-1.2 Intelligent Platform Management Interface (for servers)
ii libopenhpi2 2.14.1-1 OpenHPI libraries (runtime and support files)
ii openhpi-plugin-ipmidirect 2.14.1-1 OpenHPI plugin module for direct IPMI over LAN (RMCP) or SMI
ii openhpid 2.14.1-1 OpenHPI daemon, supports gathering of manageability information

Power and Physical network connections

The goal is to have no single point of failure (SPOF). Right off the bat there is one: both nodes have a single PSU (cash constraint!) so it is important to have all the power feeds as distributed as possible. The nodes are connected to an Automatic Transfer Switch (R3-ATS-SW-1 in rack-3) as are the network switches. There is still the possibility that one ATS fails…

helena: power feed from R9-ATS-SW-2 (APS-C and UPS-1500-1)

eth0 eth1 eth2 IPMI

132.206.178.60 10.0.0.2 192.168.1.19 192.168.1.17

(sw3-p17) p-to-p (sw4-p19) (sw7-p21)

puck: power feed from R3-ATS-SW-1 (APS-A and UPS-B)

eth0 eth1 eth2 IPMI

132.206.178.61 10.0.0.1 192.168.1.18 192.168.1.16

(sw6-p18) p-to-p (sw7-p23) (sw7-p22)

sw3: power feed from R9-ATS-SW-2 (APS-C and UPS-1500-1)

sw4: power feed from R3-ATS-SW-1 (APS-A and UPS-B)

sw7: power feed from R3-ATS-SW-1 (APS-A and UPS-B)

sw6: power feed from R3-ATS-SW-1 (APS-A and UPS-B)

Note the weakness:

- IPMI ports and one eth2 (one of puck’s corosync dual link) are on the same switch.

20150112. The above is obsolete and kept only for historical reasons and tracking. Both cluster nodes have been moved to their new location in Rack-A4-U33 and Rack-A4-U34. This entails a few changes to power and network connections. This is in flux and not the final layout. Stay tuned.

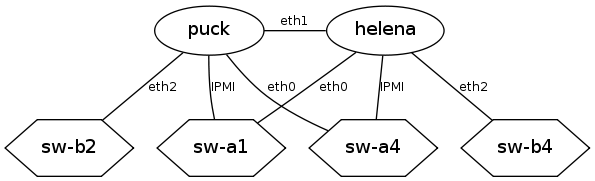

helena: power feed from ATS-A3-U26

eth0 eth1 eth2 IPMI

132.206.178.60 10.0.0.2 192.168.1.19 192.168.1.17

(SW-A1-p25) p-to-p (SW-B4-p50) (SW-A4-p17)

yellow yellow blue white

puck: power feed from ATS-A3-U26 ***

eth0 eth1 eth2 IPMI

132.206.178.61 10.0.0.1 192.168.1.18 192.168.1.16

(SW-A4-p08) p-to-p (SW-B2-p49) (SW-A1-p29)

yellow yellow blue white

SW-A1-U35 and SW-A4-U35: power feed from ATS-A3-U26 (UPS-A1-U1 + UPS-A1-U3)

SW-B2-U35 and SW-B4-U35: power feed from ATS-B2-U13 (UPS-B1-U1 + UPS-B1-U3)

A graphical view of the physical network connections between the nodes and network switches.

(:source:)
primitive resDRBDr0 ocf:linbit:drbd params drbd_resource="r0" op start interval="0" timeout="240s" op stop interval="0" timeout="120s" op monitor interval="20s" role="Master" timeout="240s" meta migration-threshold="3" failure-timeout="60s"
primitive resLVM0 ocf:heartbeat:LVM params volgrpname="vg0" op monitor interval="10s" timeout="60s" op start interval="0" timeout="60s" op stop interval="0" timeout="60s" meta migration-threshold="3" failure-timeout="60s"
primitive resXen0 ocf:heartbeat:Xen params xmfile="/etc/xen/matsya.bic.mni.mcgill.ca.cfg" name="matsya.bic.mni.mcgill.ca" op monitor interval="20s" timeout="60s" op start interval="0" timeout="90s" op stop interval="0" timeout="90s" meta migration-threshold="3" failure-timeout="60s"
ms msDRBDr0 grpDRBDr0 meta notify="true" interleave="true"
colocation colLVM0-on-DRBDMaster0 inf: resLVM0 msDRBDr0:Master
colocation colXen0-on-LVM0 inf: resXen0 resLVM0
order ordDRBDr0-before-LVM0 inf: msDRBDr0:promote resLVM0:start
order ordLVM0-before-Xen0 inf: resLVM0 resXen0
(:sourceend:)

Network Configuration

Stuff the following in the file /etc/network/interfaces for helena:

(:source:)
# local loopback
auto lo
iface lo inet loopback

# eth0 - wan
auto eth0
iface eth0 inet static
    address 132.206.178.60
    netmask 255.255.255.0
    broadcast 132.206.178.255
    gateway 132.206.178.1

# eth1 - drbd pt2pt replication link
auto eth1
iface eth1 inet static
    address 10.0.0.2
    netmask 255.0.0.0
    broadcast 10.0.0.255
    pointopoint 10.0.0.1

# eth2 - corosync ring
auto eth2
iface eth2 inet static
    address 192.168.1.19
    netmask 255.255.255.0
    broadcast 192.168.1.255
    gateway 192.168.1.1
(:sourceend:)

Make sure to change the relevant IP addresses for puck.
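When adapting the stanzas for puck it is easy to end up with an address, netmask and broadcast that do not agree. The following is a minimal sketch, not part of the original setup, that uses Python's standard ipaddress module to compute the broadcast address implied by each address/netmask pair listed above for helena. The helper name expected_broadcast is made up for this illustration.

```python
import ipaddress


def expected_broadcast(address: str, netmask: str) -> str:
    """Return the broadcast address implied by an address/netmask pair."""
    iface = ipaddress.ip_interface(f"{address}/{netmask}")
    return str(iface.network.broadcast_address)


# (interface, address, netmask, configured broadcast) as listed for helena
stanzas = [
    ("eth0", "132.206.178.60", "255.255.255.0", "132.206.178.255"),
    ("eth1", "10.0.0.2", "255.0.0.0", "10.0.0.255"),
    ("eth2", "192.168.1.19", "255.255.255.0", "192.168.1.255"),
]

for name, addr, mask, bcast in stanzas:
    implied = expected_broadcast(addr, mask)
    status = "ok" if implied == bcast else f"mismatch (netmask implies {implied})"
    print(f"{name}: {status}")
```

With the values above, eth1 is reported as a mismatch: a 255.0.0.0 netmask implies a broadcast of 10.255.255.255, whereas 10.0.0.255 corresponds to a 255.255.255.0 netmask. On a point-to-point link this is probably harmless, but it is worth knowing about.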

Logical Volume Manager (LVM) Configuration

As explained in the DRBD user guide at http://www.drbd.org/users-guide-legacy/s-nested-lvm.html it is possible, if slightly advanced, to both use Logical Volumes as backing devices for DRBD and at the same time use a DRBD device itself as a Physical Volume (PV). In order to enable this configuration, follow these steps:

- Set an appropriate filter option in your /etc/lvm/lvm.conf:

(:source:)
filter = [ "a|/dev/drbd.*|", "a|/dev/md0|", "a|/dev/md/0|", "r|.*|" ]
(:sourceend:)

This filter expression accepts PV signatures found on any DRBD devices and on the underlying md0 mirror, while rejecting (ignoring) all others.

After modifying the lvm.conf file, you must run the vgscan command so LVM discards its configuration cache and re-scans devices for PV signatures.

- Disable the LVM cache by setting:

(:source:) write_cache_state = 0 (:sourceend:)

After disabling the LVM cache, make sure you remove any stale cache entries by deleting /etc/lvm/cache/.cache.
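To get a feel for what the filter expression does, here is a rough Python approximation of how LVM evaluates it: entries are tried in order and the first one whose pattern matches the device path decides whether the device is accepted (a) or rejected (r). This is only a sketch under that assumption. The function name lvm_filter_accepts is hypothetical and the real matching is done inside LVM, not by this code.

```python
import re

# Filter entries copied from the lvm.conf line above.
FILTER = ["a|/dev/drbd.*|", "a|/dev/md0|", "a|/dev/md/0|", "r|.*|"]


def lvm_filter_accepts(device: str, entries=FILTER) -> bool:
    """Approximate LVM filter evaluation: the first matching entry decides."""
    for entry in entries:
        action = entry[0]      # "a" (accept) or "r" (reject)
        pattern = entry[2:-1]  # text between the two delimiter characters
        if re.search(pattern, device):
            return action == "a"
    # Assumption: a device matched by no entry at all is accepted.
    return True


for dev in ["/dev/drbd1", "/dev/md0", "/dev/sdc1", "/dev/vg_xen/xen_lv1"]:
    verdict = "accepted" if lvm_filter_accepts(dev) else "rejected"
    print(f"{dev}: {verdict}")
```

Because the last entry rejects everything, only DRBD devices and the md0 mirror are scanned for PV signatures. The backing logical volumes themselves are ignored, which is the point of the nested setup.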

DRBD Installation and Configuration

Important note: compiling and installing the modules depends on the installed kernel! In this case I have:

ii libxenstore3.0 4.0.1-2 Xenstore communications library for Xen
ii linux-headers-2.6.32-5-common-xen 2.6.32-35 Common header files for Linux 2.6.32-5-xen
ii linux-headers-2.6.32-5-xen-amd64 2.6.32-35 Header files for Linux 2.6.32-5-xen-amd64
ii linux-image-2.6.32-5-xen-amd64 2.6.32-35 Linux 2.6.32 for 64-bit PCs, Xen dom0 support
ii xen-hypervisor-4.0-amd64 4.0.1-2 The Xen Hypervisor on AMD64
ii xen-tools 4.2-1 Tools to manage Xen virtual servers
ii xen-utils-4.0 4.0.1-2 XEN administrative tools
ii xen-utils-common 4.0.0-1 XEN administrative tools - common files
ii xenstore-utils 4.0.1-2 Xenstore utilities for Xen
ii xenwatch 0.5.4-2 Virtualization utilities, mostly for Xen

Check out sources from the public DRBD source repository

(Do this as a non-privileged user)

malin::~> git clone git://git.drbd.org/drbd-8.3.git

malin::~> cd drbd-8.3

malin::~> git checkout drbd-8.3.8

malin::~> dpkg-buildpackage -rfakeroot -b -uc

This build process will create two Debian packages:

- A package containing the DRBD userspace tools, named drbd8-utils_x.y.z-BUILD_ARCH.deb

- A module source package suitable for module-assistant named drbd8-module-source_x.y.z-BUILD_all.deb.

Packages installation and module installation

Install the packages with dpkg and then run module-assistant:

~# module-assistant --text-mode --force auto-install drbd8

I’ve had problems loading the out-of-tree new drbd module on helena when doing an upgrade. I don’t know why but it seems that the in-tree drbd module (8.3.7) requires lru_cache module and while this one is loaded, modprobe will fail to load drbd-8.3.8. My solution is to unload the drbd module, then unload lru_cache, move the in-tree kernel drbd module away, rebuild the depmod file and modprobe drbd. Weird.

Nov 1 15:58:13 helena kernel: [ 1920.589633] drbd: exports duplicate symbol lc_seq_dump_details (owned by lru_cache)

Nov 1 16:10:36 helena kernel: [ 2663.655588] drbd: exports duplicate symbol lc_seq_dump_details (owned by lru_cache)

~# rmmod drbd

~# rmmod lru_cache

~# mv /lib/modules/2.6.32-5-xen-amd64/kernel/drivers/block/drbd/drbd.ko{,8.3.7}

~# depmod -a

~# modprobe drbd

After that the system file modules.dep should contain the following:

~# grep drbd /lib/modules/`uname -r`/modules.dep

kernel/drivers/block/drbd.ko: kernel/drivers/connector/cn.ko

DRBD Storage configuration

Create a raid1 (mirror) with /dev/sdc1 and /dev/sdd1. Just follow the recipe given above in System disk migration to software raid1 (mirror).

Create one volume group vg_xen spanning the entire raid1 /dev/md0 and create a 24GB logical volume on it for the VM disk image and the swap file. (Create as many logical volumes as you need Xen guests.)

helena:~# pvcreate /dev/md0
  Physical volume "/dev/md0" successfully created
helena:~# vgcreate vg_xen /dev/md0
  Volume group "vg_xen" successfully created
helena:~# lvcreate --size 24G --name xen_lv0 vg_xen
  Logical volume "xen_lv0" created
helena:~# lvcreate --size 24G --name xen_lv1 vg_xen
  Logical volume "xen_lv1" created
helena:~# lvcreate --size 24G --name xen_lv2 vg_xen
  Logical volume "xen_lv2" created
helena:~# pvs
  PV       VG     Fmt  Attr PSize   PFree
  /dev/md0 vg_xen lvm2 a-   465.76g 393.76g
helena:~# vgs
  VG     #PV #LV #SN Attr   VSize   VFree
  vg_xen   1   3   0 wz--n- 465.76g 393.76g
helena:~# lvs
  LV      VG     Attr   LSize  Origin Snap%  Move Log Copy%  Convert
  xen_lv0 vg_xen -wi-ao 24.00g
  xen_lv1 vg_xen -wi-ao 24.00g
  xen_lv2 vg_xen -wi-ao 24.00g

Do exactly the same on the other node!
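The free space reported by pvs and vgs above is just the volume group size minus the three 24g volumes. A small sketch of that arithmetic, useful for estimating how many more guests of the same size would fit. The function names are made up for this example, and extent rounding and metadata overhead are ignored.

```python
def free_after(vg_size_gib: float, lv_size_gib: float, lv_count: int) -> float:
    """Space left in the volume group after creating lv_count equal volumes."""
    return vg_size_gib - lv_size_gib * lv_count


def additional_volumes(free_gib: float, lv_size_gib: float) -> int:
    """How many more volumes of lv_size_gib fit in the remaining space."""
    return int(free_gib // lv_size_gib)


free = free_after(465.76, 24.0, 3)
print(f"free: {free:.2f}g, room for {additional_volumes(free, 24.0)} more 24g volumes")
```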

DRBD resource r1

- The DRBD replication link is the point-to-point eth1-eth1 connection using 10.0.0.X addresses.

- The become-primary-on both in the startup section and allow-two-primaries in the net section are mandatory for Xen live migration but we will not be using it.

- The fencing stuff in the handlers section is required to avoid split-brain situations when stonith is enabled in the cluster. It is absolutely essential to have fencing enabled in order to avoid potentially disastrous split-brain situations. There might be issues with the handler fencing resource-and-stonith whereby one has multiple guests running on different nodes and an event triggers a stonith action. I still haven't fully understood the implications but suffice to say that a stonith-deathmatch is possible. Another possibility is that the cluster could create resource constraints that prevent one or more guests from running anywhere.

- The al-extents in the syncer section (the Activity Log Extents, 4MB each) is the prime number nearest to E = (Sync_Rate)*(Sync_Time)/4. I used Sync_Rate = 30MB/s and Sync_Time = 120s, yielding 900 (907 is the nearest prime).

- The resource configuration file is located in /etc/drbd.d/r1.res and the DRBD config file /etc/drbd.conf is such that all files matching /etc/drbd.d/*.res will be loaded when drbd starts.
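The al-extents rule of thumb above can be worked through in a few lines. This is only a sketch of the calculation as described in these notes (E = Sync_Rate * Sync_Time / 4, then the nearest prime). The function names are invented here, and a tie between two equally close primes is resolved towards the smaller one, which is an arbitrary choice.

```python
def is_prime(n: int) -> bool:
    """Trial-division primality test, fine for numbers this small."""
    if n < 2:
        return False
    i = 2
    while i * i <= n:
        if n % i == 0:
            return False
        i += 1
    return True


def nearest_prime(n: int) -> int:
    """Closest prime to n; on a tie the smaller prime is returned."""
    offset = 0
    while True:
        if is_prime(n - offset):
            return n - offset
        if is_prime(n + offset):
            return n + offset
        offset += 1


def al_extents(sync_rate_mb_s: float, sync_time_s: float, extent_mb: int = 4) -> int:
    """Number of 4MB activity log extents, rounded to the nearest prime."""
    estimate = round(sync_rate_mb_s * sync_time_s / extent_mb)
    return nearest_prime(estimate)


print(al_extents(30, 120))
```

For a 30MB/s rate and a 120s sync time this prints 907. The r1.res listing in these notes sets al-extents 809, a different prime, so it was presumably derived from other inputs.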

(:source:)
# /etc/drbd.d/r1.res
resource r1 {
    device /dev/drbd1;
    disk /dev/vg_xen/xen_lv1;
    meta-disk internal;
    startup {
        degr-wfc-timeout 30;
        wfc-timeout 30;
        # become-primary-on both;
    }
    net {
        # allow-two-primaries;
        cram-hmac-alg sha1;
        shared-secret "lucid";
        after-sb-0pri discard-zero-changes;
        after-sb-1pri discard-secondary;
        after-sb-2pri disconnect;
        rr-conflict disconnect;
    }
    disk {
        fencing resource-only;
        # fencing resource-and-stonith;
        on-io-error detach;
    }
    handlers {
        fence-peer "/usr/lib/drbd/crm-fence-peer.sh";
        after-resync-target "/usr/lib/drbd/crm-unfence-peer.sh";
        outdate-peer "/usr/lib/drbd/outdate-peer.sh";
        split-brain "/usr/lib/drbd/notify-split-brain.sh root";
        pri-on-incon-degr "/usr/lib/drbd/notify-pri-on-incon-degr.sh root";
        pri-lost-after-sb "/usr/lib/drbd/notify-pri-lost-after-sb.sh root";
        local-io-error "/usr/lib/drbd/notify-io-error.sh root";
    }
    syncer {
        rate 30M;
        csums-alg sha1;
        al-extents 809;
        verify-alg sha1;
    }
    on puck {
        address 10.0.0.1:7789;
    }
    on helena {
        address 10.0.0.2:7789;
    }
}
(:sourceend:)

Make the obvious changes for the other drbd resources: the resource name, the DRBD block device and the backing device name.

Make absolutely sure to use a unique replication port number (7789 above) for each and every resource! Also, in device=/dev/drbdX the value X MUST BE AN INTEGER (it will be the device minor number). Anything else will trigger a drbd shutdown with massive disruption/corruption. Yes, I tried it and it's not pretty :)
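Since both mistakes are easy to make when copying r1.res for the other resources, a quick sanity check before starting drbd can help. The following is a minimal sketch, assuming one resource per file and the simple one-statement-per-line layout used above. It is not a real DRBD configuration parser, and check_resources is a name made up for this example.

```python
import re
from collections import Counter


def check_resources(configs: dict) -> list:
    """Return a list of problems found in {filename: file text}."""
    problems = []
    ports = Counter()
    for name, text in configs.items():
        # The device must be /dev/drbd followed by an integer minor number.
        for dev in re.findall(r"^\s*device\s+(\S+?)\s*;", text, re.M):
            if not re.fullmatch(r"/dev/drbd\d+", dev):
                problems.append(f"{name}: device {dev} has no integer minor")
        # Both "on <host>" sections share one port, so count it once per file.
        for port in set(re.findall(r"^\s*address\s+[\d.]+:(\d+)\s*;", text, re.M)):
            ports[port] += 1
    for port, count in sorted(ports.items()):
        if count > 1:
            problems.append(f"port {port} is used by {count} resources")
    return problems


good = """resource r1 {
    device /dev/drbd1;
    on puck { }
    address 10.0.0.1:7789;
    address 10.0.0.2:7789;
}
"""
bad = good.replace("r1", "r2").replace("drbd1", "drbdX")
print(check_resources({"r1.res": good, "r2.res": bad}))
```

On a real node one would feed it the contents of the files matching /etc/drbd.d/*.res. The strings above are only stand-ins.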

DRBD/Pacemaker fencing ssh keys:

For the script /usr/lib/drbd/outdate-peer.sh (referenced above in the handlers section of the resource configuration file) to work, a password-less ssh key is required between the 2 nodes:

puck:~/root/.ssh/authorized_keys: from="helena,helena.bic.mni.mcgill.ca,132.206.178.60,10.0.0.2,192.168.1.19"...

helena:~/root/.ssh/authorized_keys: from="puck,puck.bic.mni.mcgill.ca,132.206.178.61,10.0.0.1,192.168.1.18"...

Enabling DRBD resource and initial synchronization

helena:~# dd if=/dev/zero of=/dev/vg_xen/xen_lv1 bs=512 count=1024

1024+0 records in

1024+0 records out

533504 bytes (534 kB) copied, 0.0767713 s, 6.9 MB/s

helena:~# drbdadm create-md r1

Writing meta data...

initializing activity log

NOT initialized bitmap

New drbd meta data block successfully created.

helena:~# modprobe drbd

helena:~# drbdadm attach r1

helena:~# cat /proc/drbd

version: 8.3.8 (api:88/proto:86-94)

GIT-hash: d78846e52224fd00562f7c225bcc25b2d422321d build by root@helena, 2010-09-09 17:41:03

1: cs:StandAlone ro:Secondary/Unknown ds:Inconsistent/DUnknown r----

ns:0 nr:0 dw:0 dr:0 al:0 bm:0 lo:0 pe:0 ua:0 ap:0 ep:1 wo:b oos:65009692

helena:~# drbdadm syncer r1

helena:~# cat /proc/drbd

version: 8.3.8 (api:88/proto:86-94)

GIT-hash: d78846e52224fd00562f7c225bcc25b2d422321d build by root@helena, 2010-09-09 17:41:03

1: cs:StandAlone ro:Secondary/Unknown ds:Inconsistent/DUnknown r----

ns:0 nr:0 dw:0 dr:0 al:0 bm:0 lo:0 pe:0 ua:0 ap:0 ep:1 wo:b oos:65009692

helena:~# drbdadm connect r1

Do the same steps on the second node. The attach/syncer/connect steps can be done in one go using drbdadm up resource. The DRBD state should then look like this:

helena:~# cat /proc/drbd

version: 8.3.8 (api:88/proto:86-94)

GIT-hash: d78846e52224fd00562f7c225bcc25b2d422321d build by root@helena, 2010-09-09 17:41:03

1: cs:Connected ro:Secondary/Secondary ds:Inconsistent/Inconsistent C r----

ns:0 nr:0 dw:0 dr:0 al:0 bm:0 lo:0 pe:0 ua:0 ap:0 ep:1 wo:b oos:67106780

If the devices are empty (no data) there is no preferred node to do the following but it must be done on only one of them!

Disable become-primary-on both (if it is enabled) while doing the following: edit the resource file and comment out the line, then update the DRBD state with drbdadm -d adjust <res_name>. The -d flag is for 'dry-run'. If there is no error, run the command without dry-run.

helena:~# drbdadm -- --overwrite-data-of-peer primary r1

helena:~# cat /proc/drbd

version: 8.3.8 (api:88/proto:86-94)

GIT-hash: d78846e52224fd00562f7c225bcc25b2d422321d build by root@helena, 2010-09-09 17:41:03

1: cs:SyncSource ro:Primary/Secondary ds:UpToDate/Inconsistent C r----

ns:32 nr:0 dw:0 dr:81520 al:0 bm:4 lo:0 pe:0 ua:0 ap:0 ep:1 wo:b oos:67025468

[>....................] sync'ed: 0.2% (65452/65532)M delay_probe: 0

finish: 0:41:04 speed: 27,104 (27,104) K/sec

Do the same for all the other drbd resources. Note that a drbd device has to be in primary mode in order to access any Volume Group (VG) living on top of it.
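When there are several resources it gets tedious to eyeball /proc/drbd for each of them. Here is a small sketch that extracts the connection state (cs), roles (ro) and disk states (ds) from the per-device status line in the 8.3 format shown above. The function names are hypothetical and other DRBD versions may format the file differently.

```python
import re

STATUS_RE = re.compile(
    r"^\s*(?P<minor>\d+):\s+cs:(?P<cs>\S+)\s+ro:(?P<ro>\S+)\s+ds:(?P<ds>\S+)"
)


def parse_proc_drbd(text: str) -> dict:
    """Map device minor -> connection state, roles and disk states."""
    devices = {}
    for line in text.splitlines():
        match = STATUS_RE.match(line)
        if not match:
            continue  # version, GIT-hash and counter lines are skipped
        local_role, _, peer_role = match["ro"].partition("/")
        local_disk, _, peer_disk = match["ds"].partition("/")
        devices[int(match["minor"])] = {
            "cs": match["cs"],
            "local_role": local_role,
            "peer_role": peer_role,
            "local_disk": local_disk,
            "peer_disk": peer_disk,
        }
    return devices


def initial_sync_done(state: dict) -> bool:
    """True once the device is connected and both disks are UpToDate."""
    return (
        state["cs"] == "Connected"
        and state["local_disk"] == "UpToDate"
        and state["peer_disk"] == "UpToDate"
    )


sample = """version: 8.3.8 (api:88/proto:86-94)
 1: cs:SyncSource ro:Primary/Secondary ds:UpToDate/Inconsistent C r----
    ns:32 nr:0 dw:0 dr:81520 al:0 bm:4 lo:0 pe:0 ua:0 ap:0 ep:1 wo:b oos:67025468
"""
for minor, state in parse_proc_drbd(sample).items():
    print(minor, state["cs"], "sync done" if initial_sync_done(state) else "still syncing")
```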

Manually Resolving a Split-Brain Situation

See http://www.drbd.org/users-guide-legacy/s-resolve-split-brain.html for DRBD 8.3.x. It is still supported, but the newer version 8.4.x has a different syntax, as explained in http://www.drbd.org/users-guide/s-resolve-split-brain.html

I will just copy what’s in the drbd user guide:

Manual split brain recovery

DRBD detects split brain at the time connectivity becomes available again and the peer nodes exchange the initial DRBD protocol handshake. If DRBD detects that both nodes are (or were at some point, while disconnected) in the primary role, it immediately tears down the replication connection. The tell-tale sign of this is a message like the following appearing in the system log:

Split-Brain detected, dropping connection!

After split brain has been detected, one node will always have the resource in a StandAlone connection state. The other might either also be in the StandAlone state (if both nodes detected the split brain simultaneously), or in WFConnection (if the peer tore down the connection before the other node had a chance to detect split brain).

At this point, unless you configured DRBD to automatically recover from split brain, you must manually intervene by selecting one node whose modifications will be discarded (this node is referred to as the split brain victim). This intervention is made with the following commands:

~# drbdadm secondary resource

~# drbdadm -- --discard-my-data connect resource

On the other node (the split brain survivor), if its connection state is also StandAlone, you would enter:

~# drbdadm connect resource

You may omit this step if the node is already in the WFConnection state; it will then reconnect automatically. If the resource affected by the split brain is a stacked resource, use drbdadm --stacked instead of just drbdadm.

After re-synchronization has completed, the split brain is considered resolved and the two nodes form a fully consistent, redundant replicated storage system again.
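The decision logic of the manual recovery quoted above is small enough to write down. The sketch below only builds the list of commands for a node given its role in the recovery (victim or survivor) and its current connection state. It does not execute anything, and the function name is made up for this illustration.

```python
def split_brain_commands(resource: str, role: str, cstate: str) -> list:
    """Commands to run on one node, following the procedure quoted above.

    role is "victim" (its changes are discarded) or "survivor";
    cstate is the node's current connection state, e.g. "StandAlone".
    """
    if role == "victim":
        return [
            f"drbdadm secondary {resource}",
            f"drbdadm -- --discard-my-data connect {resource}",
        ]
    if role == "survivor":
        # A survivor already in WFConnection reconnects on its own.
        if cstate == "StandAlone":
            return [f"drbdadm connect {resource}"]
        return []
    raise ValueError(f"unknown role: {role}")


for node, role, cstate in [("puck", "victim", "StandAlone"), ("helena", "survivor", "WFConnection")]:
    print(node, split_brain_commands("r1", role, cstate))
```

Which node plays the victim is a human decision. Nothing in this sketch can tell which side holds the data worth keeping.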

Xen Setup

A good tutorial for Xen on Lenny 64bit can be found at http://www.howtoforge.com/virtualization-with-xen-on-debian-lenny-amd64.

Some notes on Xen-4.0 for Debian/Squeeze http://wiki.xensource.com/xenwiki/Xen4.0

Xen boot Serial Console Redirection

Setting up a serial console on a laptop to possibly capture Xen kernel boot messages can be useful for debugging. Here’s how.

Use the following grub menu.lst stanza (replace the root=UUID= with your boot disk):

(:source:)
title Raid1 (hd1) Xen 4.0-amd64 / Debian GNU/Linux, kernel 2.6.32-5-xen-amd64 / Serial Console
root (hd1,0)
kernel /boot/xen-4.0-amd64.gz dom0_mem=2048M dom0_max_vcpus=2 dom0_vcpus_pin loglvl=all guest_loglvl=all sync_console console_to_ring console=vga com1=38400,8n1 console=com1
module /boot/vmlinuz-2.6.32-5-xen-amd64 root=UUID=c0186fd3-605d-4a23-b718-3b4c869040e2 ro console=hvc0 earlyprintk=xen nomodeset
module /boot/initrd.img-2.6.32-5-xen-amd64
(:sourceend:)

This uses the first onboard serial port (COM1) on the host with a baud rate of 38400Bd with 8 databits, no parity and 1 stopbit.

The pv_ops dom0 Linux kernel is configured to use the Xen (hvc0) console. Dom0 Linux kernel console output will go to the serial console through Xen, so both Xen hypervisor and dom0 linux kernel output will go to the same serial console, where they can then be captured. More info can be found on the Xen Wiki http://wiki.xensource.com/xenwiki/XenSerialConsole.

Note in the above that dom0 memory is restricted to 2Gb (dom0_mem=2048M) and will use up to 2 virtual cpus (dom0_max_vcpus=2) and they are pinned with dom0_vcpus_pin. See the section below Xen VCPUs Memory Management for extra info.

The Xen boot options can be found here http://wiki.xensource.com/xenwiki/XenHypervisorBootOptions. Note that this is WorK In Progress (TM).

Use the following grub menu entry to hide the Xen boot messages and send the guest boot messages to the console (as usual) but keep the debug info for the Dom0 and the guests:

(:source:)
title Raid1 (hd1) Xen 4.0-amd64 / Debian GNU/Linux, kernel 2.6.32-5-xen-amd64
root (hd1,0)
kernel /boot/xen-4.0-amd64.gz dom0_mem=2048M dom0_max_vcpus=2 loglvl=all guest_loglvl=all console=tty0
module /boot/vmlinuz-2.6.32-5-xen-amd64 root=UUID=c0186fd3-605d-4a23-b718-3b4c869040e2 ro
module /boot/initrd.img-2.6.32-5-xen-amd64
(:sourceend:)

Here is a procedure to gather information in case of a kernel hang:

- In the Linux kernel, hit SysRq-L, SysRq-T

- Go in the hypervisor, hit Ctrl-A three times.

You should see a prompt saying (XEN) ** Serial …

- Hit * - that will collect all of the relevant information.

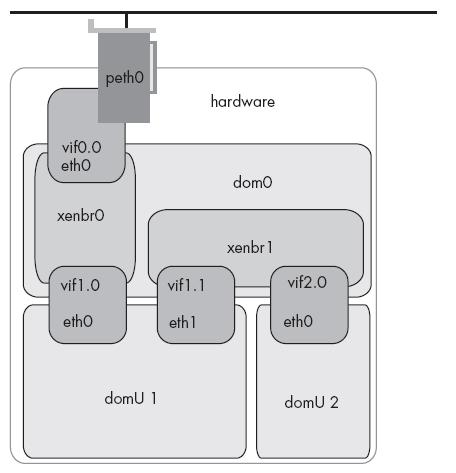

Network Bridging

Network bridging can be illustrated by this diagram (stolen from http://wiki.prgmr.com/mediawiki/index.php/Chapter_5:_Networking)

Here is the chain of events that happens when Xend starts up and runs the network-bridge script on the dom0:

- creates a new bridge named xenbr0

- “real” ethernet interface eth0 is brought down

- the IP and MAC addresses of eth0 are copied to virtual network interface veth0

- real interface eth0 is renamed peth0

- virtual interface veth0 is renamed eth0

- peth0 and vif0.0 are attached to bridge xenbr0. Please notice that in xen 3.3 and 4.0.x, the default bridge name is the same as the interface it is attached to, e.g. bridge name eth0, eth1 or ethX.VlanID

- the bridge, peth0, eth0 and vif0.0 are brought up

Enable network bridging for Xend by uncommenting (network-script network-bridge) in /etc/xen/xend-config.sxp. Make sure to specify the netdev option with the proper value if there is more than one physical network interface, as is the case here.

(network-script 'network-bridge netdev=eth0')

Reboot. Have a look at http://wiki.xen.org/xenwiki/XenNetworking for an explanation of the different network setups and topologies for Xen.

Xen /dev and /proc files

Verify that Xend is well and running and that /proc/xen filesystem is mounted:

node1~: ls -l /proc/xen
-r--r--r-- 1 root root 0 Sep 15 13:42 capabilities
-rw------- 1 root root 0 Sep 15 13:42 privcmd
-rw------- 1 root root 0 Sep 15 13:42 xenbus
-rw------- 1 root root 0 Sep 15 13:42 xsd_kva
-rw------- 1 root root 0 Sep 15 13:42 xsd_port
node1~: cat /proc/xen/capabilities
control_d

The control_d means that you are in the Xen Dom0 (control domain). You should have the following devices in /dev/xen

crw------- 1 root root 10, 56 Sep 15 13:42 evtchn

crw------- 1 root root 10, 62 Sep 15 13:42 gntdev

and their minor/major device numbers should correspond to those in /proc/misc

56 xen/evtchn

62 xen/gntdev
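The comparison between /dev/xen and /proc/misc can be automated. The sketch below parses the minor numbers listed in /proc/misc and compares them with the minor numbers of the device nodes under /dev/xen. The function names are invented for this example, and it assumes the two-column layout shown above.

```python
import os


def parse_proc_misc(text: str) -> dict:
    """Map misc device name -> minor number from /proc/misc content."""
    minors = {}
    for line in text.splitlines():
        fields = line.split()
        if len(fields) == 2 and fields[0].isdigit():
            minors[fields[1]] = int(fields[0])
    return minors


def xen_minor_mismatches(misc_text: str, dev_dir: str = "/dev/xen") -> list:
    """Names of xen/* entries whose node in dev_dir is missing or has another minor."""
    bad = []
    for name, minor in parse_proc_misc(misc_text).items():
        if not name.startswith("xen/"):
            continue
        path = os.path.join(dev_dir, name.split("/", 1)[1])
        try:
            if os.minor(os.stat(path).st_rdev) != minor:
                bad.append(name)
        except FileNotFoundError:
            bad.append(name)
    return bad


if os.path.exists("/proc/misc"):
    with open("/proc/misc") as handle:
        print(xen_minor_mismatches(handle.read()) or "all xen misc devices match")
```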

Xend and xen-tools Configuration

Some default installation settings must be changed if one wants to enable live migration and ultimately have it supervised by pacemaker. It is important to restrict which hosts can issue a relocation request with (xend-relocation-hosts-allow '') and be sure to check that there is no typo in there!

(:source:)
puck:~# ~malin/bin/crush /etc/xen/xend-config.sxp
(xend-http-server yes)
(xend-unix-server yes)
(xend-relocation-server yes)
(xend-port 8000)
(xend-relocation-port 8002)
(xend-address localhost)
(xend-relocation-address '')
# (xend-relocation-hosts-allow 'localhost helena\.bic\.mni\.mcgill\.ca puck\.bic\.mni\.mcgill\.ca')
(xend-relocation-hosts-allow '^localhost$ ^helena\\.bic\\.mni\\.mcgill\\.ca$ ^puck\\.bic\\.mni\\.mcgill\\.ca$ ^132\\.206\\.178\\.60$ ^132\\.206\\.178\\.61$')
(network-script 'network-bridge netdev=eth0')
(vif-script vif-bridge)
(dom0-min-mem 2048)
(enable-dom0-ballooning no)
(total_available_memory 0)
(dom0-cpus 0)
(vncpasswd '')
(:sourceend:)
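A typo in xend-relocation-hosts-allow is easy to miss, so it can be worth testing the expressions outside of Xend first. The sketch below assumes that each whitespace-separated entry is treated as a regular expression tested against the name or address of the connecting host. The function name is hypothetical and this is not Xend's own code. Note that the config file doubles the backslashes, while the patterns here use a single one.

```python
import re

# Entries from the active xend-relocation-hosts-allow line above.
HOSTS_ALLOW = (
    r"^localhost$ ^helena\.bic\.mni\.mcgill\.ca$ ^puck\.bic\.mni\.mcgill\.ca$ "
    r"^132\.206\.178\.60$ ^132\.206\.178\.61$"
)

# The commented-out variant, without the ^ and $ anchors.
UNANCHORED = r"localhost helena\.bic\.mni\.mcgill\.ca puck\.bic\.mni\.mcgill\.ca"


def relocation_allowed(host: str, hosts_allow: str = HOSTS_ALLOW) -> bool:
    """True if any whitespace-separated pattern matches the host name or address."""
    return any(re.search(pattern, host) for pattern in hosts_allow.split())


for host in ["puck.bic.mni.mcgill.ca", "132.206.178.60", "puck.bic.mni.mcgill.ca.example.org"]:
    print(host, relocation_allowed(host), relocation_allowed(host, UNANCHORED))
```

The last host in the loop shows why the anchored form is the safer one: without ^ and $ a pattern also matches any longer name that merely contains the allowed one.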

(:source:)

puck:~# ~malin/bin/crush /etc/xen-tools/xen-tools.conf

lvm = xen

install-method = debootstrap

install-method = debootstrap

debootstrap-cmd = /usr/sbin/debootstrap

size = 20Gb

memory = 2Gb

swap = 3Gb

fs = ext3

dist = `xt-guess-suite-and-mirror --suite`

image = full

gateway = 132.206.178.1

netmask = 255.255.255.0

broadcast = 132.206.178.255

nameserver = 132.206.178.7

bridge = eth0

kernel = /boot/vmlinuz-`uname -r`

initrd = /boot/initrd.img-`uname -r`

arch = amd64

mirror = `xt-guess-suite-and-mirror --mirror`

ext3_options = noatime,nodiratime,errors=remount-ro

ext2_options = noatime,nodiratime,errors=remount-ro

xfs_options = defaults

reiserfs_options = defaults

btrfs_options = defaults

serial_device = hvc0

disk_device = xvda

output = /etc/xen

extension = .cfg

copyhosts = 1

(:sourceend:)

(:source:)

puck:~# ~malin/bin/crush /etc/default/xendomains

XENDOMAINS_SYSRQ=""

XENDOMAINS_USLEEP=100000

XENDOMAINS_CREATE_USLEEP=5000000

XENDOMAINS_MIGRATE=""

XENDOMAINS_SAVE=/var/lib/xen/save

XENDOMAINS_SHUTDOWN="--halt --wait"

XENDOMAINS_SHUTDOWN_ALL="--all --halt --wait"

XENDOMAINS_RESTORE=true

XENDOMAINS_AUTO=/etc/xen/auto

XENDOMAINS_AUTO_ONLY=false

XENDOMAINS_STOP_MAXWAIT=300

(:sourceend:)

See http://wiki.xensource.com/xenwiki/XenBestPractices for more info. It’s best to allocate a fixed amount of memory to the Dom0 (dom0-min-mem 2048) and also to disable dom0 memory ballooning with the setting (enable-dom0-ballooning no) in the xend config file. This will make sure that the Dom0 never runs out of memory.

The Xen Dom0 physical network device should be present:

puck:~# ifconfig

eth0 Link encap:Ethernet HWaddr 00:1b:21:50:0f:ec

inet addr:132.206.178.61 Bcast:132.206.178.255 Mask:255.255.255.0

inet6 addr: fe80::21b:21ff:fe50:fec/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:2621736 errors:0 dropped:0 overruns:0 frame:0

TX packets:111799 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:290640331 (277.1 MiB) TX bytes:17035127 (16.2 MiB)

eth1 Link encap:Ethernet HWaddr 00:30:48:9e:ff:04

inet addr:10.0.0.1 Bcast:10.0.0.255 Mask:255.0.0.0

inet6 addr: fe80::230:48ff:fe9e:ff04/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:62308 errors:0 dropped:0 overruns:0 frame:0

TX packets:62314 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:4485102 (4.2 MiB) TX bytes:3258684 (3.1 MiB)

Memory:fbce0000-fbd00000

eth2 Link encap:Ethernet HWaddr 00:30:48:9e:ff:05

inet addr:192.168.1.18 Bcast:192.168.1.1 Mask:255.255.255.0

inet6 addr: fe80::230:48ff:fe9e:ff05/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:69006 errors:0 dropped:0 overruns:0 frame:0

TX packets:24 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:4140880 (3.9 MiB) TX bytes:1934 (1.8 KiB)

Memory:fbde0000-fbe00000

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

inet6 addr: ::1/128 Scope:Host

UP LOOPBACK RUNNING MTU:16436 Metric:1

RX packets:5590 errors:0 dropped:0 overruns:0 frame:0

TX packets:5590 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:6419836 (6.1 MiB) TX bytes:6419836 (6.1 MiB)

peth0 Link encap:Ethernet HWaddr 00:1b:21:50:0f:ec

inet6 addr: fe80::21b:21ff:fe50:fec/64 Scope:Link

UP BROADCAST RUNNING PROMISC MULTICAST MTU:1500 Metric:1

RX packets:73515001 errors:0 dropped:0 overruns:0 frame:0

TX packets:114824 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:72955828195 (67.9 GiB) TX bytes:17247510 (16.4 MiB)

Memory:fbb20000-fbb40000

and the bridge configuration can be inspected with the brctl command:

puck:~# brctl show
bridge name     bridge id               STP enabled     interfaces
eth0            8000.001b21500fec       no              peth0

Create the Guest (LVM Disk Device)

Each Xen guest host will live on its own volume group as 2 logical volumes (one for its virtual disk and another for the virtual swap device). This volume group is located on top of its own replication device (so-called nested DRBD).

See below for how to create a guest host using disk images rather than LVMs.

You will hit a buglet in xen-create-image if you specify the disk image size or swap in units of Kb in the file /etc/xen-tools/xen-tools.conf.

Ideally the domU config file should be shared between the nodes. Just make sure that both nodes have identical copies. For the moment I just stuff them in the default /etc/xen/<guest_name>.bic.mni.mcgill.ca.cfg and sync it manually to the other node. The command below creates a guest called kurma with IP address 132.206.178.241 and its virtual disk devices on the volume group vg1. Obviously the volume group must be accessible, which means the drbd device under it must be in primary mode. If it's not, promote it to primary and force a scan of the volume groups with vgscan.

puck:~# xen-create-image --hostname=kurma.bic.mni.mcgill.ca --ip=132.206.178.241 --arch=amd64 --role=udev --lvm=vg1

General Information

--------------------

Hostname : kurma.bic.mni.mcgill.ca

Distribution : squeeze

Mirror : http://ftp.ca.debian.org/debian/

Partitions : swap 3Gb (swap)

/ 20Gb (ext3)

Image type : full

Memory size : 2Gb

Kernel path : /boot/vmlinuz-2.6.32-5-xen-amd64

Initrd path : /boot/initrd.img-2.6.32-5-xen-amd64

Networking Information

----------------------

IP Address 1 : 132.206.178.241 [MAC: 00:16:3E:85:58:34]

Netmask : 255.255.255.0

Broadcast : 132.206.178.255

Gateway : 132.206.178.1

Nameserver : 132.206.178.7

Logical volume "kurma.bic.mni.mcgill.ca-swap" created

Creating swap on /dev/vg1/kurma.bic.mni.mcgill.ca-swap

mkswap: /dev/vg1/kurma.bic.mni.mcgill.ca-swap: warning: don't erase bootbits sectors

on whole disk. Use -f to force.

Setting up swapspace version 1, size = 3145724 KiB

no label, UUID=cd23c566-4572-43b0-aed9-f8643eebbad8

Done

Logical volume "kurma.bic.mni.mcgill.ca-disk" created

Creating ext3 filesystem on /dev/vg1/kurma.bic.mni.mcgill.ca-disk

mke2fs 1.41.12 (17-May-2010)

Filesystem label=

OS type: Linux

Block size=4096 (log=2)

Fragment size=4096 (log=2)

Stride=0 blocks, Stripe width=0 blocks

1310720 inodes, 5242880 blocks

262144 blocks (5.00%) reserved for the super user

First data block=0

Maximum filesystem blocks=4294967296

160 block groups

32768 blocks per group, 32768 fragments per group

8192 inodes per group

Superblock backups stored on blocks:

32768, 98304, 163840, 229376, 294912, 819200, 884736, 1605632, 2654208,

4096000

Writing inode tables: done

Writing superblocks and filesystem accounting information: done

This filesystem will be automatically checked every 20 mounts or

180 days, whichever comes first. Use tune2fs -c or -i to override.

Done

Installation method: debootstrap

Copying files from host to image.

Copying files from /var/cache/apt/archives/ -> /tmp/2eMPe0va9g/var/cache/apt/archives

Done

Done

I: Retrieving Release

I: Retrieving Packages

I: Validating Packages

I: Resolving dependencies of required packages...

I: Resolving dependencies of base packages...

I: Found additional required dependencies: insserv libbz2-1.0 libdb4.8 libslang2

I: Found additional base dependencies: libnfnetlink0 libsqlite3-0

I: Checking component main on http://ftp.ca.debian.org/debian...

I: Validating libacl1

I: Validating adduser

I: Validating apt-utils

I: Validating apt

Copying files from new installation to host.

Copying files from /tmp/2eMPe0va9g/var/cache/apt/archives -> /var/cache/apt/archives/

Done

Done

Done

Running hooks

Running hook 01-disable-daemons

hook 01-disable-daemons: done.

Running hook 05-shadowconfig-on

Shadow passwords are now on.

hook 05-shadowconfig-on: done.

Running hook 15-disable-hwclock

update-rc.d: using dependency based boot sequencing

hook 15-disable-hwclock: done.

Running hook 20-setup-apt

Get:1 http://ftp.ca.debian.org squeeze Release.gpg [835 B]

Ign http://ftp.ca.debian.org/debian/ squeeze/contrib Translation-en

Ign http://ftp.ca.debian.org/debian/ squeeze/main Translation-en

Ign http://ftp.ca.debian.org/debian/ squeeze/non-free Translation-en

Hit http://ftp.ca.debian.org squeeze Release

[...]

Creating Xen configuration file

Done

Setting up root password

Generating a password for the new guest.

All done

Installation Summary

---------------------

Hostname : kurma.bic.mni.mcgill.ca

Distribution : squeeze

IP-Address(es) : 132.206.178.241

RSA Fingerprint : 34:03:64:21:dd:9a:bb:c0:f5:4d:10:05:ce:20:6d:63

Root Password : *********

It is vital to explicitly specify the volume group name that will host the guest domU disks with --lvm=. Otherwise the default value from xen-tools.conf will be used.

Boot the Guest

puck:~# xm create /xen_cluster/xen0/matsya.bic.mni.mcgill.ca.cfg

[...]

~# xm list

Name ID Mem VCPUs State Time(s)

Domain-0 0 2043 2 r----- 137872.3

matsya.bic.mni.mcgill.ca 3 2048 3 -b---- 9275.4

~# xm vcpu-list

Name ID VCPU CPU State Time(s) CPU Affinity

Domain-0 0 0 0 r-- 63861.3 0

Domain-0 0 1 1 -b- 74013.4 1

matsya.bic.mni.mcgill.ca 3 0 6 -b- 3536.2 any cpu

matsya.bic.mni.mcgill.ca 3 1 7 -b- 2450.8 any cpu

matsya.bic.mni.mcgill.ca 3 2 4 -b- 3288.4 any cpu

(:source:)

~# ~malin/bin/crush /etc/xen/matsya.bic.mni.mcgill.ca.cfg

kernel = '/boot/vmlinuz-2.6.32-5-xen-amd64'

ramdisk = '/boot/initrd.img-2.6.32-5-xen-amd64'

vcpus = '2'

memory = '2048'

root = '/dev/xvda2 ro'

disk = [

'phy:/dev/vg0/xen0-disk,xvda2,w',

'phy:/dev/vg0/xen0-swap,xvda1,w',

]

name = 'matsya.bic.mni.mcgill.ca'

vif = [ 'ip=132.206.178.240,mac=00:16:3E:17:1A:86,bridge=eth0' ]

on_poweroff = 'destroy'

on_reboot = 'restart'

on_crash = 'restart'

(:sourceend:)

Once the guest is created and running, Xen will set up the network. For instance, here is the bridge layout and all the chains defined in the iptables of the Dom0 in the case of 2 running guests, matsya.bic.mni.mcgill.ca and kurma.bic.mni.mcgill.ca:

helena:~# brctl show

bridge name bridge id STP enabled interfaces

eth0 8000.001b21538ed6 no peth0

vif7.0

vif8.0

helena:~# iptables -L

Chain INPUT (policy ACCEPT)

target prot opt source destination

Chain FORWARD (policy ACCEPT)

target prot opt source destination

ACCEPT all -- anywhere anywhere state RELATED,ESTABLISHED PHYSDEV match --physdev-out vif8.0

ACCEPT udp -- anywhere anywhere PHYSDEV match --physdev-in vif8.0 udp spt:bootpc dpt:bootps

ACCEPT all -- anywhere anywhere state RELATED,ESTABLISHED PHYSDEV match --physdev-out vif8.0

ACCEPT all -- xennode-1.bic.mni.mcgill.ca anywhere PHYSDEV match --physdev-in vif8.0

ACCEPT all -- anywhere anywhere state RELATED,ESTABLISHED PHYSDEV match --physdev-out vif7.0

ACCEPT udp -- anywhere anywhere PHYSDEV match --physdev-in vif7.0 udp spt:bootpc dpt:bootps

ACCEPT all -- anywhere anywhere state RELATED,ESTABLISHED PHYSDEV match --physdev-out vif7.0

ACCEPT all -- xennode-2.bic.mni.mcgill.ca anywhere PHYSDEV match --physdev-in vif7.0

Chain OUTPUT (policy ACCEPT)

target prot opt source destination

You can peek at the networking setup of the guest using the xenstore command xenstore-ls fed with the Xen backend path of the vif (virtual interface):

puck:~# xm network-list kurma.bic.mni.mcgill.ca

Idx BE MAC Addr. handle state evt-ch tx-/rx-ring-ref BE-path

0 0 00:16:3E:85:58:34 0 4 10 768 /769 /local/domain/0/backend/vif/11/0

puck:~# xenstore-ls /local/domain/0/backend/vif/11/0

bridge = "eth0"

domain = "kurma.bic.mni.mcgill.ca"

handle = "0"

uuid = "f0cef322-dc1b-c935-223b-62a44a985181"

script = "/etc/xen/scripts/vif-bridge"

ip = "132.206.178.241"

state = "4"

frontend = "/local/domain/11/device/vif/0"

mac = "00:16:3E:85:58:34"

online = "1"

frontend-id = "11"

feature-sg = "1"

feature-gso-tcpv4 = "1"

feature-rx-copy = "1"

feature-rx-flip = "0"

feature-smart-poll = "1"

hotplug-status = "connected"

Guest Console Login

malin@cicero:~$ ssh kurma

Linux kurma 2.6.32-5-xen-amd64 #1 SMP Wed Jan 12 05:46:49 UTC 2011 x86_64

The programs included with the Debian GNU/Linux system are free software;

the exact distribution terms for each program are described in the

individual files in /usr/share/doc/*/copyright.

Debian GNU/Linux comes with ABSOLUTELY NO WARRANTY, to the extent

permitted by applicable law.

kurma::~> pwd

/home/bic/malin

kurma::~> df

Filesystem Size Used Avail Use% Mounted on

/dev/xvda2 20G 1.1G 18G 6% /

tmpfs 1023M 0 1023M 0% /lib/init/rw

udev 987M 32K 987M 1% /dev

tmpfs 1023M 4.0K 1023M 1% /dev/shm

gustav:/raid/home/bic

503G 161G 342G 33% /home/bic

kurma::~>

Hit CTRL-] to disconnect from the virtual console. At this point the domU is also accessible through ssh. Editing in the console can lead to frustration as the terminal device emulation is a bit screwy, so it’s better to connect to the guest over ssh.

Xen Block Device, Scheduling, Virtual CPUs and Memory Management

See http://book.xen.prgmr.com/mediawiki/index.php/Scheduling for good info.

http://publib.boulder.ibm.com/infocenter/lnxinfo/v3r0m0/index.jsp?topic=/liaai/xen/rhel/liaaixenrbindpin.htm

http://wiki.xensource.com/xenwiki/XenBestPractices for Xen Best Practices, like disabling ballooning for dom0, pinning core(s) to it and giving it more cpu time to service outstanding IO requests.

The xm tools are deprecated. A new tool called xl is now available.

Virtual Block Devices

You can attach and detach block devices to a guest using the xm-tools commands:

~# xm block-attach domain-id be-dev fe-dev mode [bedomain-id]

- domain-id

The domain id of the guest domain that the device will be attached to.

- be-dev

The device in the backend domain (usually domain 0) to be exported. This can be specified as a physical partition (phy:/dev/sda7) or as a file mounted as loopback (file://path/to/loop.iso).

- fe-dev

How the device should be presented to the guest domain. It can be specified as a symbolic name, such as /dev/xvdb1 or even xvdb1.

- mode

The access mode for the device from the guest domain. Supported modes are w (read/write) or r (read-only).

- bedomain-id

The back end domain hosting the device. This defaults to domain 0.

To detach a domain’s virtual block device use:

~# xm block-detach domain-id devid [--force]

devid may be the symbolic name or the numeric device id given to the device by domain 0. You will need to run xm block-list to determine that number.

You need the cooperation of the guest to detach a device (it can be opened or in use inside the guest) and using --force can lead to IO errors. Be careful!

As an example, let's say one wants to give a guest with guest id 1 access to a logical volume lv0 created on the dom0 using the standard LVM tools:

~# lvcreate -L 250G -n lv0 vg_xen

~# xm block-attach 1 phy:/dev/vg_xen/lv0 /dev/xvdb1 w
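The argument order of xm block-attach is easy to get wrong, so here is a minimal sketch of a helper that only assembles the command line described above and refuses unknown access modes. The function name block_attach_argv is my own invention; nothing here runs xm.

```python
def block_attach_argv(domain_id, be_dev, fe_dev, mode="w", bedomain_id=None):
    """Build the argument list for `xm block-attach` without running it."""
    # Only the two access modes listed above are accepted.
    if mode not in ("r", "w"):
        raise ValueError("mode must be 'r' (read-only) or 'w' (read/write)")
    argv = ["xm", "block-attach", str(domain_id), be_dev, fe_dev, mode]
    # The backend domain is optional; xm falls back to domain 0 when it is omitted.
    if bedomain_id is not None:
        argv.append(str(bedomain_id))
    return argv


if __name__ == "__main__":
    print(" ".join(block_attach_argv(1, "phy:/dev/vg_xen/lv0", "/dev/xvdb1")))
```

The resulting list can be handed to subprocess.run on a dom0 once the volume exists.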

Scheduling

By default, unless specified with the Xen boot line sched= parameter the domU scheduler is set to credit. The xm sched-credit syntax:

xm sched-credit [ -d domain-id [ -w[=WEIGHT] | -c[=CAP] ] ]

This sets the credit scheduler parameters. The credit scheduler is a proportional fair share CPU scheduler built from the ground up to be work conserving on SMP hosts.

Each domain (including Domain0) is assigned a weight and a cap.

PARAMETERS:

- WEIGHT A domain with a weight of 512 will get twice as much CPU as a domain with a weight of 256 on a contended host. Legal weights range from 1 to 65535 and the default is 256.

- CAP The cap optionally fixes the maximum amount of CPU a domain will be able to consume, even if the host system has idle CPU cycles. The cap is expressed in percentage of one physical CPU: 100 is 1 physical CPU, 50 is half a CPU, 400 is 4 CPUs, etc. The default, 0, means there is no upper cap.
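To get a feel for how weight and cap interact, here is a rough sketch of the arithmetic on a fully contended host. It is a simplification of my own, not the scheduler's algorithm: it ignores the number of VCPUs per domain and does not hand the time freed by a cap back to the other domains. The function name and the domain names are made up for the example.

```python
def credit_shares(weights, caps=None, physical_cpus=1):
    """Estimate each domain's CPU entitlement on a contended host.

    Values are in percent of ONE physical CPU (100 == one full CPU),
    the same unit the cap uses.
    """
    caps = caps or {}
    total_weight = sum(weights.values())
    capacity = 100.0 * physical_cpus
    shares = {}
    for name, weight in weights.items():
        # Proportional fair share: weight / sum of all weights.
        share = capacity * weight / total_weight
        cap = caps.get(name, 0)  # 0 means "no upper cap"
        if cap > 0:
            share = min(share, cap)
        shares[name] = share
    return shares


if __name__ == "__main__":
    # A weight of 512 gets twice the CPU of a weight of 256.
    print(credit_shares({"Domain-0": 512, "guest": 256}, physical_cpus=3))
```

With 3 physical CPUs the 512-weight domain is entitled to the equivalent of two CPUs and the 256-weight domain to one; adding a cap of 50 to the second would pin it to half a CPU regardless of weight.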

VCPUs and Memory

To restrict Dom0 to a fixed number of virtual CPUs, add the option dom0_max_vcpus=X to the Xen hypervisor line in /boot/grub/menu.lst, where X is the number of vcpus dedicated to Dom0. The grub menu entry for a dom0 with an allocation of 2GB of ram and 2 virtual CPUs would look like:

(:source:)

title Raid1 (hd1) Xen 4.0-amd64 / Debian GNU/Linux, kernel 2.6.32-5-xen-amd64 / Serial Console

root (hd1,0)

kernel /boot/xen-4.0-amd64.gz dom0_mem=2048M dom0_max_vcpus=2 loglvl=all guest_loglvl=all sync_console console_to_ring console=vga com1=38400,8n1 console=com1

module /boot/vmlinuz-2.6.32-5-xen-amd64 root=UUID=c0186fd3-605d-4a23-b718-3b4c869040e2 ro console=hvc0 earlyprintk=xen nomodeset

module /boot/initrd.img-2.6.32-5-xen-amd64

(:sourceend:)

The memory and virtual cpus allocated to an unprivileged Xen domain (domU) can be set at domain creation and changed while the domU is running. As an example, here is a domU allocated 4 virtual cpus (vcpus='4'), pinned to the third through sixth logical cpus (cpus=['2','3','4','5']), with 2GB of memory (memory='2048'):

(:source:)

kernel = '/boot/vmlinuz-2.6.32-5-xen-amd64'

ramdisk = '/boot/initrd.img-2.6.32-5-xen-amd64'

vcpus = '4'

cpus = ['2','3','4','5']

memory = '2048'

root = '/dev/xvda1 ro'

disk = [

'file:/xen_cluster/r1/disk.img,xvda1,w',

'file:/xen_cluster/r2/swap.img,xvda2,w',

]

name = 'xennode-1.bic.mni.mcgill.ca'

vif = [ 'ip=132.206.178.241,mac=00:16:3E:12:94:DF,bridge=eth0' ]

on_poweroff = 'destroy'

on_reboot = 'restart'

on_crash = 'restart'

(:sourceend:)
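Since an xm domU config file is plain Python assignments, it can be sanity-checked with a few lines of Python before handing it to xm create. The sketch below is only an illustration: the helper names and the rule "one pinned cpu per vcpu" are my own convention for this setup, not something Xen enforces, and exec should only ever be fed config files you wrote yourself.

```python
SAMPLE_CONFIG = """
vcpus  = '4'
cpus   = ['2', '3', '4', '5']
memory = '2048'
name   = 'xennode-1.bic.mni.mcgill.ca'
"""


def load_domu_config(text):
    """Evaluate xm-style config text and return its variables as a dict."""
    namespace = {}
    # Empty builtins: the config is expected to contain only literals.
    exec(text, {"__builtins__": {}}, namespace)
    return namespace


def pinning_problems(config):
    """Return a list of human-readable problems with the vcpus/cpus settings."""
    problems = []
    vcpus = int(config.get("vcpus", 1))
    cpus = config.get("cpus", [])
    if cpus and len(cpus) != vcpus:
        problems.append(f"{vcpus} vcpus but {len(cpus)} pinned cpus")
    if len(set(cpus)) != len(cpus):
        problems.append("a physical cpu is listed more than once")
    return problems


if __name__ == "__main__":
    config = load_domu_config(SAMPLE_CONFIG)
    print(config["name"], pinning_problems(config) or "pinning looks consistent")
```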

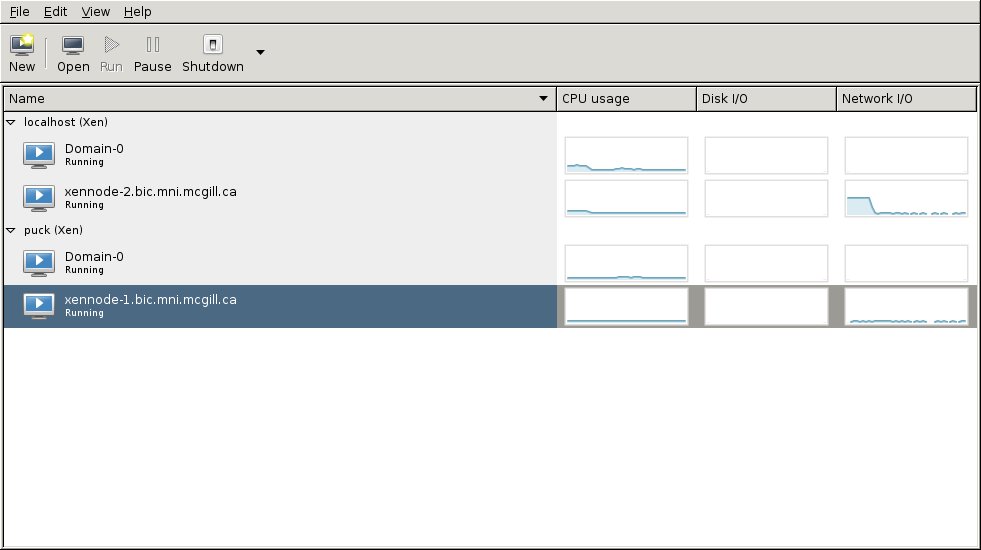

Xen resources management

A multitude of tools exist to manage and gather information and/or statistics from a Xen virtual domain. Have a look at http://wiki.xensource.com/xenwiki/XenManagementTools for a list.

A GUI that can connect remotely over secured channels is virt-manager, for example. In order to allow connections one must reconfigure Xend by enabling (xend-unix-server yes) in /etc/xen/xend-config.sxp, then restart it with /etc/init.d/xend restart. For virsh, set VIRSH_DEFAULT_CONNECT_URI=xen:///

The command xentop will show the state of the Dom0 and running DomUs:

puck:~# xentop

xentop - 16:11:50 Xen 4.0.1

2 domains: 1 running, 1 blocked, 0 paused, 0 crashed, 0 dying, 0 shutdown

Mem: 12573788k total, 4351752k used, 8222036k free CPUs: 8 @ 2000MHz

NAME STATE CPU(sec) CPU(%) MEM(k) MEM(%) MAXMEM(k) MAXMEM(%) VCPUS NETS NETTX(k) NETRX(k) VBDS VBD_OO VBD_RD VBD_WR VBD_RSECT VBD_WSECT SSID

Domain-0 -----r 514 1.6 2092288 16.6 no limit n/a 2 0 0 0 0 0 0 0 0 0 0

VCPUs(sec): 0: 362s 1: 152s

xennode-1. --b--- 15 0.8 2089984 16.6 2097152 16.7 4 1 1199 6 1 0 2 164 16 2792 0

VCPUs(sec): 0: 12s 1: 1s 2: 1s 3: 1s

Net0 RX: 6258bytes 39pkts 0err 0drop TX: 1227871bytes 8872pkts 0err 9983drop

VBD BlkBack 51713 [ca: 1] OO: 0 RD: 2 WR: 164 RSECT: 16 WSECT: 2792

From the Xen-tools toolbox comes xm, which has a fairly rich syntax:

helena:~# xm vcpu-list

Name ID VCPU CPU State Time(s) CPU Affinity

Domain-0 0 0 7 r-- 368.5 any cpu

Domain-0 0 1 1 -b- 154.9 any cpu

xennode-1.bic.mni.mcgill.ca 2 0 2 -b- 14.7 2

xennode-1.bic.mni.mcgill.ca 2 1 3 -b- 1.2 3

xennode-1.bic.mni.mcgill.ca 2 2 4 -b- 1.1 4

xennode-1.bic.mni.mcgill.ca 2 3 5 -b- 1.1 5

Another one is libvirt and related binaries (virsh help):

helena:~# virsh list

Id Name State

----------------------------------

0 Domain-0 running

2 xennode-1.bic.mni.mcgill.ca idle

helena:~# virsh dominfo xennode-1.bic.mni.mcgill.ca

Id: 2

Name: xennode-1.bic.mni.mcgill.ca

UUID: 7df57c76-8555-bd41-b9da-31054aef3521

OS Type: linux

State: idle

CPU(s): 4

CPU time: 21.6s

Max memory: 2097152 kB

Used memory: 2089984 kB

Persistent: no

Autostart: disable

Xen Virtual Block Devices (VBDs) and DRBD

There is a bug in /etc/xen/scripts/block that prevents /etc/xen/scripts/block-drbd from being run if you use DRBD VBDs. To fix it, just add a line XEN_SCRIPT_DIR=/etc/xen/scripts before the last 2 lines of the shell script. Even after making that change I find that the virtual drbd device stuff doesn’t work with Debian/Squeeze. It seems that the Xen packages are missing a hotplug device script.

In order to use a DRBD resource (<resource> is the resource name NOT the block device) as the virtual block device xvda1, you must add a line like the following to your Xen domU configuration:

disk = [ 'drbd:<resource>,xvda1,w' ]

See http://www.drbd.org/users-guide/ch-xen.html for more details. But suffice to say that It Just Doesn’t Work (TM) for Debian/Squeeze.

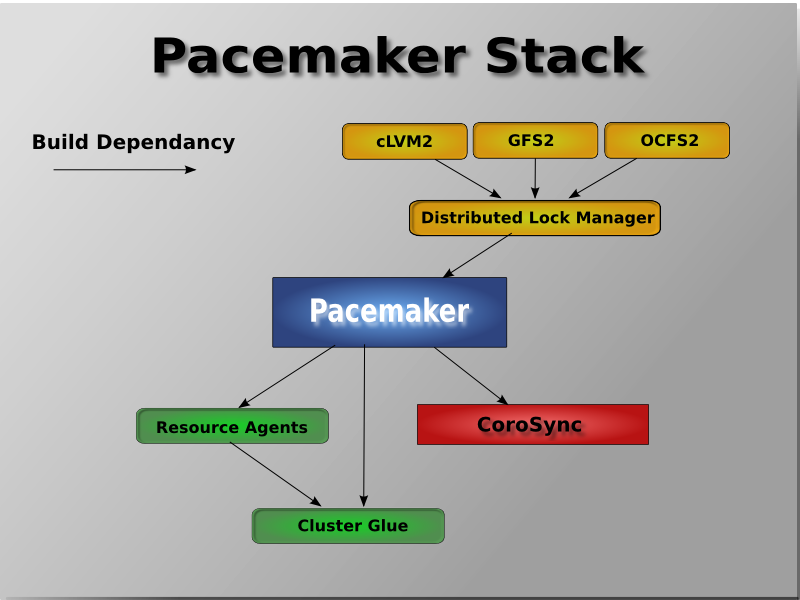

Corosync Installation and Configuration

First, what is Corosync?

Corosync is the first layer in the cluster stack (membership and messaging), Pacemaker is the second layer (cluster resource management), and services are on the third layer. We’ll cover Pacemaker later.

Create a new file /etc/apt/sources.list.d/pacemaker.list that contains:

deb http://people.debian.org/~madkiss/ha lenny main

Add the Madkiss key to your package system:

>~ apt-key adv --keyserver pgp.mit.edu --recv-key 1CFA3E8CD7145E30

If you omit this step you will get this error:

W: GPG error: http://people.debian.org lenny Release: The following signatures couldn't be verified because the public key is not available: NO_PUBKEY 1CFA3E8CD7145E30

Update the package list

>~ aptitude update

Installing the package pacemaker will install pacemaker with corosync. If you need openais later on, you can install it as a plugin in corosync. OpenAIS is needed for example for DLM or CLVM, but that’s beyond the scope of this howto.

>~ aptitude install pacemaker

Create an auth key for nodes communication

To create an authkey for corosync communication between your two nodes do this on the first node:

node1~: sudo corosync-keygen

This creates a key in /etc/corosync/authkey. If it’s too slow, that’s because there is not enough entropy; get the system active by running something like find / -name blah. You need to copy this file to the second node and put it in the /etc/corosync directory with the right permissions. So on the first node:

node1~: scp /etc/corosync/authkey node2:~/authkey

And on the second node:

node2~: sudo mv ~/authkey /etc/corosync/authkey

node2~: sudo chown root:root /etc/corosync/authkey

node2~: sudo chmod 400 /etc/corosync/authkey
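A quick way to confirm that the key ended up with the ownership and mode set above is to stat it. This is just a small sketch; the function name authkey_problems is mine, and the default path is the one used in these notes.

```python
import os
import stat


def authkey_problems(path="/etc/corosync/authkey"):
    """Return a list of problems with the key file's ownership and mode."""
    info = os.stat(path)
    problems = []
    # chown root:root -> uid 0 and gid 0
    if info.st_uid != 0 or info.st_gid != 0:
        problems.append("owner is not root:root")
    # chmod 400 -> readable by the owner only
    mode = stat.S_IMODE(info.st_mode)
    if mode != 0o400:
        problems.append(f"mode is {mode:o}, expected 400")
    return problems


if __name__ == "__main__":
    if os.path.exists("/etc/corosync/authkey"):
        print(authkey_problems() or "authkey looks fine")
```

Run it on both nodes; an empty list means the file matches what the chown/chmod commands were supposed to produce.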

Make core files with exec name and pid’s appended on both nodes:

node1~: echo 1 > /proc/sys/kernel/core_uses_pid

node1~: echo core.%e.%p > /proc/sys/kernel/core_pattern

and make the change permanent on both nodes with:

node1~: cat /etc/sysctl.d/core_uses_pid.conf

kernel.core_uses_pid = 1

kernel.core_pattern = core.%e.%p

Edit corosync config file

Most of the options in the /etc/corosync/corosync.conf file are ok to start with, but you must check that corosync can communicate so make sure to adjust the interface section:

(:source:)

interface {

# The following values need to be set based on your environment

ringnumber: 0

bindnetaddr: 192.168.1.0

mcastaddr: 226.94.1.1

mcastport: 5405

}

(:sourceend:)

Adjust bindnetaddr to your local subnet: if you have configured the IP 10.0.0.1 for the first node and 10.0.0.2 for the second node, set bindnetaddr to 10.0.0.0. In my case the communication ring is redundant: one link goes from IP 132.206.178.60 to IP 132.206.178.61 (implying bindnetaddr set to 132.206.178.0) and another link, on a private network, goes from IP 192.168.1.18 to 192.168.1.19 with the associated bindnetaddr set to 192.168.1.0.
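The bindnetaddr value is simply the network address of the interface, i.e. the IP ANDed with its netmask. A minimal sketch with the standard ipaddress module, assuming a /24 netmask (255.255.255.0) on both rings; the function name is my own:

```python
import ipaddress


def bindnetaddr(ip, netmask):
    """Return the network address to put in corosync's bindnetaddr."""
    interface = ipaddress.ip_interface(f"{ip}/{netmask}")
    return str(interface.network.network_address)


if __name__ == "__main__":
    for node_ip in ("132.206.178.60", "192.168.1.18", "10.0.0.1"):
        print(node_ip, "->", bindnetaddr(node_ip, "255.255.255.0"))
```

With a netmask other than /24 the result changes accordingly, which is exactly the case where guessing by hand tends to go wrong.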

Corosync redundant dual ring config

One ring on public network 132.206.178.0/24 and the other ring on private network 192.168.1.0/24. /etc/corosync/corosync.conf:

(:source:)

totem {

version: 2

token: 3000

token_retransmits_before_loss_const: 10

join: 60

consensus: 4500

vsftype: none

max_messages: 20

clear_node_high_bit: yes

secauth: off

threads: 0

rrp_mode: active

interface {

ringnumber: 0

bindnetaddr: 132.206.178.0

mcastaddr: 226.94.1.0

mcastport: 5400

}

interface {

ringnumber: 1

bindnetaddr: 192.168.1.0

mcastaddr: 226.94.1.1

mcastport: 5401

}

}

amf {

mode: disabled

}

service {

ver: 0

name: pacemaker

}

aisexec {

user: root

group: root

}

logging {

fileline: off

to_stderr: yes

to_logfile: no

to_syslog: yes

syslog_facility: daemon

debug: off

timestamp: on

logger_subsys {

subsys: AMF

debug: off

tags: enter|leave|trace1|trace2|trace3|trace4|trace6

}

}

(:sourceend:)

Now that you have configured both nodes you can start the cluster on both sides.

Edit /etc/default/corosync to enable corosync at startup (START=yes) and start the services:

node1~: sudo /etc/init.d/corosync start

Starting corosync daemon: corosync.

node2~: sudo /etc/init.d/corosync start

Starting corosync daemon: corosync.

The following processes should appear on both nodes:

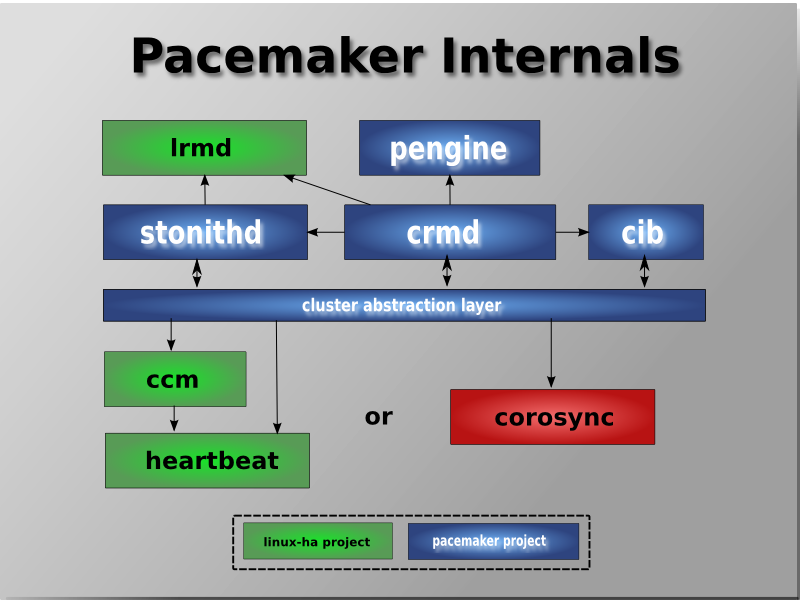

root /usr/sbin/corosync

root \_ /usr/lib/heartbeat/stonithd

112 \_ /usr/lib/heartbeat/cib

root \_ /usr/lib/heartbeat/lrmd

112 \_ /usr/lib/heartbeat/attrd

112 \_ /usr/lib/heartbeat/pengine

112 \_ /usr/lib/heartbeat/crmd

stonithd -> shoot the other node in the head daemon

cib -> cluster information base

lrmd -> local resource manager daemon

pengine -> policy engine

crmd -> cluster resource manager daemon

Corosync Status and Ring Management

The ring state is displayed using the command:

node1:~# corosync-cfgtool -s

Printing ring status.

Local node ID 1018351236

RING ID 0

id = 132.206.178.60

status = ring 0 active with no faults

RING ID 1

id = 192.168.1.19

status = ring 1 active with no faults

To re-enable a ring that was faulty but has since been fixed, use the command corosync-cfgtool -r.

There is an annoying bug in corosync-1.2.x (supposed to be fixed in the upcoming Weaver’s Needle release, a.k.a. corosync 2.y.z): if the ring doesn’t initialize correctly there is no way out short of a reboot. Restarting the network just doesn’t work as the corosync processes simply hang:

~# corosync-cfgtool -s

Could not initialize corosync configuration API error 6

What happens is that corosync cannot bind to the network socket(s) and everything becomes fubar. Some info can be found here https://lists.linux-foundation.org/pipermail/openais/2011-January/015626.html

IPMI Configuration for STONITH

In order to use the IPMI resource agent for stonith one must first install some packages on the cluster nodes.

puck:~# apt-get update

puck:~# apt-get install ipmitool openipmi

To have the IPMI device handler modules loaded at boot, add the following lines to /etc/modules:

# /etc/modules: kernel modules to load at boot time.

#

# This file contains the names of kernel modules that should be loaded

# at boot time, one per line. Lines beginning with "#" are ignored.

# Parameters can be specified after the module name.

ipmi_si

ipmi_devintf

To load the modules right away, run modprobe ipmi_si and modprobe ipmi_devintf. If successful, a new device /dev/ipmi<X> should be created that allows in-band communications with the BMC.

The BMC network config is done at the BIOS level, using a static IP address along with broadcast, gateway and netmask (using DHCP is possible). Login through the web interface using the manufacturer default username and password ADMIN/ADMIN. Change the password and create another user called root with administrative privileges.

Use ipmitool command to attempt a connection to the remote node BMC, user root and password in file /root/ipmipass:

puck:~# ipmitool -I lanplus -H 192.168.1.17 -U root -f /root/ipmipass sel

SEL Information

Version : 1.5 (v1.5, v2 compliant)

Entries : 0

Free Space : 10240 bytes

Percent Used : 0%

Last Add Time : Not Available

Last Del Time : Not Available

Overflow : false

Supported Cmds : 'Reserve' 'Get Alloc Info'

# of Alloc Units : 512

Alloc Unit Size : 20

# Free Units : 512

Largest Free Blk : 512

Max Record Size : 2

puck:~# ipmitool -I lanplus -H 192.168.1.17 -U root -f ./ipmipass sdr

FAN 1 | 11881 RPM | ok

FAN 2 | 11881 RPM | ok

FAN 3 | 11881 RPM | ok

FAN 4 | 10404 RPM | ok

FAN 5 | disabled | ns

FAN 6 | disabled | ns

CPU1 Vcore | 0.95 Volts | ok

CPU2 Vcore | 0.96 Volts | ok

+1.5 V | 1.51 Volts | ok

+5 V | 5.09 Volts | ok

+5VSB | 5.09 Volts | ok

+12 V | 12.14 Volts | ok

-12 V | -12.29 Volts | ok

+3.3VCC | 3.31 Volts | ok

+3.3VSB | 3.26 Volts | ok

VBAT | 3.24 Volts | ok

CPU1 Temp | 0 unspecified | ok

CPU2 Temp | 0 unspecified | ok

System Temp | 29 degrees C | ok

Chassis Intru | 0 unspecified | ok

PS Status | 0 unspecified | nc

puck:~# ipmitool -I lanplus -H 192.168.1.17 -U root -f /root/ipmipass chassis power status

Chassis Power is on
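If you want to script the last check (for instance to confirm that a fenced node really went down), the chassis power status output is easy to interpret. A small sketch, with a function name of my own choosing; it only parses text you pass in and does not call ipmitool itself:

```python
def chassis_power_is_on(output):
    """Interpret the output of `ipmitool ... chassis power status`."""
    text = output.strip().lower()
    if text.endswith("chassis power is on"):
        return True
    if text.endswith("chassis power is off"):
        return False
    # Anything else (timeouts, authentication errors) is treated as unknown.
    raise ValueError(f"unrecognized ipmitool output: {output!r}")


if __name__ == "__main__":
    print(chassis_power_is_on("Chassis Power is on\n"))
```

Raising on unknown output is deliberate: a BMC that cannot be reached should not be mistaken for a node that is powered off.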

Pacemaker/CRM (Cluster Resource Manager) Configuration

Cluster Architecture and Internals.

The Pacemaker architecture is described in the Pacemaker documentation available at http://www.clusterlabs.org/doc/en-US/Pacemaker/1.0/html/Pacemaker_Explained/s-intro-architecture.html.

A quick view of the stack (from the doc mentioned above):

The pacemaker internals consist of four key components:

- CIB (aka. Cluster Information Base)

- CRMd (aka. Cluster Resource Management daemon)

- PEngine (aka. PE or Policy Engine)

- STONITHd

Initial Configuration

To configure the cluster stack one can either go the brutal way with cibadmin (must speak/grok XML) or use the crm shell command line interface.

See http://www.clusterlabs.org/wiki/DRBD_HowTo_1.0 and http://www.clusterlabs.org/doc/crm_cli.html

http://www.clusterlabs.org/doc/en-US/Pacemaker/1.1/html/Pacemaker_Explained/index.html

First, one must disable a few services that are started automatically at boot time by the LSB init scripts. This is needed as the cluster will from now on manage the start/stop/restart and monitoring of those services and having them started at boot time before pacemaker has had a chance to get hold of them can cause confusion and even bring down the cluster.

Strictly speaking, disabling corosync at boot time is not really necessary. But the mini-me paranoid inside me wants, whenever a node has rebooted in an HA cluster, to go through the logs and find out why, and only when satisfied that all is good will I manually restart the cluster stack.

puck:~# update-rc.d -f corosync remove

puck:~# update-rc.d -f drbd remove

puck:~# update-rc.d -f o2cb remove

puck:~# update-rc.d -f xendomains remove

You can always reinstate them with update-rc.d [-n] <name> defaults.

node1 -> puck

node2 -> helena

After starting corosync on both systems one should see something like this (dates and versions will be different though, this is just from an old run of mine):

puck:~# crm_mon --one-shot -V

crm_mon[7363]: 2009/07/26_22:05:40 ERROR: unpack_resources: No STONITH resources have been defined

crm_mon[7363]: 2009/07/26_22:05:40 ERROR: unpack_resources: Either configure some or disable STONITH with the stonith-enabled option

crm_mon[7363]: 2009/07/26_22:05:40 ERROR: unpack_resources: NOTE: Clusters with shared data need STONITH to ensure data integrity

# crm_mon -1

============

Last updated: Wed Oct 20 16:39:53 2010

Stack: openais

Current DC: helena - partition with quorum

Version: 1.0.9-74392a28b7f31d7ddc86689598bd23114f58978b

2 Nodes configured, 2 expected votes

0 Resources configured.

============

Online: [ puck helena ]

Cluster Options and Properties.

First, we set up some cluster options that will affect its behaviour as a whole.

In a two-node cluster, the concept of quorum does not apply. It is thus safe to set Pacemaker’s “no-quorum-policy” to ignore loss of quorum.

We also set a default stickiness greater than zero so that resources have a tendency to ‘stick’ to a node. This avoids resources being bounced around when a node is restarted/rebooted or a resource is unmigrated.

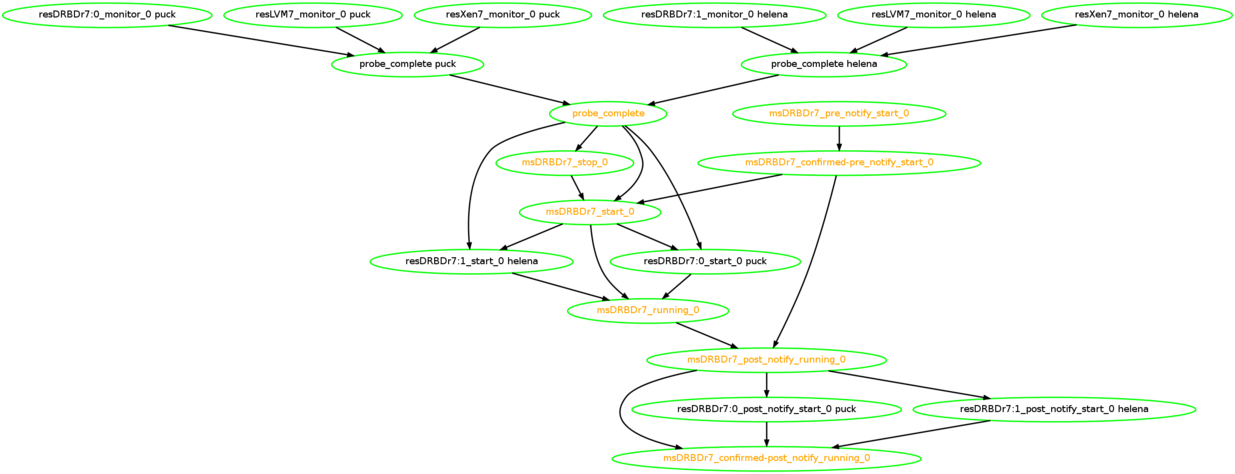

The Policy Engine creates files in /var/lib/pengine/pe-{input,warn,error}.bz2. Those are the transition files used by the PE to plot a path to a new state when events are detected. By default, they accumulate indefinitely. With one transition check every 15 minutes, keeping 672 files of each series covers one week (4 per hour * 24 hours * 7 days). They are useful in case an hb_report has to be submitted to the Pacemaker mailing list.

Finally we disable stonith (just for the moment — it will be enabled later on).

puck:~# crm configure

crm(live)configure# property no-quorum-policy=ignore

crm(live)configure# property default-resource-stickiness=100

crm(live)configure# property pe-input-series-max="672"

crm(live)configure# property pe-error-series-max="672"

crm(live)configure# property pe-warn-series-max="672"

crm(live)configure# property stonith-enabled=false

crm(live)configure# verify

crm(live)configure# commit

crm(live)configure# exit

bye
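The value 672 used for the pe-*-series-max properties is just the number of 15-minute transition checks in a week. A tiny sketch of that arithmetic, in case you want a different retention period (the function name is hypothetical):

```python
def pe_series_max(check_interval_minutes, days_to_keep):
    """Number of Policy Engine files needed to cover the given period."""
    checks_per_day = 24 * 60 // check_interval_minutes
    return checks_per_day * days_to_keep


if __name__ == "__main__":
    # One check every 15 minutes, kept for 7 days.
    print(pe_series_max(15, 7))
```

For example, keeping a month of history at the same interval would need pe_series_max(15, 30) files per series.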

Actually, for a 2-node cluster, failure to disable quorum might lead to a stonith deathmatch when stonith is enabled. See the entry How STONITH works below.

LVM, DRBD and Xen

Next, define the resources for LVM, DRBD and Xen guest using the crm shell.

puck:~# crm configure edit

and stick the following in it:

(:source:)

primitive resDRBDr0 ocf:linbit:drbd params drbd_resource="r0" op start interval="0" timeout="240s" op stop interval="0" timeout="120s" op monitor interval="20s" role="Master" timeout="240s" meta migration-threshold="3" failure-timeout="60s"

primitive resLVM0 ocf:heartbeat:LVM params volgrpname="vg0" op monitor interval="10s" timeout="60s" op start interval="0" timeout="60s" op stop interval="0" timeout="60s" meta migration-threshold="3" failure-timeout="60s"

primitive resXen0 ocf:heartbeat:Xen params xmfile="/etc/xen/matsya.bic.mni.mcgill.ca.cfg" name="matsya.bic.mni.mcgill.ca" op monitor interval="20s" timeout="60s" op start interval="0" timeout="90s" op stop interval="0" timeout="90s" meta migration-threshold="3" failure-timeout="60s" target-role="Stopped"

ms msDRBDr0 resDRBDr0 meta notify="true" interleave="true"

colocation colLVM0-on-DRBDMaster0 inf: resLVM0 msDRBDr0:Master

colocation colXen0-on-LVM0 inf: resXen0 resLVM0

order ordDRBDr0-before-LVM0 inf: msDRBDr0:promote resLVM0:start

order ordLVM0-before-Xen0 inf: resLVM0 resXen0

(:sourceend:)

A few notes regarding the above.

- Modify the LVM Volume Group, the drbd resource name and the Xen config name, etc, according to your local setup.

- The resource meta property migration-threshold=N will move the resource away to a new node after N failures. A default value is not set by the cluster so it has to be set explicitly.

- The resource meta property failure-timeout="60s" sets a timeout to expire the above constraint. So in this case the node will be able to run a failed resource again after 60s. There is no default value for this meta property so again we must set it explicitly.

- The Xen resource is created in a Stopped state and has explicit timeouts set for migration to or away from a node. Once we are done setting up the CIB we can start the resource with crm resource start resXen0.

The bits:

(:source:)

ms msDRBDr1 resDRBDr1 meta notify="true" interleave="true" target-role="Started"

(:sourceend:)

creates a Master/Slave resource for the DRBD primitive.

- The resources must be started in a specific order and colocation constraints between them must be established:

- The LVM Volume Group can only be activated if the drbd block device is in primary mode.

- Xen DomU can run if and only if it has access to its disk images on the volume group.

- The drbd resource must first be promoted to Master (primary) before LVM scans for volume groups.

- The LVM resource must be started (ie, made available by scanning for VG signatures) before the Xen DomU can be started.
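The order constraints above form a small dependency chain. As an illustration only (this is not how Pacemaker's Policy Engine is implemented), the resulting start sequence can be derived with a topological sort; the action names mirror the resource names used in this section, and the helper name start_order is my own. It needs Python 3.9 or later for graphlib.

```python
from graphlib import TopologicalSorter


def start_order(order_constraints):
    """Return one valid execution order for (first, then) ordering pairs."""
    graph = TopologicalSorter()
    for first, then in order_constraints:
        # "then" may only run once "first" has completed.
        graph.add(then, first)
    return list(graph.static_order())


if __name__ == "__main__":
    constraints = [
        ("msDRBDr0:promote", "resLVM0:start"),  # DRBD primary before LVM
        ("resLVM0:start", "resXen0:start"),     # LVM active before the domU
    ]
    print(" -> ".join(start_order(constraints)))
```

A cycle in the constraints makes graphlib raise CycleError, which is a cheap way to catch contradictory ordering rules before loading them into the CIB.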

Network connectivity and ping

After that one sets up a ping resource to monitor network connectivity, clone it on both nodes and restrict the Xen guests to run on a node if and only if the ping resource can connect to a configured gateway — 132.206.178.1 in our case.

(:source:)

primitive resPing ocf:pacemaker:ping params dampen="5s" multiplier="100" host_list="132.206.178.1" attempts="3" op monitor interval="20s" timeout="60s" op start interval="0" timeout="65s" op stop interval="0" timeout="30s"

clone cloPing resPing meta globally-unique="false"

location locPing resXen1 rule -inf: not_defined pingd or pingd lte 0

(:sourceend:)
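To make the location rule concrete: the ping agent publishes a node attribute (pingd here) equal to the multiplier times the number of hosts in host_list that answered, and the rule bans the guest from any node where that attribute is missing or not positive. A small sketch of that logic, with function names of my own:

```python
def pingd_value(reachable_hosts, multiplier=100):
    """Value the ping agent would publish for the node attribute."""
    return reachable_hosts * multiplier


def xen_allowed(node_attributes):
    """Mirror of: rule -inf: not_defined pingd or pingd lte 0."""
    pingd = node_attributes.get("pingd")
    banned = pingd is None or pingd <= 0
    return not banned


if __name__ == "__main__":
    print(xen_allowed({"pingd": pingd_value(1)}))  # gateway answers
    print(xen_allowed({"pingd": pingd_value(0)}))  # gateway unreachable
    print(xen_allowed({}))                         # attribute not set yet
```

With a single host in host_list the attribute is either 0 or 100, so the rule boils down to "the gateway must answer".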

STONITH and IPMI

Finally one must have a way of fencing a node in a dual-primary cluster setup in case it behaves strangely or doesn’t respond. Failure to configure a fencing device will most likely lead to data corruption between the nodes (a case of split-brain: both nodes are primary but the data is not in a consistent state) and manual intervention will be necessary. See Manually Resolving a Split-Brain Situation for instructions on how to do that, God forbid.

The split-brain situation is avoided by using a stonith resource configured to use IPMI on both nodes. Once the primitives are defined one sets up location constraints such that each node has one instance of the stonith resource running, so that it can shoot the other offending node in case of failure (ie, a resource won’t stop properly, a node seems to be available but doesn’t respond, etc). One then enables the cluster property stonith-enabled="true" along with the option stonith-action="poweroff" to power off a node when stonith’ed rather than reset it (the default).

(:source:)

primitive resStonithHelena stonith:external/ipmi params hostname="helena" ipaddr="192.168.1.17" userid="root" passwd="********" interface="lanplus" op start interval="0" timeout="60s" op stop interval="0" timeout="60s" op monitor interval="3600s" timeout="60"

primitive resStonithPuck stonith:external/ipmi params hostname="puck" ipaddr="192.168.1.16" userid="root" passwd="********" interface="lanplus" op start interval="0" timeout="60s" op stop interval="0" timeout="60s" op monitor interval="3600s" timeout="60"

location locStonithHelena resStonithHelena -inf: helena

location locStonithPuck resStonithPuck -inf: puck

property stonith-enabled="true"

property stonith-action="poweroff"

(:sourceend:)
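The one thing that must hold for this layout is that a stonith resource never runs on the node it is meant to shoot. Here is a small sketch of that check over a hand-written description of the constraints; the data structures and the function name are made up for illustration and are not read from the CIB.

```python
def misplaced_fencers(fence_targets, banned_nodes):
    """Return stonith resources that are NOT banned from the node they fence.

    fence_targets: {stonith resource: node it powers off}
    banned_nodes:  {stonith resource: set of nodes with a -inf location rule}
    """
    return sorted(
        resource
        for resource, target in fence_targets.items()
        if target not in banned_nodes.get(resource, set())
    )


if __name__ == "__main__":
    targets = {"resStonithHelena": "helena", "resStonithPuck": "puck"}
    bans = {"resStonithHelena": {"helena"}, "resStonithPuck": {"puck"}}
    print(misplaced_fencers(targets, bans) or "every fencer is kept off its own target")
```

A misspelled resource name in a location constraint shows up here as a missing ban, which is exactly the kind of mistake that otherwise only surfaces when fencing is needed.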

Final Setup

After all this, here’s a CIB with the following properties:

- 4 Xen guests, each running on disk devices located on volume groups, sitting on top of DRBD block devices, which in turn are sitting on top of LVMs.

- Active/Passive cluster, with DRBD in primary/secondary mode (no live migration).

- Stonith is enabled, using IPMI, to poweroff offending nodes.

- Ping resources are used to restrict guests to run on nodes with outside network connectivity.

- One guest has a block device attached, used as a disk for web content.

- Policy Engine files are restricted to 4*24*7 = 672.

~# crm configure show

node helena

node puck

primitive resDRBDr-www ocf:linbit:drbd \

params drbd_resource="r-www" \

op start interval="0" timeout="240s" \

op stop interval="0" timeout="120s" \

op monitor interval="20s" role="Master" timeout="240s" \

meta migration-threshold="3" failure-timeout="60s"

primitive resDRBDr0 ocf:linbit:drbd \

params drbd_resource="r0" \

op start interval="0" timeout="240s" \

op stop interval="0" timeout="120s" \

op monitor interval="20s" role="Master" timeout="240s" \

meta migration-threshold="3" failure-timeout="60s"

primitive resDRBDr1 ocf:linbit:drbd \

params drbd_resource="r1" \

op start interval="0" timeout="240s" \

op stop interval="0" timeout="120s" \

op monitor interval="20s" role="Master" timeout="240s" \

meta migration-threshold="3" failure-timeout="60s"

primitive resDRBDr2 ocf:linbit:drbd \

params drbd_resource="r2" \

op start interval="0" timeout="240s" \

op stop interval="0" timeout="120s" \

op monitor interval="20s" role="Master" timeout="240s" \

meta migration-threshold="3" failure-timeout="60s"

primitive resDRBDr3 ocf:linbit:drbd \

params drbd_resource="r3" \

op start interval="0" timeout="240s" \

op stop interval="0" timeout="120s" \

op monitor interval="20s" role="Master" timeout="240s" \

meta migration-threshold="3" failure-timeout="60s"

primitive resLVM0 ocf:heartbeat:LVM \

params volgrpname="vg0" \

op monitor interval="10s" timeout="60s" \

op start interval="0" timeout="60s" \

op stop interval="0" timeout="60s" \

meta migration-threshold="3" failure-timeout="60s"

primitive resLVM1 ocf:heartbeat:LVM \

params volgrpname="vg1" \

op monitor interval="10s" timeout="60s" \

op start interval="0" timeout="60s" \

op stop interval="0" timeout="60s" \

meta migration-threshold="3" failure-timeout="60s"

primitive resLVM2 ocf:heartbeat:LVM \

params volgrpname="vg2" \

op monitor interval="10s" timeout="60s" \

op start interval="0" timeout="60s" \

op stop interval="0" timeout="60s" \

meta migration-threshold="3" failure-timeout="60s" target-role="Started"

primitive resLVM3 ocf:heartbeat:LVM \

params volgrpname="vg3" \

op monitor interval="10s" timeout="60s" \

op start interval="0" timeout="60s" \

op stop interval="0" timeout="60s" \

meta migration-threshold="3" failure-timeout="60s"

primitive resPing ocf:pacemaker:ping \

params dampen="5s" multiplier="100" host_list="132.206.178.1" attempts="3" \

op monitor interval="20s" timeout="60s" \

op start interval="0" timeout="65s" \

op stop interval="0" timeout="30s"

primitive resStonithHelena stonith:external/ipmi \

params hostname="helena" ipaddr="192.168.1.17" userid="root" passwd="********" interface="lanplus" \

op start interval="0" timeout="60s" \

op stop interval="0" timeout="60s" \

op monitor interval="3600s" timeout="60"

primitive resStonithPuck stonith:external/ipmi \

params hostname="puck" ipaddr="192.168.1.16" userid="root" passwd="********" interface="lanplus" \

op start interval="0" timeout="60s" \

op stop interval="0" timeout="60s" \

op monitor interval="3600s" timeout="60"

primitive resXen0 ocf:heartbeat:Xen \

params xmfile="/etc/xen/matsya.bic.mni.mcgill.ca.cfg" name="matsya.bic.mni.mcgill.ca" \

op monitor interval="20s" timeout="60s" \

op start interval="0" timeout="90s" \

op stop interval="0" timeout="90s" \

meta migration-threshold="3" failure-timeout="60s"

primitive resXen1 ocf:heartbeat:Xen \

params xmfile="/etc/xen/kurma.bic.mni.mcgill.ca.cfg" name="kurma.bic.mni.mcgill.ca" \

op monitor interval="20s" timeout="60s" \

op start interval="0" timeout="90s" \

op stop interval="0" timeout="90s" \

meta migration-threshold="3" failure-timeout="60s" target-role="Started"

primitive resXen2 ocf:heartbeat:Xen \

params xmfile="/etc/xen/varaha.bic.mni.mcgill.ca.cfg" name="varaha.bic.mni.mcgill.ca" \

op monitor interval="20s" timeout="60s" \

op start interval="0" timeout="90s" \

op stop interval="0" timeout="90s" \

meta migration-threshold="3" failure-timeout="60s" target-role="Started" is-managed="true"

primitive resXen3 ocf:heartbeat:Xen \

params xmfile="/etc/xen/narasimha.bic.mni.mcgill.ca.cfg" name="narasimha.bic.mni.mcgill.ca" \

op monitor interval="20s" timeout="60s" \

op start interval="0" timeout="90s" \

op stop interval="0" timeout="90s" \

meta migration-threshold="3" failure-timeout="60s"

group grpDRBDweb resDRBDr2 resDRBDr-www

ms msDRBDr0 resDRBDr0 \

meta notify="true" interleave="true"

ms msDRBDr1 resDRBDr1 \

meta notify="true" interleave="true"

ms msDRBDr2 grpDRBDweb \

meta notify="true" interleave="true" target-role="Started"

ms msDRBDr3 resDRBDr3 \

meta notify="true" interleave="true"

clone cloPing resPing \

meta globally-unique="false"

location locPing0 resXen0 \

rule $id="locPing-rule" -inf: not_defined pingd or pingd lte 0

location locPing1 resXen1 \

rule $id="locPing1-rule" -inf: not_defined pingd or pingd lte 0

location locPing2 resXen2 \

rule $id="locPing2-rule" -inf: not_defined pingd or pingd lte 0

location locPing3 resXen3 \

rule $id="locPing3-rule" -inf: not_defined pingd or pingd lte 0

location locStonithHelena resStonithHelena -inf: helena

location locStonithPuck resStonithPuck -inf: puck

colocation colLVM0-on-DRBDMaster0 inf: resLVM0 msDRBDr0:Master

colocation colLVM1-on-DRBDMaster1 inf: resLVM1 msDRBDr1:Master

colocation colLVM2-on-DRBDMaster2 inf: resLVM2 msDRBDr2:Master

colocation colLVM3-on-DRBDMaster3 inf: resLVM3 msDRBDr3:Master

colocation colXen0-on-LVM0 inf: resXen0 resLVM0

colocation colXen1-on-LVM1 inf: resXen1 resLVM1

colocation colXen2-on-LVM2 inf: resXen2 resLVM2

colocation colXen3-on-LVM3 inf: resXen3 resLVM3

order ordDRBDr0-before-LVM0 inf: msDRBDr0:promote resLVM0:start

order ordDRBDr1-before-LVM1 inf: msDRBDr1:promote resLVM1:start

order ordDRBDr2-before-LVM2 inf: msDRBDr2:promote resLVM2:start

order ordDRBDr3-before-LVM3 inf: msDRBDr3:promote resLVM3:start

order ordLVM0-before-Xen0 inf: resLVM0 resXen0

order ordLVM1-before-Xen1 inf: resLVM1 resXen1

order ordLVM2-before-Xen2 inf: resLVM2 resXen2

order ordLVM3-before-Xen3 inf: resLVM3 resXen3

property $id="cib-bootstrap-options" \

dc-version="1.0.9-74392a28b7f31d7ddc86689598bd23114f58978b" \

cluster-infrastructure="openais" \

expected-quorum-votes="2" \

no-quorum-policy="ignore" \

default-resource-stickiness="100" \

pe-input-series-max="672" \

pe-error-series-max="672" \

pe-warn-series-max="672" \

stonith-enabled="true" \

last-lrm-refresh="1315482708" \

stonith-action="poweroff"
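The colocation and order constraints above repeat one pattern per guest: DRBD master first, then the LVM volume group, then the Xen guest. As a sketch, the helper below (gen_guest_constraints is an illustrative name, not a crm command) prints the four constraints for guest number N using the naming scheme of this CIB. It only prints text and does not touch the cluster.

```shell
#!/bin/sh
# Print the colocation and order constraints for guest number $1,
# following the naming scheme used in the CIB above.
gen_guest_constraints() {
    i="$1"
    cat <<EOF
colocation colLVM${i}-on-DRBDMaster${i} inf: resLVM${i} msDRBDr${i}:Master
colocation colXen${i}-on-LVM${i} inf: resXen${i} resLVM${i}
order ordDRBDr${i}-before-LVM${i} inf: msDRBDr${i}:promote resLVM${i}:start
order ordLVM${i}-before-Xen${i} inf: resLVM${i} resXen${i}
EOF
}

# The four guests of this cluster.
for i in 0 1 2 3; do
    gen_guest_constraints "$i"
done
```

Comparing the printed lines with the output of crm configure show is a quick way to spot a guest that is missing one of its constraints.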

Colocation Craziness

This doesn’t belong here but for the moment it will suffice. This is just for me to remember to look at the colocation semantics more carefully. This is from the pacemaker mailing list gurus (and it’s really counterintuitive, to say the least).

> If you ever consider do something about it, here is another thing that
> can be lived with, but is non-intuitive.
>
> 1) colocation c1 inf: A B
>
> the most significant is B (if B is stopped nothing else will be running)
>
> 2) colocation c2 inf: A B C
>
> most significant - A
>
> 3) colocation c3 inf: ( A B ) C
>
> most significant - C
>
> 4) colocation c4 inf: ( A B ) C D
>
> most significant - C again
>
> I am trying to find a logic to remember this, but fails so far :)

No wonder. I have a patch ready to fix this, but have never been happy with it. Resources within a resource set have opposite semantics to 2-rsc collocations. But two adjacent resource sets are again as 2-rsc collocations, i.e. the left set follows the right set. Now, just to add to the confusion, in the example 4) above it is not very obvious that there are two sets, and that the second set is "C D". So, 4) should be equivalent to these 5 2-rsc collocations:

A C

B C

A D

B D

D C

What is the difference (if any) of the above to

A D

B D

D C

Well, we leave that to the interested as an exercise ;-)

More info on colocation semantics

colocation foo_on_bar inf: foo bar means that:

1. foo is to run wherever bar runs;

2. if bar is not started, then foo won't run at all;

3. if bar fails and cannot recover, then foo will shut down;

4. if foo is not started, bar will of course run;

5. if foo fails and cannot recover, bar will continue to run.
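To keep the direction of the dependency straight, here is a toy model of the five points in plain shell. It is not Pacemaker code and foo_placement is a made-up name: it only encodes that foo’s placement is a function of bar’s, and never the other way round. In the CIB above, colXen0-on-LVM0 inf: resXen0 resLVM0 has resXen0 in the role of foo and resLVM0 in the role of bar.

```shell
#!/bin/sh
# Toy model of "colocation foo_on_bar inf: foo bar"; not Pacemaker code.
# $1 = node where bar is running, or empty if bar is stopped or failed.
# Prints the only node where foo is allowed to run, or "stopped".
foo_placement() {
    bar_node="$1"
    if [ -z "$bar_node" ]; then
        echo "stopped"        # points 2 and 3: without bar, foo does not run
    else
        echo "$bar_node"      # point 1: foo runs wherever bar runs
    fi
}

# bar's placement never takes foo's state as an input (points 4 and 5),
# so there is deliberately no bar_placement function depending on foo.
foo_placement helena    # bar runs on helena
foo_placement ""        # bar is stopped
```

Run as is, this prints helena and then stopped.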

Cluster Resource Management

The full-blown syntax is explained in the pacemaker documentation at http://www.clusterlabs.org/doc/en-US/Pacemaker/1.0/html/Pacemaker_Explained/s-moving-resource.html

The exit codes of resource agents are explained in http://refspecs.linuxfoundation.org/LSB_4.1.0/LSB-Core-generic/LSB-Core-generic/iniscrptact.html for the LSB-compliant RAs and in http://www.linux-ha.org/wiki/OCF_Resource_Agents for the OCF agents.

See http://www.linux-ha.org/doc/dev-guides/ra-dev-guide.html for info on how to write (and test!) OCF-compliant RAs.

The main tool to manage a pacemaker cluster through a command line interface is the Cluster Resource Manager shell, or crm. There are graphical interfaces out there but they are finicky at times. One that looks promising is lcmc (Linux Cluster Manager Console), a Java GUI that can configure, manage and visualize Linux clusters. It requires Sun (Oracle, yuck!) Java 6 but can work on other JREs, or so they say. In the following I’ll use crm.

Cluster Status

crm

Use the command crm_mon to query the cluster status along with resource states, etc. The flag -1 gives a one-shot view of the cluster state. You can also group the resources per node (--group-by-node) and show the inactive ones (not shown by default) with --inactive.

puck:~# crm_mon --group-by-node --inactive -1

============

Last updated: Tue Sep 13 14:32:49 2011

Stack: openais

Current DC: helena - partition with quorum

Version: 1.0.9-74392a28b7f31d7ddc86689598bd23114f58978b

2 Nodes configured, 2 expected votes

15 Resources configured.

============

Node puck: online

resStonithHelena (stonith:external/ipmi) Started

resDRBDr1:1 (ocf::linbit:drbd) Slave

resDRBDr3:1 (ocf::linbit:drbd) Slave

resPing:0 (ocf::pacemaker:ping) Started

resDRBDr0:1 (ocf::linbit:drbd) Slave

resDRBDr-www:1 (ocf::linbit:drbd) Slave

resDRBDr2:1 (ocf::linbit:drbd) Slave

Node helena: online

resStonithPuck (stonith:external/ipmi) Started

resDRBDr-www:0 (ocf::linbit:drbd) Master

resDRBDr1:0 (ocf::linbit:drbd) Master

resDRBDr2:0 (ocf::linbit:drbd) Master

resDRBDr3:0 (ocf::linbit:drbd) Master

resLVM1 (ocf::heartbeat:LVM) Started

resXen2 (ocf::heartbeat:Xen) Started

resLVM2 (ocf::heartbeat:LVM) Started

resLVM3 (ocf::heartbeat:LVM) Started

resXen3 (ocf::heartbeat:Xen) Started

resXen1 (ocf::heartbeat:Xen) Started

resDRBDr0:0 (ocf::linbit:drbd) Master

resXen0 (ocf::heartbeat:Xen) Started

resPing:1 (ocf::pacemaker:ping) Started

resLVM0 (ocf::heartbeat:LVM) Started

Inactive resources:

The crm shell can also show the cluster status, with crm status.
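With several DRBD resources the one-shot output gets long, and often the only question is which node holds the Master roles. A minimal sketch, assuming the output layout shown above (a "Node <name>: online" line followed by that node’s resources); masters_by_node is a made-up helper name, not part of pacemaker.

```shell
#!/bin/sh
# Print "<node> <resource>" for every resource in the Master role,
# reading `crm_mon -1 --group-by-node` output on stdin.
masters_by_node() {
    awk '
        $1 == "Node"    { node = $2; sub(/:$/, "", node); next }
        $NF == "Master" { print node, $1 }
    '
}

# Sample lines copied from the output above; on a live node use:
#   crm_mon -1 --group-by-node | masters_by_node
masters_by_node <<'EOF'
Node puck: online
resDRBDr1:1 (ocf::linbit:drbd) Slave
Node helena: online
resDRBDr1:0 (ocf::linbit:drbd) Master
resLVM1 (ocf::heartbeat:LVM) Started
EOF
```

On the sample lines this prints helena resDRBDr1:0.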

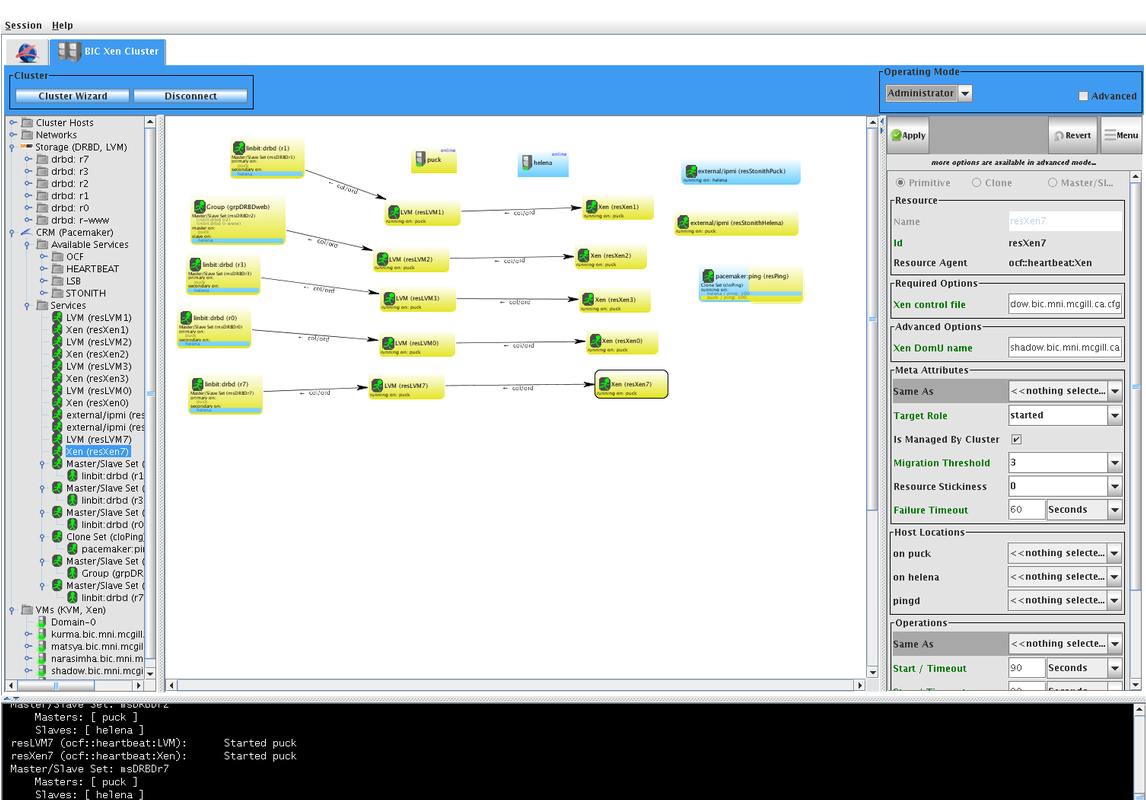

lcmc

You can connect remotely to a cluster over a secure ssh session, from a host with an X11 display, using the Linux Cluster Manager Console. The jar file can be found on the LCMC web site http://lcmc.sourceforge.net/. Start it with the command:

~# java -jar LCMC-1.1.2.jar

After connecting you’ll get a view like this.

Changing Node Status

To put a node in standby mode issue the command crm node standby <node_name>. The resources located on node node_name will then be stopped in an orderly fashion and restarted on the other node, IF there are no cluster constraints that forbid the online node to run the resources!

For instance, if a resource originally running on node1 is manually migrated to node2, then it won’t restart anywhere upon putting node2 in standby unless one un-migrates the resource before putting node2 in standby. See Manually Migrating (Moving) Resources Around for details.

To bring the node back online, type crm node online <node_name>. Resources might start migrating around, all depending on their stickiness.
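The standby/online sequence can be wrapped in a small script. This is a sketch only: run and standby_cycle are hypothetical helper names, and by default the script just prints the commands (a dry run) so it can be read through before being tried on a real node.

```shell
#!/bin/sh
# Dry run by default: print each command instead of executing it.
# Set DRY_RUN=0 to really run them on a cluster node.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# Put a node in standby, look at the cluster, then bring it back online.
standby_cycle() {
    node="$1"
    run crm node standby "$node"
    run crm_mon --group-by-node --inactive -1
    run crm node online "$node"
}

standby_cycle puck
```

In real use one would wait between the steps and check that the resources actually moved to the other node before bringing the first one back online.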

Manually Migrating (Moving) Resources Around