|

The maximum margin required for support vectors becomes more difficult to compute in the case of nonlinearly separable classes.

To accommodate for this, a new parameter, C, is introduced to our previous Lagrangian formalization to allow for a soft margin:

[formalization of Lagrangian for soft margin]

The value of C determines the penalty for misclassification about the margin; in other words, the amount of slack allowed.

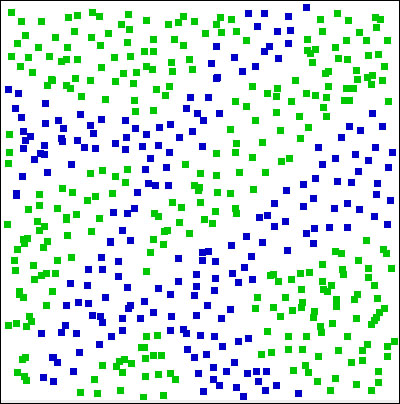

However, by itself, this penalty variable is not able to appropriately determine the boundaries for more complex sets of points such as the following.

In the nonlinear case, a mapping function is chosen to represent the original feature space in a higher dimensional space, in which an appropriate linear separator that satisfies the maximum margin properties is determined.

This separator is then mapped back into the original feature space resulting in an appropriate discriminant boundary. However, mapping the entire training space is computationally expensive.

This problem is resolved by applying the Kernel Trick. Since the original training algorithm involves only computing dot products between training points, it is not actually necessary to map individual points into the higher space. Instead, using a kernel, only these dot products are mapped. The following table provides a list of popular kernels.

[table of popular kernels]

|